Dominic Cronin's weblog

What's in store for Tridion in 2021

It's now almost a tradition for me to round off the old year by speculating about what the new year will bring for Tridion specialists. As we entered 2019 I was enjoying the return of the Tridion name that had come with the release of Sites 9 in 2018. The GraphQL endpoint was the hottest new news, and we were waving goodbye to the last of vbScript.

The predictions weren't very different as we came into 2020. The basic shape of modern Tridion architectures was starting to look a bit more fully formed. We'd be using DXA, and cloud technologies were much more front and centre. I spent a large part of 2020 rewriting an application to support this kind of architecture, and was knee deep in everything from Jenkins to OpenShift, the SDL cloud, and of course, DXA. I was working with DXA 2.2 for the new application, while other applications that were already cloudified were running DXA 2.1, so we had plenty of back and forth with support and R&D to make sure everything could co-exist on the same infra. It sometimes seems like the only way you can upgrade is if R&D manages to make all upgrades "anything first". That's a pretty tall order, but they managed to find us a route through the maze. So onwards and upwards.

The programming work for this was all done in Java, so I had to get beyond just dabbling in Spring MVC, and get some real solid coding done. So - for me at least, one of my New Year's resolutions will be to stop pretending I'm not a Java programmer. The other prediction that comes out of this is that pretty soon, most Tridion systems will look like this, and there'll be less legacy work. When Sites 9 was released in 2018, we had the first version that really, really, really wouldn't run vbScript. If you still have legacy stuff running, 2021 will be the year when you really have to "get to done", because there won't be any supported version of Tridion that can run it.

My next prediction is about the SDL cloud. In 2021, this will become a much more established approach. They'll continue to push this in the sales process as the normal way to run Tridion, and particularly for greenfield systems, that's what people will do. There are a couple of things that will drive this. Firstly, the cloud offering will mature further, and more of the day-to-day activities will become self-service. It's in everyone's interest if support tickets are only needed for "interesting things".

I'm also predicting the return of customisation. With Sites 9.5 we now have the add-ons feature - which makes it much more practical to do customisations and deploy them to highly managed environments within the scope of "sprint work". (This is the second of the things that will push us to the cloud.) For this kind of thing, it's a case of the less friction the better. If you're going to have to jump through a row of administrative hoops for every environment, your simple events system (for example) will quickly acquire enough story points that some other feature will get prioritised. If you can automate the deployment in your devops street, well of course, first you'll have to invest in being able to, but then all sorts of improvements will again become much more attractive to do. This might not sound like much, but even small enhancements can do wonders for the way people engage with Tridion. If the content team know that they can have things fixed to be more suitable for their specific way of working, they'll be much happier.

In 2021, more Tridion customers will be actively engaged with a combination of both Sites and Docs. It's there in the product, and SDL's clear direction is towards a much more integrated future. I don't think there's ever been a customer that used every single Tridion feature, and I don't suppose that's going to be any different with the Sites/Docs combination, but there will be some for whom it's a good fit and we'll see more of it.

In 2021, we will see architectures that are much more headless. Server-side applications based on DXA and other frameworks won't go away, but there will be some customers consuming all their content directly from the Public Content API. There will also be customers who take a hybrid approach, with the main site served from an application, with some features consuming the API from the browser.

The real consequences of the RWS takeover will start to become apparent. The combined company is now the biggest vendor of language services, with a huge customer base, compared with RWS and SDL previously being 4th and 5th respectively. This is probably a good thing, although their competitors will probably not take it lying down. There'll be cost savings, of course, but I'm guessing that will be limited to consolidating sales offices and the like. After any such merger, there'll be an effort to "de-duplicate" the technology. There must be quite some overlap in the language technology, but as far as I know, RWS hasn't got any existing offering that would compete with Tridion Sites and Docs. The last few years have seen SDL move away from previous ambitions of pervasive-platform-hood and put the focus back on being best-of-breed; this and the recent technical mergers of Sites and Docs are evidence that technology de-duplication has already been a clear focus for SDL. So it's probably just "business as usual", but the question remains as to whether the new company sees this division as a driver for future business. The shareholders will want to see cost savings. Will RWS have the vision to invest in the R&D to capitalise on the directions we've seen so far, or will it be just enough hay to feed the cash cow? My 2021 prediction on this is non-committal. We'll see some hints, but nobody's going to be throwing the tiller hard over, especially now that they are steering a super-tanker. This time next year, we might have a clue. The same goes for the inevitable re-branding that's coming. SDL is a strong brand, and they'll do it carefully. Presumably this time we get to keep the name Tridion! :-)

Happy New Year, everyone!

Another Access Manager gotcha

I'm on a roll today - this is my second blog post about a gotcha in the Tridion Access Manager. The first was quite technical, but this is just really a heads up about something that's fine if you expect it and know what to do.

When you first run Access Manager and you haven't yet added an identity provider, you are still able to undertake various system admin tasks (such as adding an identity provider) without being logged in. The thing is, that as soon as you successfully add an identity provider, that doesn't work any more, so at some point in the process, you have to log in at the identity provider and then you get a confirmation back from Access Manager that the identity provider has been added.

The gotcha is that it doesn't then log your browser session in as the recently confirmed account at the identity provider, so as far as the server is concerned, all that anonymous sysadmin stuff is over, and you need to be using your new account. The documentation does helpfully mention that "the first idP needs to give Administrator-level access to at least one user", but be careful. If you get it wrong, you can lock yourself out completely. (Fortunately, on my development rig it takes me about 2 minutes to drop the database and run the script to create it again. In a production scenario, you might want to have the steps very clearly mapped out and a fallback plan.)

Anyway - back to the gotcha, which is that having added your IdP, you are still not logged in. In the screencap below, you can see how it looks now that I'm successfully logged in with my "dominic" account from the IdP, but at this point, it will probably still say Anonymous in the top right corner. If you click on various buttons trying to do things, the browser will get a response that authorization is required, and it will throw up a login dialog. Unfortunately this login doesn't seem to go via the identity provider, so you're left stupidly typing in all the possible combinations of username and password that it might possibly be.

The solution is actually quite simple. Simply close your browser entirely, then open it again and return to the Access Management URL. It will ask you to log in, and you can now successfully log in with your new credentials. So it's not the end of the world, but when you are playing with new stuff, even this kind of headwind is enough to drive you bonkers. I reckon I went round this particular loop five or six times before I eventually got it right. At the same time, I was trying to tweak the identity provider so that the right claims would be added to the ID token to match what was expected for the "Username claim" and the "Full name claim" and it wasn't until I got that far that I realised what the problem was with logging in.
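When you're chasing claims like this, it helps to see exactly what the identity provider put in the ID token. Here's a minimal Python sketch, assuming you've captured the raw token somewhere (from Fiddler, say). It decodes the payload without verifying the signature, so it's for inspection only. The function names are made up for illustration, and the claim names in the usage note are simply the standard OpenID Connect ones, which is an assumption; substitute whatever you've configured as the "Username claim" and the "Full name claim".

```python
import base64
import json


def decode_jwt_payload(token):
    """Return the claims from a JWT's payload segment WITHOUT verifying the signature."""
    parts = token.split(".")
    if len(parts) != 3:
        raise ValueError("expected a token of the form header.payload.signature")
    payload = parts[1]
    # JWTs strip the base64 padding, so put it back before decoding
    payload += "=" * (-len(payload) % 4)
    return json.loads(base64.urlsafe_b64decode(payload))


def missing_claims(claims, expected):
    """List the expected claim names that are absent from the decoded claims."""
    return [name for name in expected if name not in claims]
```

Something like missing_claims(decode_jwt_payload(token), ["preferred_username", "name"]) then tells you which of the claims you were counting on never arrived, which beats another round of guessing at the login dialog.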

Hopefully this will save someone some hair-pulling.

Keylength gotcha when setting up Tridion Access management.

I'm busy setting up Access Management on my Tridion server. There are quite a lot of moving parts, so I'm working step by step and checking as I go. I've got as far as configuring the details of my identity provider, and I'm about to start kicking the tyres properly, but it's not quite working just yet. My "go to" tool for checking something like this is Fiddler: I want to see what traffic is going backwards and forwards between the Access Manager server and the identity provider, if only to see what's in the JSON, but before I can get to that, I can see that I'm getting some 500 errors when the browser calls the /access-management/connect/token endpoint.

In the response, I can see the following:

IDX10630: The 'Microsoft.IdentityModel.Tokens.X509SecurityKey, KeyId: '604BDFF176DB97F7C6D42CC4E7252C92F69F6A82', InternalId: '48edf05d-a5da-4c81-98d7-96a4e08da898'.' for signing cannot be smaller than '2048' bits. KeySize: '1024'. (Parameter 'key.KeySize')

It says I should check the logs, and sure enough, the same information is there. I'm guessing that it's trying to sign the token using the certificate I've provided. It's using the Microsoft.IdentityModel library - which is probably quite justified in complaining about an insecure key length. Maybe you'll never come across this problem in production work, but I'd just generated the certificate in OpenSSL using the defaults. Close enough for a dev box, I thought, but apparently not.

So - back to OpenSSL and this time, I've specified the key length when generating the keys.

openssl genpkey -out accmanTokenSigning.key -algorithm RSA -pkeyopt rsa_keygen_bits:2048

With the new keys, it's simply a question of generating a new CSR, signing it, and exporting it to pfx format, copying it over to my server, editing the appsettings.json to point at the new file and a quick IISRESET.

It looks like that's now working, and I can get a bit further with familiarising myself with the intricacies of the relationship between Access Manager and the identity provider.

With the best will in the world, there's no way Tridion R&D can catch every possible way in which a library from Microsoft that they use decides to be fussy. To be fair to Microsoft, it's fussy in a good way. For end users like me, it's just one more gotcha to look out for.

The main take away from this is "don't doubt yourself". When you're dealing with an unfamiliar system and it doesn't immediately behave as it should, the temptation is to just throw your hands up and assume it's beyond you to get the incantations right. It's black magic, after all, and you don't understand it. So back to the old mantra: "look in the logs, look in the other logs, and look in the logs you haven't thought of". In this case, Fiddler got me there pretty quickly - that had been my starting point, because I didn't know if it was Access Manager or the identity provider causing trouble. Even if the HTTP response hadn't said look in the logs, I would have done so fairly soon. There's always more information if you look for it.

The Tridion Expert Summit 2020

Today and yesterday have seen the Tridion Expert Summit 2020, an online conference that in some ways is the successor of the Tridion Developer Summit, and in other ways is definitely something different. It's hard to say how much of the difference comes from it being online. I definitely missed the personal interactions, but none of us would have wished to ignore the need to be responsible regarding covid. I hope next year we'll see this evolve into a "physically present" event.

As an online event, I have to say I was impressed with the platform. SDL/RWS had used SpotMe, and the combination of being able to follow the live stream while simultaneously interacting with the agenda, Q&A, and to some extent with other attendees, was pretty smooth.

Pretty much all recent Tridion events have had an uncomfortable divide between the "sites" audience and the "docs" people. To some extent, we're all still in our individual silos, and material relevant to one group is less relevant to the other. Still, to an increasing degree, we're seeing content that's of common interest, such as the combined architecture and how it all hangs together. For me, at least, it was cool to see that my old team-mate Ivo van de Lagemaat, these days a product owner on Docs, hasn't forgotten his old coding skills! He put together a working demo without any trouble in "What metadata in Tridion Docs can teach us about decoupled metadata integration and the future of taxonomy features".

Many of the topics were covered from different aspects by various speakers. The main points from a Sites perspective were:

Data publishing

The evolution of publishing models over the years, and how we've come eventually to what's described as "Data publishing": in other words, the component template is no more. (Not really, because they'll have more than one publishing model.) Tridion's always had a better-than-most claim to supporting "headless" but now there'll be a dedicated and optimised publishing model for it.

Tridion integration framework

A technology-agnostic basis for plug-ins and integrations.

Taxonomies and smart tagging

External taxonomies become more of a first-class citizen. Content can be tagged automatically, and then the tagging reviewed and tweaked by a human.

CoreService.REST

A modern take on what we used to call the TOM, this time based on OpenAPI definitions.

Tridion Access management

A unified approach for integrating with external identity providers.

All told, there was plenty of interesting material. Thanks to all who spoke and to everyone involved in organising it.

Programmatically changing the Publishable flag on a Category

Not long ago I was writing a script which, among many other things, needed to set the Publishable property of a category. In the Tridion user interface, a category has a checkbox, large as life, that says "Publishable". How hard could it be, I thought. :-)

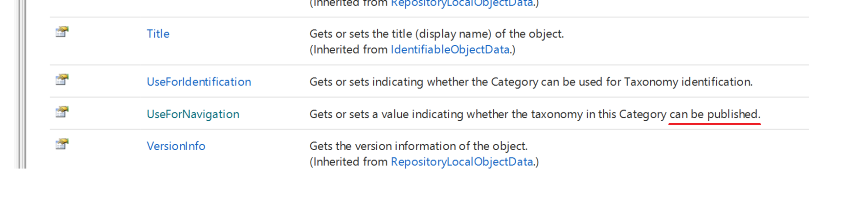

It turns out that when you work with the API (in this case, the core service), it's not called Publishable (or any variation on that), but UseForNavigation.

I kind of get it. Back when categories first could be published, the focus was on using them to build navigations. There's even a note in the documentation that says "Before SDL Tridion 2009 the behavior was get or set whether the taxonomy can be used for navigation."

Well I suppose every product as complex (and powerful) as Tridion will have its history and quirks. In fact, it only cost me a few minutes to figure this out, so it's not really a problem. I'm still going to file this post under "gotchas" though!

Don't mount your Hyper-V disk and change the contents if it has checkpoints

I just managed to get myself into trouble with Hyper-V. I'm busy setting up a new Tridion image, so I'd started with a fresh Windows Server Essentials install, and then once I had that running, I wanted to copy a handful of installers from the host computer to the new image. What could be simpler than just mounting the VHDX, I thought. Wrong!

So.... mounting the virtual disk is easy, you just right-click on the file, and Windows offers you a Mount option in the context menu. You have to stop the virtual server first, but OK. So I did this - copied my installers over, unmounted the drive with the Eject option from the context menu of what had become E: and went back to start the image again. This promptly failed with various messages that said there was a mismatch between the differencing virtual disk and the parent disk. I hadn't really wanted a differencing disk (which is what it turns out a checkpoint is really called). Checkpoint shmeckpoint... this is not a highly available super reliable server I'm building. Anyway - checkpoints... it looks like it's just journaling; all your edits go in the checkpoint file, and I suppose a restore is just deleting the checkpoint file.

Enough speculation - what it means is that for the disk to work, Hyper-V has to know the order that the various slices are layered on top of each other, and when it does that - it also does some integrity checking. Not a bad thing, you might think, but adding some files had presumably borked a checksum or whatever, and it was throwing up the mismatch message. Apparently you can fix this through the Inspect button in the user interface, but then I got a different error. Fortunately - everything in Hyper-V works from the command line too, so from the powershell I was able to do the following and it was all good again.

Set-VHD .\some_checkpoint_or_other.avhdx -ParentPath .\TheVirtualDisk.vhdx -IgnoreIdMismatch

If you have more checkpoints, you can make each one in turn a parent of the other in the same way.

Mostly - this post is about the fact that it's apparently stupid to mount a VHDX and edit it, and nobody had told me. Next time I'll just run up a share.

So what use is that Discover-EnvironmentCapabilities.ps1 script anyway?

Not long ago, I posted a powershell script on Tridion Practice that allows you to connect to a Tridion discovery service and read out the capabilities that are offered by the corresponding content delivery environment. Well that's a pretty good party trick as far as it goes, but hey maybe you already had a pretty good idea of how you've got things set up, so it's kind of a one-trick pony kind of thing eh? Well it turns out that the script is much more useful than just that.

For starters, I'd like to mention that I've recently updated the script so that it also lists the Model service if it's there, mostly because I was interested in querying it directly. When you are building a DXA application, you can always throw the logging into DEBUG to see the service calls, but it's also very handy to be able to do the service calls yourself, without your application in the way. After all, "divide and conquer" is the most ancient debugging technique of all. Isolate your problem to get a better look at it. Still - as many of the APIs are not public and documented, the debug logging is probably your first port of call to figure out how the query ought to look.

Especially if you're dealing with an automatically provisioned system such as SDL's cloud, the only reliable way to get the service urls is to ask the discovery service for them, so when I wanted to query the model service, I'd first need to do that anyway. Actually I first needed to know the localization, so I was starting with the content service. I didn't want to be re-typing URLs, so actually for my first quick-and-dirty, I just copied all the discovery code into my script and hacked in a $capabilities variable to capture all the output from the foreach loop. Something like this:

    $capabilities = foreach($capabilityName in $capabilityNames) {
        # invoking the discovery service and returning PSObjects
    }

Then I could just follow up with

$contentUri = ($capabilities | ? {$_.Capability -eq 'ContentServiceCapability'}).'Service URI'

$queryUri = ($contentUri -replace '/content.svc','/client/v4/content.svc') + "/GetPublicationMappingsFunctionImport(Url='$url')"

Invoke-RestMethod -Method Get -Uri $queryUri -Headers @{Authorization=$Authorization}

(You've probably realised by now that if you want to follow along at home, it's probably best to start by visiting the cookbook recipe from the link above and downloading the script.) Anyway - this worked great, and gave me an XML document from which I could dig out the PublicationId. I'd simply used the same $Authorization variable that I'd created to use with the Discovery service, which of course is valid for the other services too. (BTW - if JSON is your poison, just fix up the -Headers parameter to be @{Authorization=$Authorization;Accept='application/json'})
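The lookup and the URL surgery aren't tied to PowerShell. As a rough sketch in Python, assuming the capabilities have been read into a list of dictionaries with the same 'Capability' and 'Service URI' keys that the script's PSObjects use (the function names, and any host names you try it with, are hypothetical):

```python
def service_uri(capabilities, capability_name):
    """Find the 'Service URI' for a named capability, like the Where-Object filter."""
    for capability in capabilities:
        if capability["Capability"] == capability_name:
            return capability["Service URI"]
    raise LookupError("no such capability: " + capability_name)


def publication_mapping_uri(content_uri, url):
    """Swap in the v4 client path and append the function import call."""
    # Note: PowerShell's -replace is a regex match; this is a plain literal
    # replacement, which is enough for this particular path.
    v4_uri = content_uri.replace("/content.svc", "/client/v4/content.svc")
    return v4_uri + "/GetPublicationMappingsFunctionImport(Url='" + url + "')"
```

The url value is dropped in as-is, exactly as in the PowerShell version, so anything that needs escaping is still your problem.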

Now I was ready to call the Model service, but the thought of yet another script that copied in the discovery code was starting to make me feel a bit itchy around the DRY principle. I mean... no need to be a fanatic, but enough is enough, eh? So then I realised I didn't have to copy it. All I needed was to "dot source" the existing script. I had the discovery script in the same folder, so my entire script ended up being 4 lines:

$capabilities = . .\Discover-EnvironmentCapabilities.ps1

$modelUri = ($capabilities | ? {$_.Capability -eq 'ModelServiceCapability'}).'Service URI'

$queryUri = $modelUri + "/PageModel/tcm/309/nl/blah/foo/index?includes=INCLUDE"

Invoke-RestMethod -Method Get -Uri $queryUri -Headers @{Authorization=$Authorization}

"Dot sourcing" in powershell (and also in some other shells) means using the dot operator to execute another script in your current context. The dot operator is the first of the two dots on the right hand side of the $capabilities assignment. This meant that not only did I manage to populate $capabilities with the return value of the script (a list of PSObjects describing capabilities) but any variables that were assigned in the discovery script were now also available for use locally. This meant that I could just use $Authorization and thereby avoid having to do all the tedious OAuth wrangling again.

So while the architectural purist in me is quietly cursing, spitting and mumbling about dependencies and side effects, my inner scripting hacker is bouncing around with glee. This is great! Re-use FTW!!! (Yes, yes, I should probably factor out the OAuth stuff too, some day, maybe...)

Anyway - this is just too handy not to share. Hope you all enjoy it.

Constructing an ImportExport ItemsSelector in Powershell

I've used the Tridion ImportExport API a couple of times from the PowerShell, and until now, I didn't really have any reason to use anything except a Subtree selector for my exports. If you put your items in a bundle, this is what you use, and for the rest, mostly what you want is everything in a folder or structure group. Invoking the constructor of SubtreeSelection usually looks something like this:

$selection = New-Object Tridion.ContentManager.ImportExport.SubtreeSelection $someOrgItemUrl,$true

This is fine because the arguments are both single variables. The trouble comes when you want to construct an Items Selector. Your first attempt probably looks like:

$items = @($itemUrl)

$selection = New-Object Tridion.ContentManager.ImportExport.ItemsSelection $items

You're probably thinking: I only want one item, but the constructor expects an [IEnumerable[string]] so I'll just use the array subexpression operator @() to force my single item to be an array and let Powershell take care of the rest of the magic of casting to IEnumerable. Powershell for the easy life, eh?

But it doesn't work. You get back some message like

New-Object : Cannot convert argument "0", with value: "foobar", for "ItemsSelection" to type "System.Collections.Generic.IEnumerable`1[System.String]": "Cannot convert the "foobar" value of type "System.String" to type "System.Collections.Generic.IEnumerable`1[System.String]"."

So what's going on here? It turns out that Powershell thinks that constructor parameters shouldn't be collections. However you want to imagine that, its type resolution logic ends up converting your collection back to a single item (presumably the first) which the constructor promptly rejects. I went through various hoops trying to force things to be an array, or a single item containing an array. You can create your array either with the subexpression operator @(), or just with a unary comma operator ($foo = ,$itemUrl) but I ended up calling split with an empty delimiter. I'm not saying it's pretty, but it worked for me. I then also cast it explicitly to the expected collection type. In Powershell v5, the constructor is available using the static method syntax on the type, and calling the constructor this way is less prone to type resolution magic messing things up. Don't ask me exactly how. I have no idea. Anyway - this is what worked eventually:

[System.Collections.Generic.IEnumerable`1[System.String]]$items = $itemUrl.split('')

$selection = [Tridion.ContentManager.ImportExport.ItemsSelection]::new($items)

I hope this saves somebody some hair pulling and Googling.

Docker integration with WSL2

I have just set up the Docker/WSL2 integration on my computer, and it looks very promising.

Update: I've now just set my WSL back to version 1 and reinstalled Docker. As I said - it looks promising, but we're not there yet. Fair enough - running on the insider release of Windows and with a "beta" flag set in Docker, you can't really complain if it stops working. For now, I need it working, so back to the old set up. I'm still looking forward to when they get it stable.

Adding an authorization header for the Tridion content service using Fiddler

I've started to experiment with the GraphQL API offered by Tridion Sites 9's Content service. The obvious way to do this is to use the GraphiQL endpoint. On my system I can do this by pointing my browser at http://cd.local:8081/cd/api/graphiql. The only fly in the ointment is that the service expects an OAuth header, so you have to take care of that yourself. The guidance I've seen so far is to use a browser plugin like Requestly to do this, so I duly installed it, and was able to get successful query responses instead of the dreaded 'invalid_grant'. All well and good, but honestly, it's a right faff. Firstly, the plugin itself is clunky, so to open the relevant config window, you're at least several clicks away from sorting out your authorization header, which wouldn't be too bad, but the darned things keep timing out, so you keep having to repeat the procedure. Maybe there's a better plugin, but I figured life's too short. I use Fiddler quite often for faking various scenarios and making test setups work a bit more like they are supposed to in the real world, so why not knock off a quick Fiddler script and be done with it.... I thought!

Actually - it turned out to be a bit fiddly, but I now have it working, so time to share. Usual disclaimers.... it's not very polished. It works for my scenario, and if yours is different you'll have to use the source, Luke.

So - go and open up Fiddler and head to the FiddlerScript button or go to the Rules > Customize Rules menu option. Once you have a script editing screen in view, you should be able to find the function OnBeforeRequest(oSession: Session). Inside this function, paste in the following code and fix it up to meet your own bizarre preferences:

    // Only touch requests bound for the content service
    if (oSession.uriContains("http://cd.local:8081/cd/api")) {
        var client_id = "cduser";
        var client_secret = 'CDUserP@ssw0rd';
        var strBody = "client_id=$client_id&client_secret=$client_secret&grant_type=client_credentials&resources=%2F".replace("$client_id",encodeURIComponent(client_id)).replace("$client_secret",encodeURIComponent(client_secret));

        // SendRequestAndWait wants the body as a byte array
        var arrBody = new byte[strBody.length];
        for (var i = 0; i < strBody.length; i++) {
            arrBody[i] = strBody.charCodeAt(i);
        }

        // Build the POST to the token service
        var oHeaders = new HTTPRequestHeaders();
        oHeaders.RequestPath = "http://cd.local:8082/token.svc";
        oHeaders["Content-Type"] = "application/x-www-form-urlencoded";
        oHeaders["Host"] = "cd.local:8082";
        oHeaders.HTTPMethod = "POST";
        oHeaders["Content-Length"] = arrBody.length;

        var oAuthSession = FiddlerApplication.oProxy.SendRequestAndWait(oHeaders, arrBody, null, null);
        if (200 == oAuthSession.responseCode) {
            // Copy the token into the original request as its Authorization header
            var oJSON = Fiddler.WebFormats.JSON.JsonDecode(oAuthSession.GetResponseBodyAsString());
            oSession.RequestHeaders.Add("Authorization", oJSON.JSONObject["token_type"] + ' ' + oJSON.JSONObject["access_token"]);
        }
        else {
            MessageBox.Show("Bad Auth: " + oAuthSession.responseCode);
        }
    }

If you now go back to your GraphiQL page, you should find that your requests are authorised. If it doesn't work, make sure that you've removed your rule out of Requestly or whatever you've been using; given two "Authorization" headers, the service will very likely not behave nicely.

There are plenty of obvious improvements that can still be made. For example, it's probably fairly easy to switch this on and off with a setting in Fiddler, or to check for an existing Authorization header.
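If you'd like to see the token exchange without Fiddler in the way, here's a minimal sketch of the same steps in Python, using only the standard library. It assumes the token service URL, the form fields and the token_type and access_token response fields are exactly as in the FiddlerScript above; the function names are hypothetical, and needs_authorization is one possible take on the "check for an existing Authorization header" improvement rather than anything Fiddler itself provides.

```python
import json
import urllib.parse
import urllib.request

TOKEN_URL = "http://cd.local:8082/token.svc"  # as used in the FiddlerScript


def build_token_body(client_id, client_secret):
    """Form-encode the fields that get posted to the token service."""
    return urllib.parse.urlencode({
        "client_id": client_id,
        "client_secret": client_secret,
        "grant_type": "client_credentials",
        "resources": "/",
    })


def authorization_value(token_response):
    """Join token_type and access_token into an Authorization header value."""
    return token_response["token_type"] + " " + token_response["access_token"]


def needs_authorization(headers):
    """True when the request has no Authorization header yet (any casing)."""
    return not any(name.lower() == "authorization" for name in headers)


def fetch_authorization(client_id, client_secret, token_url=TOKEN_URL):
    """POST the credentials and return a ready-to-use Authorization value."""
    request = urllib.request.Request(
        token_url,
        data=build_token_body(client_id, client_secret).encode("ascii"),
        headers={"Content-Type": "application/x-www-form-urlencoded"},
    )
    with urllib.request.urlopen(request) as response:
        return authorization_value(json.load(response))
```

Calling fetch_authorization only when needs_authorization says so avoids ending up with two Authorization headers on the same request.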

Anyway - this is going to make my life much nicer as I play with the API.