Dominic Cronin's weblog

2020 foresight. How will the New Year look for Tridion specialists?

A year ago, I wrote a similar blog post about the year 2019 which we were then entering. Looking back, it wasn't a bad set of predictions. More Sites 9, more DXA, the end of vbScript, the beginning of GraphQL, more cloud computing and devops. OK - so I wasn't exactly making bizarre claims or strange predictions: something more akin to "more of the same", "keep up the good work" and generally keeping steadily on towards the projects and architectures of the future.

So I didn't get it too badly wrong. How well will I do this time? Well let me start with my first prediction. This coming year will really, really, definitely and for ever see the absolute, total and utter end of vbScript templating as part of what Tridion people have to do. Really. Truth be told, this is going to be a better prediction than it was last year. At my current customer, there are still one or two pockets of resistance, but the new architecture is in place, and a relatively straightforward implementation should see the job done.

For myself, I've seen a very welcome increase in the amount of DXA work and devops, including Java, Jenkins and OpenShift, combined with SDL's cloud offering. I've also had the chance to bring my front-end skills up to date, and to embrace the notion of being a "T-shaped" agile team member. The reality for Tridion specialists has always been that we are generalists too, and most of us have spent our days doing whatever it takes to get enterprise level web applications up and running. As we now see further shifts in architectural emphasis, there will be more going on in the browser, so we'll be there. I hesitate to say "full-stack", because that's a stereotype in itself, so perhaps the old term "n-tier" is closer. I expect in 2020 that we'll see further differentiation in front-end work, so that there'll perhaps be a clearer division between front-end-front-end and back-end-front-end. With modern frameworks, there's plenty for a programmer to do in the browser without getting intimately involved in the intricacies of the presentation layer.

Tridion itself is also steadily moving towards supporting these architectures, with the GraphQL API and expected architectural shifts towards getting a very efficient "publishing pipeline". It can be tempting to see things through the lens of how we'd build our current applications on the new architecture, but at the Tridion Developer Summit 2019, I was involved in a very interesting round-table discussion that was supposed to be about headless WCMS. We ended up discussing how new architectures could bring new possibilities to the kind of web sites people create, or want to create. The availability of advanced queries on a published data store opens the door to designs that part company with the "traditional" model of a hierarchy of web pages. Of course, these possibilities have been available for quite some time, but mostly for very big sites where search is a far more comfortable navigation paradigm than hierarchy. As it gets progressively easier to do, we'll see some shifts in what people expect. I'm not saying every site will end up as a single-page application, but we'll see variations on all the traditional themes, and some we've not seen yet. The future is out there.

As the worlds of web site design and web application architecture morph into something new, Tridion's role is both the same and different. If you want headless, of course, Tridion can do that, but the reasons you'll want Tridion are much the same as they've always been: superb content management features, technical excellence, scalability, blueprinting, and the ability to integrate with anything. It's great to be able to take the new stuff for granted, but also the old stuff.

In 2020, I think we will see an acceptance in the industry that Tridion is back! In the early days, it was always pitched as a "best of breed" system that did what it did very well and integrated very well with other nearby systems. The typical Tridion customer didn't want it to be a document management system or a customer relations management system: they had those already. They also clearly didn't want it to become a pervasive platform that would be a one stop shop for a one size fits all. A few years ago, SDL departed from the "best of breed" identity that had served it so well, and in doing so, damaged its own performance in the market. Fortunately, these things were corrected, but it's like steering a super-tanker; it took some time to see the results of the correction, and then people wanted to just check the course for a little while longer. We're now far enough to be able to say that the good ship Tridion is on course and sailing for better weather.

2020 is going to be a good year!

Happy New Year.

Querying the Tridion GraphQL service with Powershell

Yet another in the ongoing series of "If it's Tridion, I want to be able to do it in Powershell" :-)

Now available for your delectation up on Tridion Practice

Is it the end of the component presentation?

Among the most interesting talks at the recent Tridion Developer Summit was one by Raimond Kempees and Anton Minko, in which they looked into their crystal ball to give some hints about the direction content delivery is going in. In brief, the news is that Tridion R&D are now following through on the consequences of recent changes in the way web content management is done. The market is demanding headless Web CMS systems, and although Tridion's current offering is fully able to punch its weight as a headless system, the focus looks as though it's going even further in that direction. Buzzwords aside, the reality is that templating is moving further and further away from the content manager, and will probably end up being done largely in the browser.

This blog post is not about the death of the server-based web application: that might be a little premature, but it's really clear that we won't be doing our templating on the content manager. Apart from legacy work, this is already the case for most practical purposes. If you are using a framework like DXA, you will most likely not wish to modify the templating that comes with the framework. The framework templating doesn't render output, but presents the data from your components and pages in a generic format such as JSON. Assuming that all the relevant data is made available, you shouldn't ever need to intervene at this stage in the proceedings. Any modifications or customisations you may wish to make will probably be done in C#, and not in a templating language. (Tridion's architecture is very flexible, and I'm talking here about where mainstream use of the product is going. There are still organisations whose current work looks very different to this, and they have their own very good reasons for that.)

So the templating has already moved out to DXA, and there's an advanced content service which offers JSON data which you specify in GraphQL queries. Architectural decisions in your project are still likely to be about what should happen on the server and what in the browser, but you won't be doing much on the content manager beyond, erm... managing content.

Among the interesting new directions sketched out in the talk were the following:

- A "native" data format for publishing. Effectively - instead of templates generating JSON, Tridion itself will do this, so you won't need templates any more.

- A fast publishing pipeline to ensure that the content gets from the content manager to the content service in a highly efficient manner.

All well and good, but somewhere in there, almost as a throwaway line, they touched on the end of the component presentation. Well, it's kind of a logical conclusion in some ways, but when they mentioned this, I had a kind of "whoa" moment. So don't throw the baby out with the bath water, guys!

Seriously - for sure, if we don't have component templates any more, we can't really have component presentations, can we? Well yes, of course, we can, and both DD4T and DXA have followed the route of using good-old-fashioned page composition in Tridion, with the editors selecting component templates to indicate how they'd like to see the component rendered. In practice, all the component templates are identical, but the choice serves to trigger a specific "view" in the web application. The editorial experience remains the same as ever, and everyone knows what they are doing.

So in practice, instead of a page being a list of component presentations, it's become a list of components, with some sort of metadata indicating the choice of view for each component. That metadata doesn't need to be a component template, and you can see why they'd want to tidy this up. If you're writing a framework, you use the mechanisms available to you, but Tridion R&D can make fundamental changes when it's the right thing to do.

So they could get rid of component presentations. My initial reaction was to think they'd still have to do something pretty similar to "page composition as we know it", but that got me thinking. We now have page regions, which effectively turn a page from one list into a number of smaller lists. With this in place, it comes down to the fundamental reasons that we have traditionally used different component presentations. It's very common to have page template/view logic that does something like: get all the link-list components and put them in the right-hand side bar, or get the main component and use it to render the detail view in the main content area, or get all the content components and put them in the main content area. These very common use patterns are easily coped with by using regions, but maybe there are other cases where it would still be handy to specify your choice of view for a given component.
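To make the difference concrete, here's a minimal sketch in Python of the two page models. The class names, schema names and region names are all made up for illustration; this is not how Tridion or DXA actually model a page.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Component:
    title: str
    schema: str  # hypothetical schema names such as "Article" or "LinkList"


@dataclass
class FlatPage:
    # The traditional model: one ordered list for the whole page.
    components: List[Component] = field(default_factory=list)


@dataclass
class RegionPage:
    # The region model: several smaller, named lists.
    regions: Dict[str, List[Component]] = field(default_factory=dict)


def sidebar_from_flat_page(page: FlatPage) -> List[Component]:
    """Template-style logic: pick the link lists out of the single list."""
    return [c for c in page.components if c.schema == "LinkList"]


def sidebar_from_regions(page: RegionPage) -> List[Component]:
    """With regions, the editor has already put things where they belong."""
    return page.regions.get("Sidebar", [])


article = Component("Welcome", "Article")
links = Component("Related links", "LinkList")

flat = FlatPage([article, links])
regional = RegionPage({"Main": [article], "Sidebar": [links]})

print([c.title for c in sidebar_from_flat_page(flat)])
print([c.title for c in sidebar_from_regions(regional)])
```

Both calls end up with the same side bar. The difference is that the flat page needs logic that switches on the schema, while the region page simply reads out what the editor chose.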

Actually, a lot of our work in page templates over the years has been about working around the inflexibility of a page being simply a list of component presentations. We've written logic that switched on which schema it was, or maybe a dozen other things, to achieve the results we needed to. Still, that simple familiar model has a lot to be said for it, and I suspect there are cases where regions on their own probably aren't enough.

I've really appreciated the way product managers at Tridion have reached out to the community in recent times to validate their ideas and share inspiration. I have every confidence that the future of pages in a world without component presentations will be the subject of similar consultations. The Tridion of two or three versions hence might look a lot different, but it will probably also have a lot of familiar things. It's all rather exciting.

Room for a little YAGNI in the DXA :-)

I wouldn't usually call out open source code in a blog post, but honestly, this made me Laugh Out Loud in the office yesterday. I'd ended up poking around in the part of the Tridion Digital Experience Accelerator framework (DXA) that deals with media items. Just to be clear, the media items in question would usually be either binaries that have some role in displaying your web site, or in this case, more specifically, downloads such as a PDF or whatever. The thing that made me laugh was in a function called getFriendlyFileSize(). A common use case for this would be to display a file size next to your download link so the visitor knows that they can download the PDF fairly quickly, or that maybe they'd better wait until they're on the Wifi before attempting that 10GB ISO file.

getFriendlyFileSize() converts a raw number of bytes into something like 13MB, 7KB, or 5GB. What made me laugh was the fact that the author has also very helpfully included support not only for GigaBytes, but also TeraBytes, PetaBytes and ExaBytes.
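For illustration, here's roughly what such a function does, as a minimal Python sketch. This is not the DXA implementation: the function name, the 1024-based units and the rounding to whole numbers are all my own assumptions, and this version stops at GB.

```python
def friendly_file_size(size_in_bytes: int) -> str:
    """Convert a raw byte count into a short label such as 13MB."""
    units = ["B", "KB", "MB", "GB"]  # deliberately stops at GB
    size = float(size_in_bytes)
    index = 0
    # Keep dividing by 1024 until the number is small or we run out of units.
    while size >= 1024 and index < len(units) - 1:
        size /= 1024
        index += 1
    return f"{size:.0f}{units[index]}"


print(friendly_file_size(13 * 1024 * 1024))  # 13MB
print(friendly_file_size(7 * 1024))          # 7KB
print(friendly_file_size(5 * 1024 ** 3))     # 5GB
```

Anything beyond the last unit just shows up as a large number of GB, which is probably good enough for a download link.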

Sitting in my office right now, I'm getting about "140 down" from Speedtest. That is to say, my download speed from the Internet is about 140 Mbps, which works out in practice that if I want to grab the latest Centos-with-all-the-bells-and-whistles.ISO (let's say 10GB) it'll take me about 10 minutes. Let's say I want to scale that up to 10PB, then we're talking about 18 years or so, and at 10EB some 18,000 years, which somewhat exceeds the longest web server uptime known to mankind by a few orders of magnitude and then some.
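For anyone who wants to check the arithmetic, here's a back-of-envelope sketch in Python. It assumes decimal units (1GB = 10^9 bytes), a perfectly sustained 140 Mbps and a 365-day year, none of which the real world will honour.

```python
LINK_MBPS = 140                      # the "140 down" from Speedtest
SECONDS_PER_YEAR = 365 * 24 * 60 * 60

GB = 10 ** 9
PB = 10 ** 15
EB = 10 ** 18


def download_seconds(size_in_bytes, mbps=LINK_MBPS):
    """Time to move size_in_bytes over a link of mbps megabits per second."""
    bits = size_in_bytes * 8
    return bits / (mbps * 1_000_000)


ten_gb_minutes = download_seconds(10 * GB) / 60
ten_pb_years = download_seconds(10 * PB) / SECONDS_PER_YEAR
ten_eb_years = download_seconds(10 * EB) / SECONDS_PER_YEAR

print(f"10GB: about {ten_gb_minutes:.0f} minutes")
print(f"10PB: about {ten_pb_years:.0f} years")
print(f"10EB: about {ten_eb_years:,.0f} years")
```

With these assumptions, 10GB comes out at a little under ten minutes, and each step up the unit ladder multiplies the wait by a thousand.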

Well maybe this is just old-fashioned thinking, but I'm inclined to think we don't need friendly Exabyte file sizes for website media downloads just yet. In the words of the old Extreme Programming mantra, "You ain't gonna need it". (YAGNI)

I'm not here to take a rise out of the hard-working hackers that contribute so much to us all. Really. I can't say that strongly enough. It made me laugh out loud, that's all.

Discovering content delivery environment capabilities... on Tridion practice

I recently wrote a script to query the capabilities of a Tridion content delivery environment. Rather than post it here, I've put it up on Tridion Practice. After all, it's about time "practice" got a bit of love. I think I'll try to get a bit more fresh material up there soon.

I hope you'll find the script useful. Dare I also hope that some of you might be inspired to contribute something to Tridion Practice? It's always been the intention that we'd try to get contributions from a range of practitioners, so please feel welcome. Cookbook recipes are probably the most prominent part of the site, but the patterns and practices sections are also interesting. Come on down!

Watch out for this Tridion quantum leap!

With the release of SDL Tridion's Sites 9.1, they've made a startling leap in their platform support for Java.

In Sites 9, Java 8 is supported. It's not deprecated, and Java 11 is not yet mentioned.

In Sites 9.1, Java 8 support is totally gone, and the only supported version is Java 11.

I was certainly caught out. In trying to puzzle out a plausible upgrade path, before I'd actually read through and absorbed all the details, I'd raised a support ticket that turned out to be unnecessary, or at least premature. We'll be going to Sites 9, so the leap to Java 11 is a little further up the road.

I get it that they have all sorts of good reasons for this. The only possible scenario I can think of for not deprecating Java 8 is that they didn't know this was needed when they released Sites 9. It's also true that we have very, very little of the Tridion product actually baked in to the application these days.

I'm still calling this a gotcha.

Peering behind the veil of Tridion's discovery service

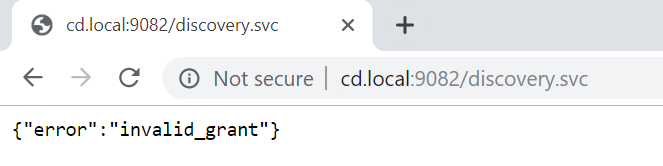

Many of us have configured applications that use Tridion's discovery service to find the various other content delivery services. All these services can be secured using oAuth, and the discovery service itself is no exception. It's almost a rite of passage to point a browser at the discovery endpoint only to see something like this:

In order to be allowed to use the discovery service, you need to provide an Authorization header when you call it, and otherwise, the "invalid_grant" error is what you get. Some of you may remember a blog post of mine from a few years ago where I showed how to call the content service with such a header. The essence of this was that first you call the token service with some credentials, and it hands you back a token that you can use in your authorization header.
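As a reminder of the shape of that exchange, here's a minimal Python sketch. The form fields are the standard OAuth2 client-credentials ones, which I'm assuming is what the token service expects; the JSON response with an access_token property and the Bearer scheme are likewise assumptions, and the credentials are placeholders. Nothing is actually sent over the wire here.

```python
import json
import urllib.parse
import urllib.request


def build_token_request(token_url, client_id, client_secret):
    """Build (but don't send) the POST that asks the token service for a token."""
    form = urllib.parse.urlencode({
        "grant_type": "client_credentials",  # assumed field names
        "client_id": client_id,
        "client_secret": client_secret,
    }).encode("ascii")
    return urllib.request.Request(
        token_url,
        data=form,
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )


def authorization_header(token_response_body):
    """Turn the token service's JSON reply into a header for later calls."""
    token = json.loads(token_response_body)["access_token"]
    return {"Authorization": "Bearer " + token}


# Placeholder URL and credentials, purely for illustration.
request = build_token_request("http://cd.local:9082/token.svc", "cduser", "not-a-real-secret")
# Sending it would be: urllib.request.urlopen(request).read()

# A made-up response body, standing in for what the service might return.
header = authorization_header('{"access_token": "abc123", "token_type": "bearer"}')
print(header)
```

The resulting header is what you then pass along with your calls to the discovery service or the content service.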

That was when Web 8 was new, and I remember wondering, given that you only need to provide a discovery endpoint, how you could arrive at the location of the token service in order to get a token in the first place. I've seen enough code in the intervening period that takes a very pragmatic line and just assumes that the token service is to be found by taking the URL of the discovery service and replacing "discovery.svc" with "token.svc". That works, but it's somehow unsatisfactory. Wasn't the whole point of these service architectures that things should be discoverable and self-describing? Back then I'm fairly sure, after a cursory glance at https://www.odata.org/, I tried looking for the $metadata properties, but with the discovery service that just gets you an invalid grant.

I recently started looking at this stuff again, and somehow stumbled on how to make it work. (For the life of me, I can't remember what I was doing - maybe it's even documented somewhere.) It turns out that if you call the discovery service like this:

http://cd.local:9082/discovery.svc/TokenServiceCapabilities

it doesn't give you an invalid grant. Instead it gives you an oData response like this:

    <?xml version="1.0" encoding="UTF-8"?>
    <feed
        xmlns:metadata="http://docs.oasis-open.org/odata/ns/metadata"
        xmlns:data="http://docs.oasis-open.org/odata/ns/data"
        xmlns="http://www.w3.org/2005/Atom" metadata:context="http://cd.local:9082/discovery.svc/$metadata#TokenServiceCapabilities" xml:base="http://cd.local:9082/discovery.svc">
      <id>http://cd.local:9082/discovery.svc/TokenServiceCapabilities</id>
      <title></title>
      <updated>2019-09-04T19:28:36.916Z</updated>
      <link rel="self" title="TokenServiceCapabilities" href="TokenServiceCapabilities"></link>
      <entry>
        <id>http://cd.local:9082/discovery.svc/TokenServiceCapabilities('DefaultTokenService')</id>
        <title></title>
        <summary></summary>
        <updated>2019-09-04T19:28:36.916Z</updated>
        <author>
          <name>SDL OData v4 framework</name>
        </author>
        <link rel="edit" title="TokenServiceCapability" href="TokenServiceCapabilities('DefaultTokenService')"></link>
        <link rel="http://docs.oasis-open.org/odata/ns/related/Environment" type="application/atom+xml;type=entry" title="Environment" href="TokenServiceCapabilities('DefaultTokenService')/Environment"></link>
        <link rel="http://docs.oasis-open.org/odata/ns/relatedlinks/Environment" type="application/xml" title="Environment" href="TokenServiceCapabilities('DefaultTokenService')/Environment/$ref"></link>
        <category scheme="http://docs.oasis-open.org/odata/ns/scheme" term="#Tridion.WebDelivery.Platform.TokenServiceCapability"></category>
        <content type="application/xml">
          <metadata:properties>
            <data:id>DefaultTokenService</data:id>
            <data:LastUpdateTime metadata:type="Int64">1566417662739</data:LastUpdateTime>
            <data:URI>http://cd.local:9082/token.svc</data:URI>
          </metadata:properties>
        </content>
      </entry>
    </feed>

Looking at this, you can just reach into the "content" payload and read out the URL of the token service. So that explains the mystery, even if the possibility of doing a replace on the discovery service URL also counts as sufficient to render it unmysterious.
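Here's a minimal Python sketch of both approaches side by side: reading the URI out of the feed, and the pragmatic string replace. The sample is a trimmed-down copy of the response above, and in a real script the XML would of course come from an HTTP GET rather than a string constant. The function name is my own.

```python
import xml.etree.ElementTree as ET

NAMESPACES = {
    "atom": "http://www.w3.org/2005/Atom",
    "m": "http://docs.oasis-open.org/odata/ns/metadata",
    "d": "http://docs.oasis-open.org/odata/ns/data",
}

# Trimmed copy of the TokenServiceCapabilities response shown above.
SAMPLE_FEED = """<feed
    xmlns:metadata="http://docs.oasis-open.org/odata/ns/metadata"
    xmlns:data="http://docs.oasis-open.org/odata/ns/data"
    xmlns="http://www.w3.org/2005/Atom">
  <entry>
    <content type="application/xml">
      <metadata:properties>
        <data:id>DefaultTokenService</data:id>
        <data:URI>http://cd.local:9082/token.svc</data:URI>
      </metadata:properties>
    </content>
  </entry>
</feed>"""


def token_service_uri(feed_xml):
    """Read the token service URI out of a TokenServiceCapabilities feed."""
    root = ET.fromstring(feed_xml)
    uri = root.find("atom:entry/atom:content/m:properties/d:URI", NAMESPACES)
    if uri is None or not uri.text:
        raise ValueError("No token service URI found in the feed")
    return uri.text.strip()


discovered = token_service_uri(SAMPLE_FEED)

# The pragmatic alternative: assume the two services live side by side.
guessed = "http://cd.local:9082/discovery.svc".replace("discovery.svc", "token.svc")

print(discovered)
print(guessed)
```

On this environment the two give the same answer. They would only differ if the token service were hosted somewhere other than alongside discovery.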

Anyway - who knew? I certainly didn't. Is this a better or more correct way of getting to the token service? I really don't know. Answers on a postcard?

Encrypting passwords for Tridion content delivery... revisited

A while ago I posted a "note to self" explaining that in order to use the Encrypt utility from the Tridion content delivery library, you needed to put an extra jar on your classpath. That was in SDL Web 8.5. This post is to explain that in SDL Tridion Sites 9, this advice still stands, but the names have changed.

But first, why would you want to do this? Basically it's a measure to prevent your passwords being shoulder-surfed. Imagine you have a configuration file with a password in it like this:

    <Account Id="cduser" Password="${cduserpassword:-CDUserP@ssw0rd}">
      <Metadata>
        <Param Name="FirstName" Value="CD"/>
        <Param Name="LastName" Value="User"/>
        <Param Name="Role" Value="cd"/>
        <Param Name="AllowedCookieForwarding" Value="true"/>
      </Metadata>
    </Account>

You might not want everyone who passes by to see that your password is "CDUserP@ssw0rd". Much better to have something like encrypted:o/cgCBwmULeOyUZghFaKJA==

    <Account Id="cduser" Password="${cduserpassword:-encrypted:o/cgCBwmULeOyUZghFaKJA==}">
      <Metadata>
        <Param Name="FirstName" Value="CD"/>
        <Param Name="LastName" Value="User"/>
        <Param Name="Role" Value="cd"/>
        <Param Name="AllowedCookieForwarding" Value="true"/>
      </Metadata>
    </Account>

Actually - with the possibility to do token replacement, I do wonder why you need a password in your config files at all, but that's not what this post's about.
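In case the ${name:-default} notation is unfamiliar, here's a minimal Python sketch of how I understand the replacement to behave: use the supplied value if there is one, otherwise fall back to the default after the ":-". The regex and the function are my own illustration, not Tridion's actual implementation.

```python
import re

# Matches ${name} and ${name:-default}
TOKEN = re.compile(r"\$\{(?P<name>[^}:]+)(?::-(?P<default>[^}]*))?\}")


def resolve_tokens(text, variables):
    """Replace each token with a supplied value, or with its default."""
    def substitute(match):
        name = match.group("name")
        if name in variables:
            return variables[name]
        default = match.group("default")
        if default is None:
            return match.group(0)  # no value and no default: leave it alone
        return default

    return TOKEN.sub(substitute, text)


line = '<Account Id="cduser" Password="${cduserpassword:-CDUserP@ssw0rd}">'

# Nothing supplied, so the default in the config file wins.
print(resolve_tokens(line, {}))

# A value supplied from outside, for example via os.environ.
print(resolve_tokens(line, {"cduserpassword": "encrypted:o/cgCBwmULeOyUZghFaKJA=="}))
```

If the value always arrives from outside like this, the default in the file could be something harmless, which is rather the point of the question.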

The thing is that the jar file that used to be called cd_core.jar is now called udp-core.jar and cd_common_util.jar has become udp-common-util.jar. Actually this is a total lie, because in recent versions of Tridion all the jars have versioned names, as you'll see in the example I'm about to show you. One of these jars is to be found in the lib folder of your services, and the other in the services folder, so you might find it's easier just to copy them both to the same directory, but this is what it looks like doing it directly from the standalone folder of discovery:

    PS D:\Tridion Sites 9.0.0.609 GA\Tridion\Content Delivery\roles\discovery\standalone> java -cp services\discovery-service\udp-core-11.0.0-1020.jar`;lib\udp-common-util-11.0.0-1022.jar com.tridion.crypto.Encrypt foo
    Configuration value = encrypted:6oR074TGuXmBdXM289+iDQ==

Note that here I've escaped the semicolon from the powershell with a backtick, but you can just as easily wrap the whole cp argument in quotes. Please note that I do not recommend the use of foo as a password. Equally, please don't use this encryption as your only means of safeguarding your secrets. It raises the bar a bit for the required memory skills of shoulder surfers, and that's about it. It's a good thing, but don't let it make you complacent. You also need to follow standard industry practices to control access to your servers and the data they hold. Of course, this is equally true of any external provisioning systems you have.

Discovery service in Tridion Sites nine has two storage configs

I just got bitten by a little "gotcha" in SDL Tridion Sites 9. When you unpack the installation zip, you'll find that in the Content Delivery/roles/Discovery folder, there's a separate folder for registration, with the registration tool and its own copy of cd_storage_conf.xml. The idea seems to be that running the service and registering capabilities are two separate activities. I kind of get that. When I first saw the ConfigRepository element at the bottom of Discovery's configuration, I felt like it had been shoehorned into a somewhat awkward place. Yet now, it seems even more awkward. So sure, both the service and the registration tool need access to the storage settings for the discovery database, while only the registration tool needs the configuration repository.

The main difference seems to be one of security. The version of the config that goes with the registration tool has the ClientId and ClientSecret attributes while the other doesn't. This, in fact, is the gotcha that caught me out; I'd copied the storage config from the service, and ended up being unable to perform an update. The error output did mention being unable to get an OAuth token, but I didn't immediately realise that the missing ClientId and ClientSecret were the reason. Kudos to Damien Jewett for his answer on Stack Exchange, which saved me some hair-pulling.
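A quick sanity check along these lines might have saved me the trouble. This is a minimal Python sketch that reports which of the two attributes are missing from the ConfigRepository element. The two sample configs are heavily trimmed and their attribute values are made up; only the element and attribute names come from the real files.

```python
import xml.etree.ElementTree as ET

REQUIRED_ATTRIBUTES = ("ClientId", "ClientSecret")


def missing_client_credentials(storage_conf_xml):
    """List the credential attributes missing from the ConfigRepository element."""
    root = ET.fromstring(storage_conf_xml)
    if root.tag == "ConfigRepository":
        repository = root
    else:
        repository = root.find(".//ConfigRepository")
    if repository is None:
        return ["ConfigRepository"]  # the whole element is absent
    return [name for name in REQUIRED_ATTRIBUTES if not repository.get(name)]


# Hypothetical, trimmed-down stand-ins for the two copies of cd_storage_conf.xml.
SERVICE_CONF = """<Configuration>
  <ConfigRepository ServiceUri="http://cd.local:9082/discovery.svc"/>
</Configuration>"""

REGISTRATION_CONF = """<Configuration>
  <ConfigRepository ServiceUri="http://cd.local:9082/discovery.svc"
                    ClientId="registration" ClientSecret="placeholder-secret"/>
</Configuration>"""

print(missing_client_credentials(SERVICE_CONF))
print(missing_client_credentials(REGISTRATION_CONF))
```

An empty list means the config has what the registration tool needs to ask for a token.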

I'm left wondering if this is the end game, or whether a future version will see some further tidying up or separation of concerns.

EDIT: On looking at this again, I realised that even in 8.5 we had two storage configs. The difference is that in 8.5 both had the ClientId and ClientSecret attributes.

Enumerating the Tridion config replacement tokens

OK - I get it. It's starting to look like I've got some kind of monomania regarding the replacement tokens in Tridion config files, but bear with me. In my last blog post, I'd hacked out a regex that could be used for replacing them with their default values, but had thought better of actually doing so. But still, the idea of being able to grab all the tokens has some appeal. I can't bear to waste that regex, so now I'm looking for a reasonable use for it.

It occurred to me that at some point in an installation, it might be handy to have a comprehensive list of all the things you can pass in as environment variables. Based on what I'd done yesterday this was quite straightforward:

    gci -r -include *.xml -exclude logback.xml | sls '\$\{.*?\}' `
        | select {$_.RelativePath((pwd))},LineNumber,{$_.Matches.value} `
        | Export-Csv SitesNineTokens.csv

By going to my unzipped Tridion zip and running this in the "Content Delivery/roles" folder, I had myself a spreadsheet with a list of all the tokens in Sites 9. Similarly, I created a spreadsheet for Web 8.5. (As you can see, I've excluded the logback files just to keep the volume down a bit, but in real life, you might also want to see those listed.)

The first thing you see when comparing Sites 9 with Web 8.5 is that there are a lot more of the things. More than twice as many. (At this point I should probably confess to some possible inaccuracy, as I haven't gone to the trouble of stripping out XML comments, so there could be some duplicates.)
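If the duplicates bother you, one way to get a cleaner figure is to count distinct token names rather than matches. Here's a minimal Python sketch using the same regex as the Select-String call. It still doesn't strip XML comments, and the demo files and token names (dbhost and friends) are made up for the purpose.

```python
import re
import tempfile
from collections import Counter
from pathlib import Path

TOKEN = re.compile(r"\$\{.*?\}")  # same pattern as the Select-String call


def count_tokens(root):
    """Count occurrences of each token name in the XML files under root."""
    counts = Counter()
    for path in Path(root).rglob("*.xml"):
        if path.name == "logback.xml":  # skipped, as in the original pipeline
            continue
        text = path.read_text(encoding="utf-8", errors="replace")
        for token in TOKEN.findall(text):
            # Strip the ${ and } and drop any ":-default" part.
            name = token[2:-1].split(":-", 1)[0]
            counts[name] += 1
    return counts


# Demo against a throwaway folder with two made-up config files.
with tempfile.TemporaryDirectory() as folder:
    Path(folder, "cd_storage_conf.xml").write_text(
        '<A Host="${dbhost:-localhost}" Password="${dbpassword:-secret}"/>\n'
        '<B Host="${dbhost:-localhost}"/>\n',
        encoding="utf-8",
    )
    Path(folder, "logback.xml").write_text(
        '<C Level="${loglevel:-INFO}"/>\n', encoding="utf-8"
    )
    demo = count_tokens(folder)

print(len(demo), "distinct tokens:", dict(demo))
```

Pointing count_tokens at the "Content Delivery/roles" folder should then give a count of distinct names to set against the raw number of rows in the spreadsheet.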

65 of these come from the addition of XO and another 42 from IQ, but in general, there are just more of them. The bottom line is that to get a Tridion system up and running these days, you are dealing with hundreds of settings. To be fair, that's simply what's necessary in order to implement the various capabilities of such an enterprise system.

One curious thing I noticed is that the ambient configs all have a token to allow you to disable oauth security, yet no tokens for the security settings for the various roles. I wonder if this reflects the way people actually use Tridion.

Of course, you aren't necessarily limited by the tokens in the example configs of the shipped product. Are customers defining their own as they need them?

That's probably enough about this subject, though, isn't it?