Dominic Cronin's weblog

The Tridion Expert Summit 2020

Today and yesterday have seen the Tridion Expert Summit 2020, an online conference that in some ways is the successor of the Tridion Developer Summit, and in other ways is definitely something different. It's hard to say how much of the difference comes from it being online. I definitely missed the personal interactions, but none of us would have wished to ignore the need to be responsible regarding covid. I hope next year we'll see this evolve into a "physically present" event.

As an online event, I have to say I was impressed with the platform. SDL/RWS had used spotme, and the combination of being able to follow the live stream while simultaneously interacting with the agenda, Q&A, and to some extent with other attendees, was pretty smooth.

Pretty much all recent Tridion events have had an uncomfortable divide between the "sites" audience and the "docs" people. To some extent, we're all still in our individual silos, and material relevant to one group is less relevant to the other. Still, to an increasing degree, we're seeing content that's of common interest, such as the combined architecture and how it all hangs together. For me, at least, it was cool to see that my old team-mate Ivo van de Lagemaat, these days a product owner on Docs, hasn't forgotten his old coding skills! He put together a working demo without any trouble in "What metadata in Tridion Docs can teach us about decoupled metadata integration and the future of taxonomy features".

Many of the topics were covered from different aspects by various speakers. The main points from a Sites perspective were:

Data publishing

The evolution of publishing models over the years, and how we've come eventually to what's described as "Data publishing": in other words, the component template is no more. (Not really, because they'll have more than one publishing model.) Tridion's always had a better-than-most claim to supporting "headless" but now there'll be a dedicated and optimised publishing model for it.

Tridion integration framework

A technology-agnostic basis for plug-ins and integrations.

Taxonomies and smart tagging

External taxonomies become more of a first-class citizen. Content can be tagged automatically, and then the tagging reviewed and tweaked by a human.

CoreService.REST

A modern take on what we used to call the TOM, this time based on Open API definitions.

Tridion Access management

A unified approach for integrating with external identity providers.

All told, there was plenty of interesting material. Thanks to all who spoke and to everyone involved in organising it.

Programmatically changing the Publishable flag on a Category

Not long ago I was writing a script which, among many other things, needed to set the Publishable property of a category. In the Tridion user interface, a category has a checkbox, large as life, that says "Publishable". How hard could it be, I thought. :-)

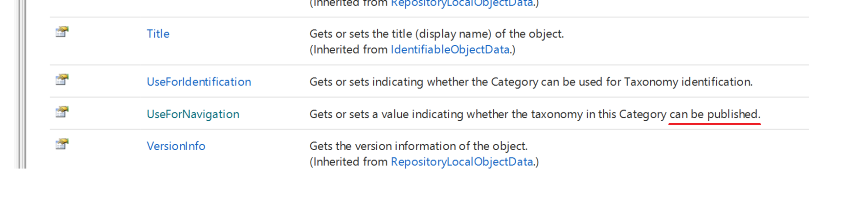

It turns out that when you work with the API (in this case, the core service), it's not called Publishable (or any variation on that), but UseForNavigation.

I kind of get it. Back when categories first could be published, the focus was on using them to build navigations. There's even a note in the documentation that says "Before SDL Tridion 2009 the behavior was get or set whether the taxonomy can be used for navigation."

Well I suppose every product as complex (and powerful) as Tridion will have its history and quirks. In fact, it only cost me a few minutes to figure this out, so it's not really a problem. I'm still going to file this post under "gotchas" though!

So what use is that Discover-EnvironmentCapabilities.ps1 script anyway?

Not long ago, I posted a powershell script on Tridion Practice that allows you to connect to a Tridion discovery service and read out the capabilities that are offered by the corresponding content delivery environment. Well that's a pretty good party trick as far as it goes, but hey maybe you already had a pretty good idea of how you've got things set up, so it's kind of a one-trick pony kind of thing eh? Well it turns out that the script is much more useful than just that.

For starters, I'd like to mention that I've recently updated the script so that it also lists the Model service if it's there, mostly because I was interested in querying it directly. When you are building a DXA application, you can always throw the logging into DEBUG to see the service calls, but it's also very handy to be able to do the service calls yourself, without your application in the way. After all, "divide and conquer" is the most ancient debugging technique of all. Isolate your problem to get a better look at it. Still - as many of the APIs are not public and documented, the debug logging is probably your first port of call to figure out how the query ought to look.

Especially if you're dealing with an automatically provisioned system such as SDL's cloud, the only reliable way to get the service urls is to ask the discovery service for them, so when I wanted to query the model service, I'd first need to do that anyway. Actually I first needed to know the localization, so I was starting with the content service. I didn't want to be re-typing URLs, so actually for my first quick-and-dirty, I just copied all the discovery code into my script and hacked in a $capabilities variable to capture all the output from the foreach loop. Something like this:

$capabilities = foreach($capabilityName in $capabilityNames) {

# invoking the discovery service and returning PSObjects

}

Then I could just follow up with

$contentUri = ($capabilities | ? {$_.Capability -eq 'ContentServiceCapability'}).'Service URI'

$queryUri = ($contentUri -replace '/content.svc','/client/v4/content.svc') + "/GetPublicationMappingsFunctionImport(Url='$url')"

Invoke-RestMethod -Method Get -Uri $queryUri -Headers @{Authorization=$Authorization}

(You've probably realised by now that if you want to follow along at home, it's probably best to start by visiting the cookbook recipe from the link above and downloading the script.) Anyway - this worked great, and gave me an XML document from which I could dig out the PublicationId. I'd simply used the same $Authorization variable that I'd created to use with the Discovery service, which of course is valid for the other services too. (BTW - if JSON is your poison, just fix up the -Headers parameter to be @{Authorization=$Authorization;Accept='application/json'})

Now I was ready to call the Model service, but the thought of yet another script that copied in the discovery code was starting to make me feel a bit itchy around the DRY principle. I mean... no need to be a fanatic, but enough is enough, eh? So then I realised I didn't have to copy it. All I needed was to "dot source" the existing script. I had the discovery script in the same folder, so my entire script ended up being 4 lines:

$capabilities = . .\Discover-EnvironmentCapabilities.ps1

$modelUri = ($capabilities | ? {$_.Capability -eq 'ModelServiceCapability'}).'Service URI'

$queryUri = $modelUri + "/PageModel/tcm/309/nl/blah/foo/index?includes=INCLUDE"

Invoke-RestMethod -Method Get -Uri $queryUri -Headers @{Authorization=$Authorization}

"Dot sourcing" in powershell (and also in some other shells) means using the dot operator to execute another script in your current context. The dot operator is the first of the two dots on the right hand side of the $capabilities assignment. This meant that not only did I manage to populate $capabilities with the return value of the script (a list of PSObjects describing capabilities) but any variables that were assigned in the discovery script were now also available for use locally. This meant that I could just use $Authorization and thereby avoid having to do all the tedious OAuth wrangling again.
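For anyone who hasn't played with it, here's a minimal sketch of the difference between dot sourcing and an ordinary call. Demo-Discovery.ps1 is a made-up stand-in for the real discovery script (it just sets a variable and emits one object), the token and URI are dummy values, and I'm assuming your execution policy lets you run a local script.

```powershell
# Start clean, in case an earlier run left the variable around
Remove-Variable Authorization -ErrorAction SilentlyContinue

# Hypothetical stand-in for the discovery script: sets a variable and emits one object
$scriptPath = Join-Path ([System.IO.Path]::GetTempPath()) 'Demo-Discovery.ps1'
Set-Content -Path $scriptPath -Value @'
$Authorization = 'Bearer demo-token'
[pscustomobject]@{ Capability = 'ModelServiceCapability'; 'Service URI' = 'http://example.invalid/model.svc' }
'@

# Call operator (&): the script runs in a child scope, so its variables don't leak out
$viaCall = & $scriptPath
$afterCall = $Authorization      # nothing here yet

# Dot operator (.): the script runs in the current scope, so we get
# the output AND any variables the script assigned
$capabilities = . $scriptPath
$afterDot = $Authorization       # now holds the value set inside the script

Remove-Item $scriptPath
```

Both calls return the same object; the only difference is whether $Authorization survives afterwards, which is exactly the side effect I'm (ab)using.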

So while the architectural purist in me is quietly cursing, spitting and mumbling about dependencies and side effects, my inner scripting hacker is bouncing around with glee. This is great! Re-use FTW!!! (Yes, yes, I should probably factor out the OAuth stuff too, some day, maybe...)

Anyway - this is just too handy not to share. Hope you all enjoy it.

Discovering content delivery environment capabilities... on Tridion practice

I recently wrote a script to query the capabilities of a Tridion content delivery environment. Rather than post it here, I've put it up on Tridion Practice. After all, it's about time "practice" got a bit of love. I think I'll try to get a bit more fresh material up there soon.

I hope you'll find the script useful. Dare I also hope that some of you might be inspired to contribute something to Tridion Practice? It's always been the intention that we'd try to get contributions from a range of practitioners, so please feel welcome. Cookbook recipes are probably the most prominent part of the site, but the patterns and practices sections are also interesting. Come on down!

Peering behind the veil of Tridion's discovery service

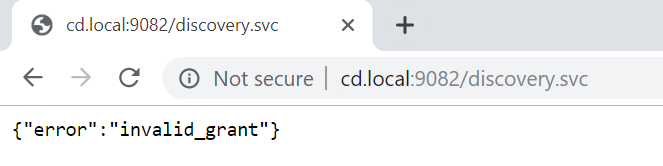

Many of us have configured applications that use Tridion's discovery service to find the various other content delivery services. All these services can be secured using oAuth, and the discovery service itself is no exception. It's almost a rite of passage to point a browser at the discovery endpoint only to see something like this:

In order to be allowed to use the discovery service, you need to provide an Authorization header when you call it, and otherwise, the "invalid_grant" error is what you get. Some of you may remember a blog post of mine from a few years ago where I showed how to call the content service with such a header. The essence of this was that first you call the token service with some credentials, and it hands you back a token that you can use in your authorization header.

That was when Web 8 was new, and I remember wondering, given that you only need to provide a discovery endpoint, how you could arrive at the location of the token service in order to get a token in the first place. I've seen enough code in the intervening period that takes a very pragmatic line and just assumes that the token service is to be found by taking the URL of the discovery service and replacing "discovery.svc" with "token.svc". That works, but it's somehow unsatisfactory. Wasn't the whole point of these service architectures that things should be discoverable and self-describing? Back then I'm fairly sure, after a cursory glance at https://www.odata.org/, I tried looking for the $metadata properties, but with the discovery service that just gets you an invalid grant.

I recently started looking at this stuff again, and somehow stumbled on how to make it work. (For the life of me, I can't remember what I was doing - maybe it's even documented somewhere.) It turns out that if you call the discovery service like this:

http://cd.local:9082/discovery.svc/TokenServiceCapabilities

it doesn't give you an invalid grant. Instead it gives you an OData response like this:

<?xml version="1.0" encoding="UTF-8"?>

<feed

xmlns:metadata="http://docs.oasis-open.org/odata/ns/metadata"

xmlns:data="http://docs.oasis-open.org/odata/ns/data"

xmlns="http://www.w3.org/2005/Atom" metadata:context="http://cd.local:9082/discovery.svc/$metadata#TokenServiceCapabilities" xml:base="http://cd.local:9082/discovery.svc">

<id>http://cd.local:9082/discovery.svc/TokenServiceCapabilities</id>

<title></title>

<updated>2019-09-04T19:28:36.916Z</updated>

<link rel="self" title="TokenServiceCapabilities" href="TokenServiceCapabilities"></link>

<entry>

<id>http://cd.local:9082/discovery.svc/TokenServiceCapabilities('DefaultTokenService')</id>

<title></title>

<summary></summary>

<updated>2019-09-04T19:28:36.916Z</updated>

<author>

<name>SDL OData v4 framework</name>

</author>

<link rel="edit" title="TokenServiceCapability" href="TokenServiceCapabilities('DefaultTokenService')"></link>

<link rel="http://docs.oasis-open.org/odata/ns/related/Environment" type="application/atom+xml;type=entry" title="Environment" href="TokenServiceCapabilities('DefaultTokenService')/Environment"></link>

<link rel="http://docs.oasis-open.org/odata/ns/relatedlinks/Environment" type="application/xml" title="Environment" href="TokenServiceCapabilities('DefaultTokenService')/Environment/$ref"></link>

<category scheme="http://docs.oasis-open.org/odata/ns/scheme" term="#Tridion.WebDelivery.Platform.TokenServiceCapability"></category>

<content type="application/xml">

<metadata:properties>

<data:id>DefaultTokenService</data:id>

<data:LastUpdateTime metadata:type="Int64">1566417662739</data:LastUpdateTime>

<data:URI>http://cd.local:9082/token.svc</data:URI>

</metadata:properties>

</content>

</entry>

</feed>

Looking at this, you can just reach into the "content" payload and read out the URL of the token service. So that explains the mystery, even if the possibility of doing a replace on the discovery service URL also counts as sufficient to render it unmysterious.
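As a rough sketch of what "reaching into the payload" might look like in PowerShell: the XML below is a trimmed copy of the response above, pasted in as a string so the example runs without a discovery service. In real life you'd fetch it from the TokenServiceCapabilities URL instead. The "d" prefix is just a name I picked for the namespace manager.

```powershell
# Trimmed copy of the response shown above; only the parts needed here are kept
$response = @'
<?xml version="1.0" encoding="UTF-8"?>
<feed xmlns:metadata="http://docs.oasis-open.org/odata/ns/metadata"
      xmlns:data="http://docs.oasis-open.org/odata/ns/data"
      xmlns="http://www.w3.org/2005/Atom">
  <entry>
    <content type="application/xml">
      <metadata:properties>
        <data:id>DefaultTokenService</data:id>
        <data:URI>http://cd.local:9082/token.svc</data:URI>
      </metadata:properties>
    </content>
  </entry>
</feed>
'@

$feed = [xml]$response

# The URI element lives in the OData "data" namespace, so XPath needs a namespace manager
$ns = New-Object System.Xml.XmlNamespaceManager($feed.NameTable)
$ns.AddNamespace('d', 'http://docs.oasis-open.org/odata/ns/data')

$tokenUri = $feed.SelectSingleNode('//d:URI', $ns).InnerText
$tokenUri   # http://cd.local:9082/token.svc
```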

Anyway - who knew? I certainly didn't. Is this a better or more correct way of getting to the token service? I really don't know. Answers on a postcard?

Encrypting passwords for Tridion content delivery... revisited

A while ago I posted a "note to self" explaining that in order to use the Encrypt utility from the Tridion content delivery library, you needed to put an extra jar on your classpath. That was in SDL Web 8.5. This post is to explain that in SDL Tridion Sites 9, this advice still stands, but the names have changed.

But first, why would you want to do this? Basically it's a measure to prevent your passwords being shoulder-surfed. Imagine you have a configuration file with a password in it like this:

<Account Id="cduser" Password="${cduserpassword:-CDUserP@ssw0rd}">

<Metadata>

<Param Name="FirstName" Value="CD"/>

<Param Name="LastName" Value="User"/>

<Param Name="Role" Value="cd"/>

<Param Name="AllowedCookieForwarding" Value="true"/>

</Metadata>

</Account>

You might not want everyone who passes by to see that your password is "CDUserP@ssw0rd". Much better to have something like encrypted:o/cgCBwmULeOyUZghFaKJA==

<Account Id="cduser" Password="${cduserpassword:-encrypted:o/cgCBwmULeOyUZghFaKJA==}">

<Metadata>

<Param Name="FirstName" Value="CD"/>

<Param Name="LastName" Value="User"/>

<Param Name="Role" Value="cd"/>

<Param Name="AllowedCookieForwarding" Value="true"/>

</Metadata>

</Account>

Actually - with the possibility to do token replacement, I do wonder why you need a password in your config files at all, but that's not what this post's about.

The thing is that the jar file that used to be called cd_core.jar is now called udp-core.jar, and cd_common_util.jar has become udp-common-util.jar. Actually this is a total lie, because in recent versions of Tridion all the jars have versioned names, as you'll see in the example I'm about to show you. One of these jars is to be found in the lib folder of your services, and the other in the services folder, so you might find it's easier just to copy them both to the same directory, but this is what it looks like doing it directly from the standalone folder of discovery:

PS D:\Tridion Sites 9.0.0.609 GA\Tridion\Content Delivery\roles\discovery\standalone> java -cp services\discovery-service\udp-core-11.0.0-1020.jar`;lib\udp-common-util-11.0.0-1022.jar com.tridion.crypto.Encrypt foo

Configuration value = encrypted:6oR074TGuXmBdXM289+iDQ==

Note that here I've escaped the semicolon from the powershell with a backtick, but you can just as easily wrap the whole cp argument in quotes. Please note that I do not recommend the use of foo as a password. Equally, please don't use this encryption as your only means of safeguarding your secrets. It raises the bar a bit for the required memory skills of shoulder surfers, and that's about it. It's a good thing, but don't let it make you complacent. You also need to follow standard industry practices to control access to your servers and the data they hold. Of course, this is equally true of any external provisioning systems you have.
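Another way to sidestep the semicolon problem is to build the classpath in a variable first. This is only a small sketch, using the jar names from the example above; the java call itself is left commented out, since it needs the Tridion jars to be present in those locations.

```powershell
# Jar locations relative to the discovery standalone folder, as in the example above
$jars = @(
    'services\discovery-service\udp-core-11.0.0-1020.jar',
    'lib\udp-common-util-11.0.0-1022.jar'
)

# PathSeparator is ';' on Windows, so no escaping or quoting is needed
$classpath = $jars -join [System.IO.Path]::PathSeparator

# The variable is passed to java as a single argument:
# java -cp $classpath com.tridion.crypto.Encrypt foo
```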

Discovery service in Tridion Sites nine has two storage configs

I just got bitten by a little "gotcha" in SDL Tridion Sites 9. When you unpack the installation zip, you'll find that in the Content Delivery/roles/Discovery folder, there's a separate folder for registration, with the registration tool and its own copy of cd_storage_conf.xml. The idea seems to be that running the service and registering capabilities are two separate activities. I kind of get that. When I first saw the ConfigRepository element at the bottom of Discovery's configuration, I felt like it had been shoehorned into a somewhat awkward place. Yet now, it seems even more awkward. So sure, both the service and the registration tool need access to the storage settings for the discovery database, while only the registration tool needs the configuration repository.

The main difference seems to be one of security. The version of the config that goes with the registration tool has the ClientId and ClientSecret attributes while the other doesn't. This, in fact, is the gotcha that caught me out; I'd copied the storage config from the service, and ended up being unable to perform an update. The error output did mention being unable to get an OAuth token, but I didn't immediately realise that the missing ClientId and ClientSecret were the reason. Kudos to Damien Jewett for his answer on Stack Exchange, which saved me some hair-pulling.

I'm left wondering if this is the end game, or whether a future version will see some further tidying up or separation of concerns.

EDIT: On looking at this again, I realised that even in 8.5 we had two storage configs. The difference is that in 8.5 both had the ClientId and ClientSecret attributes.

Enumerating the Tridion config replacement tokens

OK - I get it. It's starting to look like I've got some kind of monomania regarding the replacement tokens in Tridion config files, but bear with me. In my last blog post, I'd hacked out a regex that could be used for replacing them with their default values, but had thought better of actually doing so. But still, the idea of being able to grab all the tokens has some appeal. I can't bear to waste that regex, so now I'm looking for a reasonable use for it.

It occurred to me that at some point in an installation, it might be handy to have a comprehensive list of all the things you can pass in as environment variables. Based on what I'd done yesterday this was quite straightforward:

gci -r -include *.xml -exclude logback.xml| sls '\$\{.*?\}' `

| select {$_.RelativePath((pwd))},LineNumber,{$_.Matches.value} `

| Export-Csv SitesNineTokens.csv

By going to my unzipped Tridion zip and running this in the "Content Delivery/roles" folder, I had myself a spreadsheet with a list of all the tokens in Sites 9. Similarly, I created a spreadsheet for Web 8.5. (As you can see, I've excluded the logback files just to keep the volume down a bit, but in real life, you might also want to see those listed.)
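If what you're after is the distinct token names rather than every occurrence, something along these lines might do. It's a sketch only: the three sample lines are borrowed from the config snippets in my previous post so that it runs anywhere, and in real use you'd feed it from the gci/sls pipeline instead.

```powershell
# Sample config lines standing in for the real files
$sample = @(
    '<Role Name="TokenServiceCapability" Url="${tokenurl:-http://localhost:8082/token.svc}">',
    '<Property Value="${queuePath}" Name="Destination"/>',
    '<Property Value="${queuePath}/FinalTX" Name="Destination"/>'
)

$names = $sample |
    Select-String -Pattern '\$\{(.*?)\}' -AllMatches |
    ForEach-Object { $_.Matches } |
    # Group 1 is everything between the braces; keep only the part before any ':-'
    ForEach-Object { ($_.Groups[1].Value -split ':-', 2)[0] } |
    Sort-Object -Unique

$names   # queuePath, tokenurl
```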

The first thing you see when comparing Sites 9 with Web 8.5 is that there are a lot more of the things. More than twice as many. (At this point I should probably confess to some possible inaccuracy, as I haven't gone to the trouble of stripping out XML comments, so there could be some duplicates.)

65 of these come from the addition of XO and another 42 from IQ, but in general, there are just more of them. The bottom line is that to get a Tridion system up and running these days, you are dealing with hundreds of settings. To be fair, that's simply what's necessary in order to implement the various capabilities of such an enterprise system.

One curious thing I noticed is that the ambient configs all have a token to allow you to disable oauth security, yet no tokens for the security settings for the various roles. I wonder if this reflects the way people actually use Tridion.

Of course, you aren't necessarily limited by the tokens in the example configs of the shipped product. Are customers defining their own as they need them?

That's probably enough about this subject, though, isn't it?

Removing the replacement tokens from Tridion configuration files, and choosing not to

In SDL Web 8.5 we saw the introduction of replacement tokens in the content delivery configuration files. Whereas previously we'd simply had XML files with attributes and elements that we filled in with the relevant values, the replacement tokens allowed for the values to be provided externally when the configuration file is used. The commonest way to do this is probably by using environment variables, but you can also pass them as arguments to the java runtime. (A while ago, I wasted a bunch of time writing a script to pass environment variables in via java arguments. You don't need to do this.)

So anyway - taking the deployer config as my example, we started to see this kind of thing:

<Queues>

<Queue Id="ContentQueue" Adapter="FileSystem" Verbs="Content" Default="true">

<Property Value="${queuePath}" Name="Destination"/>

</Queue>

<Queue Id="CommitQueue" Adapter="FileSystem" Verbs="Commit,Rollback">

<Property Value="${queuePath}/FinalTX" Name="Destination"/>

</Queue>

<Queue Id="PrepareQueue" Adapter="FileSystem" Verbs="Prepare">

<Property Value="${queuePath}/Prepare" Name="Destination"/>

</Queue>

Or from the storage conf of the disco service:

<Role Name="TokenServiceCapability" Url="${tokenurl:-http://localhost:8082/token.svc}">

So if you have an environment variable called queuePath, it will be used instead of ${queuePath}. In the second example, you can see that there's also a syntax for providing a default value, so if there's a tokenurl environment variable, that will be used, and if not, you'll get http://localhost:8082/token.svc.
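To make the rules concrete, here's a little sketch that mimics the behaviour described above. To be clear, Resolve-ConfigToken is my own illustration and not how Tridion does it internally; the hashtable is a stand-in for the environment, and the values in the usage lines are made up.

```powershell
function Resolve-ConfigToken {
    param(
        [string]$Text,
        [hashtable]$Values   # stand-in for the environment variables
    )
    # ${name} or ${name:-default}
    $pattern = '\$\{(?<name>[^}:]+)(?::-(?<default>[^}]*))?\}'
    [regex]::Replace($Text, $pattern, {
        param($m)
        $name = $m.Groups['name'].Value
        if ($Values.ContainsKey($name)) { return $Values[$name] }                         # a supplied value wins
        if ($m.Groups['default'].Success) { return $m.Groups['default'].Value }           # otherwise the default
        return $m.Value                                                                   # neither: leave the token alone
    })
}

$line = '<Role Name="TokenServiceCapability" Url="${tokenurl:-http://localhost:8082/token.svc}">'

$withDefault = Resolve-ConfigToken -Text $line -Values @{}
$withValue   = Resolve-ConfigToken -Text $line -Values @{ tokenurl = 'https://cd.example/token.svc' }
$untouched   = Resolve-ConfigToken -Text '<Property Value="${queuePath}" Name="Destination"/>' -Values @{}
```

With an empty hashtable the first call falls back to http://localhost:8082/token.svc, the second picks up the supplied value, and the third leaves ${queuePath} as it was because there's neither a value nor a default.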

This kind of replacement token is very common in the *nix world, where it's taken to even further extremes. Most of this is based on the venerable Shell Parameter Expansion syntax.

All this is great for automated deployments and I'm sure the team running SDL's cloud services makes full use of this technique. For now, I'm still using my own scripts to replace values in the config files, so a recent addition turned out to be a bit inconvenient. In Tridion Sites 9, the queue Ids in the deployer config have also been tokenised. So now we have this kind of thing:

<Queue Default="true" Verbs="Content" Adapter="FileSystem" Id="${contentqueuename:-ContentQueue}">

<Property Name="Destination" Value="${queuePath}"/>

</Queue>

Seeing as I had an XPath that locates the Queue elements by ID, this wasn't too helpful. (Yes, yes, in the general case it's great, but I'm thinking purely selfishly!) Shooting from the hip I quickly updated my script with an awesome regex :-) , so instead of

$config = [xml](gc $deployerConfig)

I had

$config = [xml]((gc $deployerConfig) -replace '\$\{(?:.*?):-(.*?)\}','$1')

About ten seconds after finishing this, I realised that what I should be doing instead is fixing my XPath to glom onto the Verbs attribute instead, but you can't just throw away a good regex. So - I present to you, this beautiful regex for converting shell parameter expansions (or whatever they are called) into their default values while using the PowerShell. In other words, ${contentqueuename:-ContentQueue} becomes ContentQueue.
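For the record, the XPath fix might look something like this. It's a sketch under assumptions: the Deployer/Queues wrapper is just enough XML to make the example stand alone, and the destination path is invented.

```powershell
# Minimal stand-in for the deployer config; the wrapper elements are assumed
$config = [xml]@'
<Deployer>
  <Queues>
    <Queue Default="true" Verbs="Content" Adapter="FileSystem" Id="${contentqueuename:-ContentQueue}">
      <Property Name="Destination" Value="${queuePath}"/>
    </Queue>
  </Queues>
</Deployer>
'@

# Locate the queue by what it does (its Verbs) rather than by its now-tokenised Id
$queue = $config.SelectSingleNode("//Queue[@Verbs='Content']")

# Set the destination; the path is a made-up example
$destination = $queue.SelectSingleNode("Property[@Name='Destination']")
$destination.SetAttribute('Value', 'D:\Tridion\queue')
```

The Id attribute keeps its token, so anyone who does want to supply contentqueuename from the environment still can.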

How does it work? Here it comes, one piece at a time:

' single quote. Otherwise Powershell will interpret characters like $ and {, which you don't want

\ a backslash to escape the dollar from the regex

$ the opening dollar of the expansion expression

\{ match the {, also escaped from the regex

(?:.*?) match zero or more of anything, non-greedily, and without capturing

:- match the :-

(.*?) match zero or more of anything non-greedily. No ?: this time so it's captured for use later as $1

\} match the }

' single quote

The second parameter of -replace is '$1', which translates to "the first capture". Note the single quotes, for the same reason as before.
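Here's the regex at work on a few sample lines, plus one caveat worth knowing about. The first three lines come from the configs shown earlier; the fourth, with two tokens on one line, is a hypothetical I made up to show where the non-greedy match can overreach. The stricter pattern at the end is my suggestion for that case, not something I've run against a full set of config files.

```powershell
$pattern = '\$\{(?:.*?):-(.*?)\}'

$lines = @(
    '<Queue Default="true" Verbs="Content" Adapter="FileSystem" Id="${contentqueuename:-ContentQueue}">',
    '<Property Name="Destination" Value="${queuePath}"/>',
    '<Role Name="TokenServiceCapability" Url="${tokenurl:-http://localhost:8082/token.svc}">'
)

# -replace works on each element of the array in turn
$result = $lines -replace $pattern, '$1'
# $result[0] ends with Id="ContentQueue">
# $result[1] is unchanged, because ${queuePath} has no default
# $result[2] ends with Url="http://localhost:8082/token.svc">

# Caveat: a token without a default followed by one with a default on the same line
$tricky = '<Property Value="${queuePath}/${name:-Prepare}"/>'
$loose  = $tricky -replace $pattern, '$1'
# $loose is <Property Value="Prepare"/> ; the match started at the first ${ and swallowed ${queuePath}/

# Stricter variant: the name may not contain } or : so a match cannot span two tokens
$strictPattern = '\$\{[^}:]+:-([^}]*)\}'
$strict = $tricky -replace $strictPattern, '$1'
# $strict is <Property Value="${queuePath}/Prepare"/>
```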

So there you have it. Now if ever you need to rip through a bunch of config files and blindly accept the defaults for everything, you know how. But meh... you could also just not provide any values in the environment. I refuse to accept that this hack is useless. A reason will emerge. The universe abhors a scripting hack with no purpose.

I'm an Idea Machine!

Apparently, I'm an "Idea Machine". Who'da thought it?

So.... the SDL community site just gave me the Idea Machine badge. Apparently you get this for submitting five ideas. Alright, alright - I'll get over myself, really. Just let me enjoy the moment!! :-)

But seriously... for all the nonsense of online achievements, badges and whatever... submitting ideas is a really good... um.. idea. Many of us are working with Tridion software day in and day out, and sometimes you come across something that could be better, or even just something a bit irritating. Chances are you aren't the only one who would appreciate an improvement. So do us all a favour, hold that thought and pop along to https://community.sdl.com/ideas/sdl-tridion-dx-ideas/sdl-tridion-sites-ideas/, or the relevant site for you, and post your idea.

Once your idea is in, people can vote it up or down and comment on it. You can also be sure that it will be seen by people from R&D and product management. The only down side of the whole thing is that they have an "ideation wiki". Ideation? Ideation? Really. Maybe they'll have to find some cweatives for that.

Oh what the heck?! Come on folks! Get ideating!!!