Dominic Cronin's weblog

Programmatically changing the Publishable flag on a Category

Not long ago I was writing a script which, among many other things, needed to set the Publishable property of a category. In the Tridion user interface, a category has a checkbox, large as life, that says "Publishable". How hard could it be, I thought. :-)

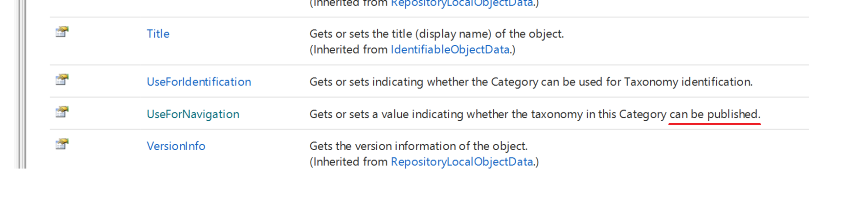

It turns out that when you work with the API (in this case, the core service), it's not called Publishable (or any variation on that), but UseForNavigation.

I kind of get it. Back when categories first could be published, the focus was on using them to build navigations. There's even a note in the documentation that says "Before SDL Tridion 2009 the behavior was get or set whether the taxonomy can be used for navigation."

Well I suppose every product as complex (and powerful) as Tridion will have its history and quirks. In fact, it only cost me a few minutes to figure this out, so it's not really a problem. I'm still going to file this post under "gotchas" though!

Constructing an ImportExport ItemsSelector in Powershell

I've used the Tridion ImportExport API a couple of times from the PowerShell, and until now, I didn't really have any reason to use anything except a Subtree selector for my exports. If you put your items in a bundle, this is what you use, and for the rest, mostly what you want is everything in a folder or structure group. Invoking the constructor of SubtreeSelection usually looks something like this:

$selection = New-Object Tridion.ContentManager.ImportExport.SubtreeSelection $someOrgItemUrl,$true

This is fine because the arguments are both single variables. The trouble comes when you want to construct an Items Selector. Your first attempt probably looks like:

$items = @($itemUrl)

$selection = New-Object Tridion.ContentManager.ImportExport.ItemsSelection $items

You're probably thinking: I only want one item, but the constructor expects an [IEnumerable[string]] so I'll just use the array subexpression operator @() to force my single item to be an array and let Powershell take care of the rest of the magic of casting to IEnumerable. Powershell for the easy life, eh?

But it doesn't work. You get back some message like

New-Object : Cannot convert argument "0", with value: "foobar", for "ItemsSelection" to type "System.Collections.Generic.IEnumerable`1[System.String]": "Cannot convert the "foobar" value of type "System.String" to type "System.Collections.Generic.IEnumerable`1[System.String]"."

So what's going on here? New-Object takes everything after the type name as its ArgumentList parameter, which is itself an array of constructor arguments. So rather than passing your collection as one argument, PowerShell unrolls it, and the constructor is handed a single string, which it promptly rejects. I went through various hoops trying to force things to be an array, or a single item containing an array. You can create your array either with the subexpression operator @(), or just with a unary comma operator ($foo = ,$itemUrl) but I ended up calling split with an empty delimiter. I'm not saying it's pretty, but it worked for me. I then also cast it explicitly to the expected collection type. In PowerShell v5, the constructor is available using the static method syntax on the type, and calling the constructor this way is less prone to type resolution magic messing things up. Don't ask me exactly how. I have no idea. Anyway - this is what worked eventually:

[System.Collections.Generic.IEnumerable`1[System.String]]$items = $itemUrl.split('')

$selection = [Tridion.ContentManager.ImportExport.ItemsSelection]::new($items)

I hope this saves somebody some hair pulling and Googling.

Adding an authorization header for the Tridion content service using Fiddler

I've started to experiment with the GraphQL API offered by Tridion Sites 9's Content service. The obvious way to do this is to use the GraphiQL endpoint. On my system I can do this by pointing my browser at http://cd.local:8081/cd/api/graphiql. The only fly in the ointment is that the service expects an OAuth header, so you have to take care of that yourself. The guidance I've seen so far is to use a browser plugin like Requestly to do this, so I duly installed it, and was able to get successful query responses instead of the dreaded 'invalid_grant'.

All well and good, but honestly, it's a right faff. Firstly, the plugin itself is clunky, so to open the relevant config window, you're at least several clicks away from sorting out your authorization header. That wouldn't be too bad, but the darned tokens keep timing out, so you keep having to repeat the procedure. Maybe there's a better plugin, but I figured life's too short. I use Fiddler quite often for faking various scenarios and making test setups work a bit more like they are supposed to in the real world, so why not knock off a quick Fiddler script and be done with it.... I thought!

Actually - it turned out to be a bit fiddly, but I now have it working, so time to share. Usual disclaimers.... it's not very polished. It works for my scenario, and if yours is different you'll have to use the source, Luke.

So - go and open up Fiddler and head to the FiddlerScript button or go to the Rules -> Customize Rules menu option. Once you have a script editing screen in view, you should be able to find the function OnBeforeRequest(oSession: Session). Inside this function, paste in the following code and fix it up to meet your own bizarre preferences:

if (oSession.uriContains("http://cd.local:8081/cd/api")) {

var client_id = "cduser";

var client_secret = 'CDUserP@ssw0rd';

var strBody = "client_id=$client_id&client_secret=$client_secret&grant_type=client_credentials&resources=%2F".replace("$client_id",encodeURIComponent(client_id)).replace("$client_secret",encodeURIComponent(client_secret));

var arrBody = new byte[strBody.length];

for (var i = 0;i < strBody.length;i++){

arrBody[i] = strBody.charCodeAt(i);

}

var oHeaders = new HTTPRequestHeaders();

oHeaders.RequestPath ="http://cd.local:8082/token.svc";

oHeaders["Content-Type"] = "application/x-www-form-urlencoded";

oHeaders["Host"] = "cd.local:8082";

oHeaders.HTTPMethod = "POST";

oHeaders["Content-Length"] = arrBody.length;

var oAuthSession = FiddlerApplication.oProxy.SendRequestAndWait(oHeaders, arrBody, null, null);

if (200 == oAuthSession.responseCode) {

var oJSON = Fiddler.WebFormats.JSON.JsonDecode(oAuthSession.GetResponseBodyAsString());

oSession.RequestHeaders.Add("Authorization", oJSON.JSONObject["token_type"] + ' ' + oJSON.JSONObject["access_token"]);

}

else {

MessageBox.Show("Bad Auth: " + oAuthSession.responseCode);

}

}

If you now go back to your GraphiQL page, you should find that your requests are authorised. If it doesn't work, make sure that you've removed your rule from Requestly or whatever you've been using; given two "Authorization" headers, the service will very likely not behave nicely.

There are plenty of obvious improvements that can still be made. For example, it's probably fairly easy to switch this on and off with a setting in Fiddler, or to check for an existing Authorization header.
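If the script misbehaves, it can help to try the token request on its own, outside Fiddler. What follows is a minimal shell sketch rather than part of the FiddlerScript: it builds the same form body and posts it with curl. The helper names urlencode, token_body and request_token are made up for illustration, the encoder only copes with ASCII and is an approximation of encodeURIComponent, and the endpoint and credentials are just the ones from the example above.

```shell
# Percent-encode a string for a form body (ASCII input only; an
# approximation of encodeURIComponent, not an exact equivalent).
urlencode() {
  rest=$1
  out=
  while [ -n "$rest" ]; do
    tail=${rest#?}          # everything after the first character
    c=${rest%"$tail"}       # the first character itself
    rest=$tail
    case $c in
      [a-zA-Z0-9.~_-]) out=$out$c ;;
      *) out=$out$(printf '%%%02X' "'$c") ;;
    esac
  done
  printf '%s' "$out"
}

# Build the same body that the FiddlerScript sends to the token service.
token_body() {
  printf 'client_id=%s&client_secret=%s&grant_type=client_credentials&resources=%%2F' \
    "$(urlencode "$1")" "$(urlencode "$2")"
}

# POST the body to the token endpoint and print the raw JSON response.
request_token() {
  curl -s -X POST 'http://cd.local:8082/token.svc' \
    -H 'Content-Type: application/x-www-form-urlencoded' \
    --data "$(token_body "$1" "$2")"
}

# Usage (needs a reachable token service):
#   request_token cduser 'CDUserP@ssw0rd'
```

If the response contains token_type and access_token, the credentials and endpoint are fine, and any remaining trouble is on the Fiddler side.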

Anyway - this is going to make my life much nicer as I play with the API.

Encrypting passwords for Tridion content delivery... revisited

A while ago I posted a "note to self" explaining that in order to use the Encrypt utility from the Tridion content delivery library, you needed to put an extra jar on your classpath. That was in SDL Web 8.5. This post is to explain that in SDL Tridion Sites 9, this advice still stands, but the names have changed.

But first, why would you want to do this? Basically it's a measure to prevent your passwords being shoulder-surfed. Imagine you have a configuration file with a password in it like this:

<Account Id="cduser" Password="${cduserpassword:-CDUserP@ssw0rd}">

<Metadata>

<Param Name="FirstName" Value="CD"/>

<Param Name="LastName" Value="User"/>

<Param Name="Role" Value="cd"/>

<Param Name="AllowedCookieForwarding" Value="true"/>

</Metadata>

</Account>

You might not want everyone who passes by to see that your password is "CDUserP@ssw0rd". Much better to have something like encrypted:o/cgCBwmULeOyUZghFaKJA==

<Account Id="cduser" Password="${cduserpassword:-encrypted:o/cgCBwmULeOyUZghFaKJA==}">

<Metadata>

<Param Name="FirstName" Value="CD"/>

<Param Name="LastName" Value="User"/>

<Param Name="Role" Value="cd"/>

<Param Name="AllowedCookieForwarding" Value="true"/>

</Metadata>

</Account>

Actually - with the possibility to do token replacement, I do wonder why you need a password in your config files at all, but that's not what this post's about.

The thing is that the jar file that used to be called cd_core.jar is now called udp-core.jar and cd_common_util.jar has become udp-common-util.jar. Actually this is a total lie, because in recent versions of Tridion all the jars have versioned names, as you'll see in the example I'm about to show you. One of these jars is to be found in the lib folder of your services, and the other in the services folder, so you might find it's easier just to copy them both to the same directory, but this is what it looks like doing it directly from the standalone folder of discovery:

PS D:\Tridion Sites 9.0.0.609 GA\Tridion\Content Delivery\roles\discovery\standalone> java -cp services\discovery-service\udp-core-11.0.0-1020.jar`;lib\udp-common-util-11.0.0-1022.jar com.tridion.crypto.Encrypt foo

Configuration value = encrypted:6oR074TGuXmBdXM289+iDQ==

Note that here I've escaped the semicolon from the powershell with a backtick, but you can just as easily wrap the whole cp argument in quotes. Please note that I do not recommend the use of foo as a password. Equally, please don't use this encryption as your only means of safeguarding your secrets. It raises the bar a bit for the required memory skills of shoulder surfers, and that's about it. It's a good thing, but don't let it make you complacent. You also need to follow standard industry practices to control access to your servers and the data they hold. Of course, this is equally true of any external provisioning systems you have.
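Because the version numbers in the jar names change from release to release, you may prefer to find the jars by pattern instead of typing the names out. Here is a rough sketch of that idea for a bash-like shell on a Linux host, which is an assumption on my part as the example above was run from PowerShell on Windows. The function names find_jar and encrypt_classpath are invented for illustration, and the folder layout is simply the one shown above.

```shell
# Print the first file in directory $1 whose name looks like <$2>-<version>.jar.
find_jar() {
  for f in "$1"/"$2"-[0-9]*.jar; do
    if [ -e "$f" ]; then
      printf '%s' "$f"
      return 0
    fi
  done
  echo "no $2 jar found in $1" >&2
  return 1
}

# Build the classpath for the Encrypt utility, relative to the standalone
# folder given as $1. Java uses ':' as separator on Linux, ';' on Windows.
encrypt_classpath() {
  core=$(find_jar "$1/services/discovery-service" udp-core) || return 1
  util=$(find_jar "$1/lib" udp-common-util) || return 1
  printf '%s:%s' "$core" "$util"
}

# Usage, from the standalone folder of discovery:
#   java -cp "$(encrypt_classpath .)" com.tridion.crypto.Encrypt foo
```

Wrapping the classpath in double quotes keeps the shell from interpreting the separator or any spaces in the path.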

Getting SSH working on WSL

Or in longhand: getting the Secure Shell working on the Windows Subsystem for Linux.

I've run a Unix command line on my Windows systems for years using Cygwin. I'm not one of those Unix nerds that can't function in a native Windows world, but there was always one particular use case that Windows was spectacularly poor in. If you wanted to connect to *nix systems, the obvious way to do this was via the Secure Shell (SSH) and Windows just didn't have an SSH client. Full stop. Nothing, nada, etc. Windows had its own mechanisms for connecting securely to.... another Windows box. If you wanted to connect to something that wasn't Windows.... well who'd want to do that?

Those of us that did installed Cygwin. This was an implementation of a *nix kernel in a DLL, and a bunch of the standard utilities built to use it. You could (and still can) do pretty much anything: if you couldn't search a file system without grep, Cygwin made it OK for you. I didn't use many of the utilities apart from occasionally Ghostscript to manipulate PDFs, but I used SSH every day.

Eventually Microsoft wised up and realised that open source wasn't the enemy. Linux was cool, and even Microsofties could learn to love it. So they implemented the Linux kernel as a Windows driver and called it the Windows Subsystem for Linux. They first teamed up with Ubuntu to get the user space stuff running, and then later with Suse and Debian, so you've got a fair choice if you're fussy about your distros.

And still - the killer use case is opening up a secure shell session to a Linux box. This is why we want WSL. So it's a bit rubbish when you discover that the standard way of logging in to such a remote session doesn't work. I'm talking about public key authentication. The basic idea is that you have two files holding the two parts of a public/private key pair. One lives on the server, and the other on the client. With this setup, you just make the connection and you're logged in. In order to keep this secure, the standard SSH client software insists that the key file is secured so that it's private to you. If anyone else can read it, the software will just refuse to play ball.

This is all well and good as long as you can set the security up to do that, but under WSL, in its out of the box configuration, you can't. This has been a source of great irritation to me, and I have now figured out the solution for the second time, having failed to write a note-to-self blog post the last time. This time, I'm writing it. See?

The bottom line is that you need WSL to enable file system metadata, so that you can set the permissions you need on your key files. Here's an article explaining why, and here's one explaining how.

TL;DR

From within your WSL shell, create "/etc/wsl.conf". You'll probably need to sudo in to vi to do this or you won't be able to save it. In the file, add the following:

[automount]

options = "metadata"

With this in place, the next time you start the shell, metadata will be enabled, and you'll be able to "chmod 700" your key files to your heart's content.
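Once metadata is enabled, it's worth checking that the permissions really stick. The following is a small sketch, with the helper names secure_key and key_mode invented for the purpose and the key path in the usage comment purely hypothetical. It uses 600 for the key itself, which is the usual choice, although the 700 mentioned above satisfies the SSH client just as well.

```shell
# Restrict a private key (and the directory holding it) to the owner only.
secure_key() {
  chmod 700 "$(dirname "$1")" && chmod 600 "$1"
}

# Print the permission string of a file or directory, e.g. -rw-------
key_mode() {
  ls -ld "$1" | cut -c1-10
}

# Usage (hypothetical path). If this doesn't print -rw-------, the change
# did not stick, which is typically what you see on a Windows drive that
# was mounted without the metadata option:
#   secure_key /mnt/c/Users/me/.ssh/id_rsa && key_mode /mnt/c/Users/me/.ssh/id_rsa
```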

Tridion Core service PowerShell settings for SSO-enabled CMS

In a Single-Sign-On (SSO) configuration, it's necessary to use Basic Authentication for web requests to the Tridion Content Manager from the browser. This is probably the oldest way of authenticating a web request, and involves sending the password in plain over the wire. This allows the SSO system to make use of the password, which would be impossible if you used, for example, Windows Authentication. The down side of this is that you'd be sending the password in plain over the wire... can't have that, so we encrypt the connection with HTTPS.

What I'm describing here is the relatively simple use case of using the powershell module to log in to an SSO-enabled site using a domain account. Do please note that this won't work if you're expecting to authenticate using SSO. Then you'll need to mess around with federated security tokens and such things. My use case is a little simpler as I have a domain account I can log in with. As the site is set up to support most of the users coming in via SSO, these are the settings I needed, and hence this "note to self" post. If anyone has gone the extra mile to get SSO working, I'd be interested to hear about it.

So this is how it ends up looking:

Import-Module Tridion-CoreService

Set-TridionCoreServiceSettings -HostName 'contentmanager.company.com'

Set-TridionCoreServiceSettings -Version 'Web-8.5'

Set-TridionCoreServiceSettings -CredentialType 'Basic'

Set-TridionCoreServiceSettings -ConnectionType 'Basic-SSL'

$ServiceAccountPassword = ConvertTo-SecureString 'secret' -AsPlainText -Force

$ServiceAccountCredential = New-Object System.Management.Automation.PSCredential ('DOMAIN\login', $ServiceAccountPassword)

Set-TridionCoreServiceSettings -Credential $ServiceAccountCredential

$core = Get-TridionCoreServiceClient

$core.GetApiVersion() # The simplest test

This is just an example, so I've stored my password in the script. The password is 'secret'. It's a secret. Don't tell anyone. Still - even though I'm a bit lacking in security rigour, the PowerShell isn't. It only wants to work with secure strings and so should you. In fact, it's not much more fuss to work with ConvertTo-SecureString and friends to keep everything ship shape and Bristol fashion.

Using the Tridion PowerShell module in a restricted environment

At some point, pretty much every Tridion specialist is going to want to make use of Peter Kjaer's Tridion Core Service Powershell modules. The modules come with batteries included, and if you look at the latest version, you'll see that the modules are available from the PowerShell gallery, and therefore a simple install via Install-Module should "just work".

Most of us spend a lot of our time on computers that are behind a corporate firewall, and on which the operating system is managed for us by people whose main focus is on not allowing us to break anything. I recently found myself trying to install the modules on a system with an older version of PowerShell where Install-Module wasn't available. The solution for this is usually to install the PowerShellGet module which makes Install-Module available to you. In this particular environment, I knew that various other difficulties existed, notably with the way the PowerShell module path is managed. Installing a module would first require a solution to the problem of installing modules. In the past, I'd made a custom version of the Tridion module as a workaround, but now I was trying to get back to a clean copy of the latest, greatest version. Hacking things by hand would defeat my purpose.

It turned out that I was able to clone the Git repository, so I had the folder structure on disk. (Failing that I could have tried downloading a Zip file from GitHub.)

Normally, you install your modules in a location on the Module Path of your PowerShell, and the commonest of these locations is the WindowsPowerShell folder in your Documents folder. (There are other locations, and you can check these with "gc Env:\PSModulePath".) As I've mentioned, in this case, using the normal Module Path mechanism was problematic, so I looked a little further. It turned out the solution was much simpler than I had feared. You can simply load a module by specifying its location when you call Import-Module. I made sure that the tridion-powershell-modules folder I'd got from Git was in a known location relative to the script file from which I wanted to invoke it, and then called Import-Module using the location of Tridion-CoreService.psd1

$scriptLocation = Split-Path ((Get-Variable MyInvocation -Scope 0).Value).MyCommand.Path

import-module $scriptLocation\..\tridion-powershell-modules\CoreService\Tridion-CoreService.psd1

Getting the script location from the built-in MyInvocation variable is ugly, but pretty much standard PowerShell. Anyway - this works, and I now have a strategy for setting up my scripts to use the latest version of the core service module. Obviously, if you want the Alchemy or Content Delivery module, a similar technique ought to work.

Getting gvim to work from the Ubuntu on Windows bash prompt

Just lately I've been tinkering a bit more with Linux-y things, including trying to get to grips with a bit of bash scripting. As my main work environment is a Windows 10 system, the obvious place for such tinkering is in the Windows Subsystem for Linux (WSSL or WSL depending on whose abbreviation you favour). In any case, the bash prompt in Windows.

Generally, WSSL works rather well, <rant>my main proviso there being the really unhelpful problems with permissions. I get it... it's probably a really nasty job to fix it, but really!.... for chmod to be broken is just wrong! More to the point, it means I can't use a private key for ssh logins to other systems. Maybe I'll go back to cygwin after all.</rant>

Anyway, today's problem was rather more tractable. I wanted to edit a bash script using gvim. My first attempt was just to open it from the bash prompt:

dominic@DOMINIC:/mnt/d/code/bash$ gvim foo.sh

E233: cannot open display

Press ENTER or type command to continue

Yeah OK, that then falls back to a standard vim session in the terminal, but if that's what I'd wanted, I wouldn't have typed 'gvim'.

It turns out that there's a version of gvim in the Ubuntu user-space stuff that comes with WSSL. When you type gvim at the prompt, it finds /usr/bin/gvim in the PATH, and tries to open that.

Nil desperandum

dominic@DOMINIC:/mnt/d/code/bash$ file /usr/bin/gvim

/usr/bin/gvim: symbolic link to `/etc/alternatives/gvim'

dominic@DOMINIC:/mnt/d/code/bash$ sudo unlink /usr/bin/gvim

dominic@DOMINIC:/mnt/d/code/bash$ sudo ln -s /mnt/c/Program\ Files\ \(x86\)/vim/vim80/gvim.exe /usr/bin/gvim

After that it worked like a treat. Maybe the other way to go would be to see if you can get an XWindows server running on WSSL, but this got me up and running without having to get into even more faff with copies of rc files and whatnot.
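If you'd rather not unlink things in /usr/bin by hand, the same steps can be wrapped up with a check that the Windows gvim.exe is actually where you think it is. This is only a sketch: the function name relink is invented, and the usage comment assumes a ~/bin folder that comes before /usr/bin on your PATH, which is a variation on what I did above rather than the same thing.

```shell
# Point the symlink $2 at $1, but only if the target really exists.
relink() {
  if [ ! -e "$1" ]; then
    echo "target not found: $1" >&2
    return 1
  fi
  # -f replaces an existing link, -n avoids following it if it is a link
  ln -sfn "$1" "$2"
}

# Usage (assumes ~/bin exists and is ahead of /usr/bin on the PATH):
#   relink '/mnt/c/Program Files (x86)/vim/vim80/gvim.exe' "$HOME/bin/gvim"
```

Because the existing link is left alone when the target is missing, a typo in the path won't leave you without any gvim at all.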

Preparing HTML data for use in a Tridion Rich Text Format area

I recently had to create some Tridion components from code via the core service. The incoming data was in the form of HTML, and not XML in the XHTML namespace, which is what is required for a Tridion RTF area. I'd also had to do some preparatory clean-up of the data, and by the time I wanted to fix up the namespaces, I already had the input data in an XLinq XElement.

These days, if I'm processing XML in .NET, I'm quite likely to use XLinq. It's taken me a while to get comfortable with some of its idioms. The technique I ended up using is similar to the classic approach we typically adopt in XSLT, starting with an identity transform and making a couple of minor tweaks to the data as it goes through.

So, mostly by way of a "note to self", here's how it looks in XLinq. All you need to do is pass in your XElement containing your XHTML, and it will rip through all the elements and put them in the XHTML namespace, leaving all the attributes and other nodes untouched.

public XNode PutHtmlElementsInXhtmlNamespace(XNode input){

XNamespace xhtmlNs = "http://www.w3.org/1999/xhtml";

var element = input as XElement;

if (element != null)

{

XName name = xhtmlNs + element.Name.LocalName;

return new XElement(name,element.Attributes(),

element.Nodes().Select(n => PutHtmlElementsInXhtmlNamespace(n)));

}

return input;

}

In this way you can easily create data that's suitable for use in an RTF. Piecing the rest of a Content element together with XElement is pretty easy too, or of course, you can use the venerable Fields class for the rest.

Which device size are you looking at in Bootstrap 3?

If you work on websites these days, you've probably come across Bootstrap. It's an HTML/CSS/JS framework for producing responsive user interfaces for web sites. One of the things it does for you is manage a grid system in which your page has 12 columns, and you get to decide how many columns each element in your page should occupy. You do this by putting classes on your HTML elements that look something like "col-xs-4", which means "allow this element to occupy 4 columns on an extra small device". In Bootstrap 3, there are four device sizes: Extra small, Small, Medium and Large. If you specify different numbers of columns for the different devices, then as you resize your device (usually in the responsive emulator of your browser), you'll see the various blocks sliding under each other as things get smaller.

When you're doing this, it's quite handy to know which device size Bootstrap thinks it's got at any given moment. I wanted to know this, so after a bit of fiddling, I came up with the following:

<span class="hidden-sm hidden-md hidden-lg">XS</span> <span class="hidden-xs hidden-md hidden-lg">SM</span> <span class="hidden-xs hidden-sm hidden-lg">MD</span> <span class="hidden-xs hidden-sm hidden-md">LG</span>

With this pasted somewhere handy in the footer or header, you can monitor whether the changing shape of your page is in line with your expectations for a given device size. You'll see the letters that refer to the size of device you're looking at. Obviously, it's something you'd want to remove before you actually ship code.

A couple of provisos:

- This is for Bootstrap 3. Bootstrap 4 is different enough that you might even see it as a different framework. The equivalent technique would be with "display" classes that typically begin with "d-".

- You might be able to get this a bit tighter. The device sizes are a hierarchy, so maybe some of my classes aren't necessary. I stopped when it worked. Life's too short!

- Bootstrap is very customisable, so YMMV