Dominic Cronin's weblog

Removing the replacement tokens from Tridion configuration files, and choosing not to

In SDL Web 8.5 we saw the introduction of replacement tokens in the content delivery configuration files. Whereas previously we'd simply had XML files with attributes and elements that we filled in with the relevant values, the replacement tokens allowed for the values to be provided externally when the configuration file is used. The commonest way to do this is probably by using environment variables, but you can also pass them as arguments to the java runtime. (A while ago, I wasted a bunch of time writing a script to pass environment variables in via java arguments. You don't need to do this.)

So anyway - taking the deployer config as my example, we started to see this kind of thing:

<Queues>

<Queue Id="ContentQueue" Adapter="FileSystem" Verbs="Content" Default="true">

<Property Value="${queuePath}" Name="Destination"/>

</Queue>

<Queue Id="CommitQueue" Adapter="FileSystem" Verbs="Commit,Rollback">

<Property Value="${queuePath}/FinalTX" Name="Destination"/>

</Queue>

<Queue Id="PrepareQueue" Adapter="FileSystem" Verbs="Prepare">

<Property Value="${queuePath}/Prepare" Name="Destination"/>

</Queue>

Or from the storage conf of the disco service:

<Role Name="TokenServiceCapability" Url="${tokenurl:-http://localhost:8082/token.svc}">

So if you have an environment variable called queuePath, it will be used instead of ${queuePath}. In the second example, you can see that there's also a syntax for providing a default value, so if there's a tokenurl environment variable, that will be used, and if not, you'll get http://localhost:8082/token.svc.
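To make the substitution rule concrete, here is a minimal sketch in Python of how such tokens could be resolved. It is not how the product itself does it: the function name `resolve_tokens` is made up for illustration, and leaving a token untouched when there is neither a value nor a default is purely an assumption.

```python
import re

# ${name} or ${name:-default}; the name may not contain ':' or '}'.
TOKEN = re.compile(r"\$\{([^}:]+)(?::-([^}]*))?\}")

def resolve_tokens(text, values):
    """Replace each token with values[name], else its default, else leave it alone."""
    def substitute(match):
        name, default = match.group(1), match.group(2)
        if name in values:
            return values[name]
        if default is not None:
            return default
        return match.group(0)  # assumption: no value and no default keeps the token
    return TOKEN.sub(substitute, text)

line = '<Role Name="TokenServiceCapability" Url="${tokenurl:-http://localhost:8082/token.svc}">'
print(resolve_tokens(line, {}))
# <Role Name="TokenServiceCapability" Url="http://localhost:8082/token.svc">
```

Passing `os.environ` as `values` would mimic the environment variable case.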

This kind of replacement token is very common in the *nix world, where it's taken to even further extremes. Most of this is based on the venerable Shell Parameter Expansion syntax.

All this is great for automated deployments and I'm sure the team running SDL's cloud services makes full use of this technique. For now, I'm still using my own scripts to replace values in the config files, so a recent addition turned out to be a bit inconvenient. In Tridion Sites 9, the queue Ids in the deployer config have also been tokenised. So now we have this kind of thing:

<Queue Default="true" Verbs="Content" Adapter="FileSystem" Id="${contentqueuename:-ContentQueue}">

<Property Name="Destination" Value="${queuePath}"/>

</Queue>

Seeing as I had an XPath that locates the Queue elements by ID, this wasn't too helpful. (Yes, yes, in the general case it's great, but I'm thinking purely selfishly!) Shooting from the hip I quickly updated my script with an awesome regex :-) , so instead of

$config = [xml](gc $deployerConfig)

I had

$config = [xml]((gc $deployerConfig) -replace '\$\{(?:.*?):-(.*?)\}','$1')

About ten seconds after finishing this, I realised that what I should be doing instead is fixing my XPath to glom onto the Verbs attribute instead, but you can't just throw away a good regex. So - I present to you, this beautiful regex for converting shell parameter expansions (or whatever they are called) into their default values while using the PowerShell. In other words, ${contentqueuename:-ContentQueue} becomes ContentQueue.

How does it work? Here it comes, one piece at a time:

' single quote. Otherwise Powershell will interpret characters like $ and {, which you don't want

\ a backslash to escape the dollar from the regex

$ the opening dollar of the expansion expression

\{ match the {, also escaped from the regex

(?:.*?) match zero or more of anything, non-greedily, and without capturing

:- match the :-

(.*?) match zero or more of anything non-greedily. No ?: this time so it's captured for use later as $1

\} match the }

' single quote

The second parameter of -replace is '$1', which translates to "the first capture". Note the single quotes, for the same reason as before
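For anyone who wants to play with the pattern outside PowerShell, here is the same regex as a Python sketch, alongside a stricter variant that is my own assumption and not part of the original script. The stricter one stops the token name at the first `}` or `:`, which matters if a token without a default and a token with one ever share a line (the sample `Property` line below is invented to show that case).

```python
import re

# Same pattern as the PowerShell one; Python writes the first capture as \1 rather than $1.
ORIGINAL = re.compile(r"\$\{(?:.*?):-(.*?)\}")
# Hypothetical stricter variant: the token name may not contain '}' or ':'.
STRICT = re.compile(r"\$\{[^}:]*:-(.*?)\}")

def accept_defaults(text, pattern=STRICT):
    """Replace every ${name:-default} with its default value."""
    return pattern.sub(r"\1", text)

queue = '<Queue Verbs="Content" Id="${contentqueuename:-ContentQueue}">'
print(accept_defaults(queue, ORIGINAL))
# <Queue Verbs="Content" Id="ContentQueue">

# Invented line mixing both kinds of token, to show where the two patterns differ.
mixed = '<Property Value="${queuePath}" Name="${name:-Destination}"/>'
print(accept_defaults(mixed, ORIGINAL))  # <Property Value="Destination"/>
print(accept_defaults(mixed, STRICT))    # <Property Value="${queuePath}" Name="Destination"/>
```

With the original pattern, the lazy `.*?` happily runs from the first `${` to the first `:-` it can find, swallowing everything in between.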

So there you have it. Now if ever you need to rip through a bunch of config files and blindly accept the defaults for everything, you know how. But meh... you could also just not provide any values in the environment. I refuse to accept that this hack is useless. A reason will emerge. The universe abhors a scripting hack with no purpose.
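For completeness, the less exciting fix (locating the queue by its Verbs attribute instead of its Id) might look something like this. It's a rough sketch in Python rather than my actual PowerShell, the `set_destination` helper is invented for the example, and the `commitqueuename` token in the sample is a guess at what the second queue looks like.

```python
import xml.etree.ElementTree as ET

# Cut-down sample; only the ContentQueue element is copied from the real config.
SAMPLE = """
<Queues>
  <Queue Default="true" Verbs="Content" Adapter="FileSystem" Id="${contentqueuename:-ContentQueue}">
    <Property Name="Destination" Value="${queuePath}"/>
  </Queue>
  <Queue Verbs="Commit,Rollback" Adapter="FileSystem" Id="${commitqueuename:-CommitQueue}">
    <Property Name="Destination" Value="${queuePath}/FinalTX"/>
  </Queue>
</Queues>
""".strip()

def set_destination(root, verbs, path):
    """Find the queue by its Verbs attribute and overwrite its Destination property."""
    prop = root.find(f".//Queue[@Verbs='{verbs}']/Property[@Name='Destination']")
    prop.set("Value", path)

root = ET.fromstring(SAMPLE)
set_destination(root, "Content", "/data/queues")
set_destination(root, "Commit,Rollback", "/data/queues/FinalTX")
```

The tokenised Id is never touched, so it no longer matters what it contains.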

Encrypting passwords for Tridion content delivery

This is just a quick note to self, because I just spent a few minutes figuring out something fairly trivial and I don't want to forget it.

Previously, to encrypt a password for Tridion content delivery, you would do something like:

java -cp cd_core.jar com.tridion.crypto.Encrypt foobar

It's been a while since I did this, and I hadn't realised that in Web 8.5 it doesn't work any more. They've factored the Crypto class out into a utility jar, so now the equivalent command has become something like:

java -cp cd_core.jar;cd_common_util.jar com.tridion.crypto.Encrypt foobar

Of course, these days the jars also have build numbers in the name, so it's a bit uglier. The point is that you have to have cd_core and cd_common_util on your classpath.
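If the build numbers get in the way, something along these lines could assemble the command for you. It's a sketch in Python under a couple of assumptions: that the jar file names still start with `cd_core` and `cd_common_util`, and that both jars sit in one directory. The helper name `build_encrypt_command` is invented. It also uses the platform's own classpath separator, which is `;` on Windows and `:` elsewhere.

```python
import glob
import os

def build_encrypt_command(lib_dir, password):
    """Find the two jars (whatever their build number) and build the java command line."""
    jars = []
    for stem in ("cd_core", "cd_common_util"):
        # Assumption: the file name starts with the stem and ends in .jar.
        matches = sorted(glob.glob(os.path.join(lib_dir, stem + "*.jar")))
        if not matches:
            raise FileNotFoundError(f"no {stem}*.jar found in {lib_dir}")
        jars.append(matches[-1])
    classpath = os.pathsep.join(jars)  # ';' on Windows, ':' on *nix
    return ["java", "-cp", classpath, "com.tridion.crypto.Encrypt", password]
```

The returned list can be handed straight to `subprocess.run`.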

Stripping namespace declarations from XML

I've recently been working on an application that will allow members of our content management teams to search within a chosen folder in Tridion for specific content. You might think that's well enough covered by the built-in search functionality, but we're heading towards a search and replace feature, so we pretty much have to process the content ourselves. In the end users' view of the world, a Rich Text field in a component has... well... a rich text view, and, for the power-users, a Source tab where you can see the underlying HTML. That's all fine, but once you get to the technical implementation, it's a bit more complicated, and we'll end up replicating some of Tridion's own smoke and mirrors to present a view to the users that's consistent with what they are used to. This means not only that we need to be able to translate from text to HTML, but also from "XML in the XHTML namespace" to HTML. One of the building blocks we need to do this is the ability to take XML with namespace declarations, and get rid of them so that the result isn't in a namespace.

A purist (such as myself) might say that the only correct way to parse XML is with an XML parser, and just in case you've never ended up there, I heartily recommend that you read this answer on Stack Exchange before proceeding further. Still - in this case, what I want to do is amenable to RegExes, and yes, I know: now I have two problems. Anyway - FWIW - I started this at the office, thinking I'd just quickly Google for a namespace-stripping regex and I'd be on my way. Suffice it to say that the Internet is rubbish at this. I ended up with a page of links to rubbish regexes that just weren't going to float my boat. So I mailed the problem to myself at home, and today, in the quiet of a Sunday morning, it didn't seem quite so daunting. Actually, I'm still considering whether an XML-parser approach, or an XSLT might not be better, and I may end up there if my needs turn out to be more complex, but for now, here's the namespace stripper.

static Regex namespaceRegex = new Regex(@"
    xmlns              # literal
    (:[^\s=]+)?        # : followed by one or more non-whitespace, non-equals chars
    \s*                # optional whitespace
    =                  # literal
    \s*                # optional whitespace
    (?<quote>['""])    # Either a single or double quote - giving it the name 'quote' for back-reference
    .+?                # Non-greedily match anything
    \k<quote>          # The end-quote to match the one we found earlier
    ", RegexOptions.Singleline | RegexOptions.IgnoreCase | RegexOptions.IgnorePatternWhitespace);

public static string RemoveNamespacesFromDocument(string xml)
{
    return namespaceRegex.Replace(xml, string.Empty);
}

Of course, this is written in C#, and I'm taking advantage of the IgnorePatternWhitespace feature in .NET regexes, which allows for the copious comments that might well be necessary if I ever have to actually read this code instead of just writing it.

But just in case you are hardcore, and all that named matches and commenting fuss is for wusses, here's the TL;DR...

@"(?is)xmlns(:[^\s=]+)?\s*=\s*(['""]).+?\2"

What's not to like? :-)
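If you'd rather try it out before committing to C#, the compact form ports to Python more or less unchanged. This is only a sketch, and the function name is mine.

```python
import re

# Port of the compact pattern; the flags stand in for the inline (?is).
NAMESPACE_DECLARATION = re.compile(
    r"""xmlns(:[^\s=]+)?\s*=\s*(['"]).+?\2""",
    re.IGNORECASE | re.DOTALL,
)

def remove_namespace_declarations(xml):
    """Delete every xmlns="..." and xmlns:prefix="..." declaration from the string."""
    return NAMESPACE_DECLARATION.sub("", xml)

print(remove_namespace_declarations('<p xmlns="http://www.w3.org/1999/xhtml">Hello</p>'))
# <p >Hello</p>
```

Two things to be aware of: the whitespace in front of the declaration is left behind, and only the declarations go. An element or attribute that uses a prefix keeps it, so this is really only a complete answer for default namespaces.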

Mashing your scanned JPGs back into one big PDF

It happens more often these days. You get some form sent to you as a PDF. You print it out, and fill it in, and then you want to scan it back in and send it back. For one reason or another, my scanner likes to scan documents to JPEG files: one file per scan. Grr...

In the past, I've used some PDF printer driver or other to solve this problem, but under the water they pretty much all use ghostscript, so why not do it directly? I used to install cygwin on my Windows machines to get access to utilities like this, but these days, Windows embeds a pretty much functional Ubuntu.

So yeah - just directly using ghostscript. How hard can it be? Well it turns out that a bit of Googling leads you to typing some pretty gnarly command lines, especially since I had scanned a 15 page document into 15 separate JPG files. And then Adobe Acrobat didn't understand the resulting document. No good at all. So then I googled further and found this.

It turns out that by installing not only ghostscript but imagemagick, the imagemagick "convert" utility knows how to do exactly what you want, presumably by enlisting the help of ghostscript. So simply by cd'ing to the directory where I had my scans, this...

$ convert *.JPG outputfile.pdf

... did the trick. Pretty neat, huh? Note to self....
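One thing to watch: the shell expands *.JPG in plain alphabetical order, so if your scanner numbers its files without leading zeros, page 10 lands before page 2. Here's a small Python sketch that builds the argument list in numeric order instead. The file names are made up, and `convert_command` is just a name I've chosen.

```python
import re

def natural_key(name):
    """Split 'scan10.JPG' into ['scan', 10, '.jpg'] so the numbers compare numerically."""
    return [int(part) if part.isdigit() else part.lower()
            for part in re.split(r"(\d+)", name)]

def convert_command(filenames, output):
    """Build the ImageMagick command line with the pages in numeric order."""
    return ["convert", *sorted(filenames, key=natural_key), output]

scans = ["scan10.JPG", "scan2.JPG", "scan1.JPG"]  # hypothetical file names
print(convert_command(scans, "outputfile.pdf"))
# ['convert', 'scan1.JPG', 'scan2.JPG', 'scan10.JPG', 'outputfile.pdf']
```

If your files are already zero-padded (scan01, scan02...), the plain glob is fine as it is.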

Decoding webdav URLs (or how to avoid going cross-eyed reading your error messages)

I was doing some Content Porting the other day. When moving code up the DTAP street the general practice is to switch off dependency management and, well, manage the dependencies yourself. This is great for a surgical software release, where you know exactly what's in the package and can be sure that you aren't unintentionally releasing something you hadn't planned to, but....

Yeah - there's always a but. In this case, you have to make sure that all the items your exported items depend on are present, either in the export or in the target system. Sometimes you miss one, and during the import you get a nice error message saying which item is missing. Unfortunately, the location of the item is given as a WebDAV URL. If the item in question has lots of spaces, quote marks, or other special characters in it, by the time you get to read the URL in all its escaped glory, it can be a complete alphabet soup.

So there I was, squinting at some horrible URL and mentally parsing out the escape sequences to figure out what I was looking at... when it dawned on me. Decoding encoded URLs is not work for humans - we have computers for that. So I fired up my trusty Powershell, thinking "hey, I have the awesome power of the .NET framework at my disposal". As it turns out, the HttpUtility class that most devs are familiar with is probably not there in your ordinary desktop OS, but System.Net.WebUtility is. So if you've copied a WebDAV URL into your paste buffer, you can open the shell, type in

[net.webutility]::Ur

From here on tab completion will fill in the rest of UrlDecode, and with one or two more keystrokes and a right-mouse-click you have something like this:

[net.webutility]::UrlDecode("/webdav/Some%20Publication/This%20%26%20that/More%20%22stuff%22%20to%20read/a%20soup%C3%A7on%20of%20something")

and then hitting enter gets you this:

/webdav/Some Publication/This & that/More "stuff" to read/a soupçon of something

which is much more readable.

Of course, if even that is too much typing for you, you can stick something like this in your profile:

function decode ($subject) {

[net.webutility]::UrlDecode($subject)

}

Of course, none of this is strictly necessary - you can always stare at the WebDAV URLs and decipher them as an exercise in mental agility, but some days you just want the easy life.
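And if you happen to be on a box with Python rather than PowerShell, the standard library does the same job. A quick sketch, reusing the `decode` name from the profile function above:

```python
from urllib.parse import unquote

def decode(subject):
    """Turn %-escapes back into readable characters (UTF-8 by default)."""
    return unquote(subject)

url = "/webdav/Some%20Publication/This%20%26%20that/More%20%22stuff%22%20to%20read/a%20soup%C3%A7on%20of%20something"
print(decode(url))
# /webdav/Some Publication/This & that/More "stuff" to read/a soupçon of something
```

Note that `unquote` leaves a literal + alone; `unquote_plus` is the one that turns it into a space.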

Spoofing a MAC address in gentoo linux

I spent a few hours this weekend fiddling with networking things at home. One of the things I ran into was that the DHCP server provided by my ISP was behaving erratically. Specifically, it was being very fussy about giving out a new lease. It would give out a lease to a Windows 7 system I was using for testing, but not to my Gentoo server. At some point, having spent the day with this kind of frustration, I was ready to put up with almost any hack to get things running. Someone on the #gentoo IRC channel suggested that spoofing the MAC address that already had a lease might be a solution. Their solution was to do this:

ifconfig eth0 down
ifconfig eth0 hw ether 08:07:99:66:12:01
ifconfig eth0 up

Here, you have to imagine that eth0 is the name of the interface, although on my system it isn't any more. (Another thing I learned this weekend was about predictable interface names.) You should also imagine that 08:07:99:66:12:01 is the mac address of the network interface on my Win7 system.

The trouble with this is that it doesn't integrate very well in the standard init scripts that get things going on a Gentoo system. Network interfaces are started by running /etc/init.d/net.eth0 (although that's just a link to another script). The configuration is to be found in /etc/conf.d/net where you can add directives that control the way your network interfaces are configured. The most important of these are the ones that begin with "config_". For example, to set up a static IP for eth0, you might say something like:

config_eth0="192.168.0.99 netmask 255.255.255.0 brd 192.168.0.255"

or for DHCP it's much simpler:

config_eth0="dhcp"

So my obvious first try for setting up a spoofed MAC address was something like this:

config_eth0="dhcp hw ether 08:07:99:66:12:01"

but this didn't work at all. Anyway - after a bit of fiddling and more Googling (sorry - I can't remember where I found this) it turned out that there's a specific directive just for this purpose. I tried this

mac_eth0="08:07:99:66:12:01"
config_eth0="dhcp"

It works a treat. Note that the order is important, which is obvious once you know it I suppose, but wasn't obvious to me until I'd got it wrong once.

The good news after that was that for an established lease, everything worked rather better.

Getting the complete component XML

One of the basic operations that a Tridion developer needs to be able to do is getting the full XML of a Component. Sometimes you only need the content, but say, for example, that you're writing an XSLT that transforms the full Component document - you need to be able to get an accurate representation of the underlying storage format. (OK - for now let's just skate over the fact that different versions have different XML formats under the water.)

In the balmy days of early R5 versions, this was pretty easy to do. The Tridion installation included a "protocol handler", which meant that if you just pasted a TCM URI into the address bar of your browser, you'd get the XML of that item displayed in the browser. This functionality was actually present so that you could reference Tridion items via the document() function in an XSLT, but this was a pretty useful side effect. OK... you had to be on the server itself, but hey - that's not usually so hard for a developer. If you couldn't get on the server, or you found it less convenient, another option was to configure the GUI to be in debug mode, and you'd get an extra button that opened up some "secret" dialogs that gave you access to, among other things, the XML of the item you had open in the GUI.

Moving on a little to the present day, things are a bit different. Tridion versions since 2011 have a completely different GUI, and XSLT transforms are usually done via the .NET framework, which has other ways of supporting access to "arbitrary" URIs in your XSLT. The GUI itself is built on a framework of supported APIs, but doesn't have a secret "debug" setting. However, this isn't a problem, because all modern browsers come fully loaded with pretty powerful debugging tools.

So how do we go about getting the XML if we're running an up-to-date version of Tridion? This question cropped up just a couple of days ago on my current project, where there's an upgrade from Tridion 2009 to 2013 going on. I didn't really have a simple answer - so here's how the complicated answer goes:

My first option when "talking to Tridion" is usually the core service. The TOM.NET API will give you the XML of an item directly via the .ToXml() methods. Unfortunately, someone chose not to surface this in the core service API. Don't ask me why. Anyway - for this kind of development work, you could use the TOM.NET. You're not really supposed to use the TOM.NET for code that isn't hosted by Tridion (such as templates) but on your development server, what the eye doesn't see the heart won't grieve over. Of course, in production code, you should take SDL's advice on such things rather more seriously. But we're not reduced to that just yet.

Firstly, a brief stop along the way to explain how we solved the problem in the short term. Simply enough - we just fired up a powershell and used it to access the good-old-fashioned TOM.COM. Like this:

PS C:\> $tdse = new-object -com TDS.TDSE

PS C:\> $tdse.GetObject("tcm:2115-5977",1).GetXml(1919)

Simple enough, and it gets the job done... but did I mention? We have the legacy pack installed, and I don't suppose this would work unless you have.

So can it be done at all with the core service? Actually, it can, but you have to piece the various parts together yourself. I did this once, a long time ago, and if you're interested, you can check out my ComponentFactory class over on a long lost branch of the Tridion power tools project. But that's probably too much fuss for day to day work. Maybe there are interesting possibilities for a powershell module to make it easier, but again.... not today.

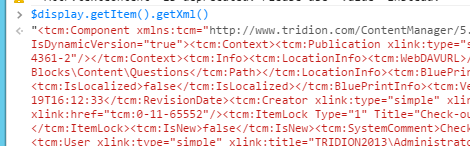

But thinking about these things triggered me to remember the Power tools project. One of the power tools integrates an extra tab into your item popup, giving you the raw XML view. I'd been thinking to myself that the GUI API (Anguilla) probably had reasonably easy support for what we're trying to do, but I didn't want to go to the effort of figuring it all out. Never fear: after a quick poke around in the sources I found a file called ItemXmlTab.ascx.js, and there I found the following gem:

var xmlSource = $display.getItem().getXml();

That's all you need. The thing is... the power tool is great. It does what it says on the box, and as far as I'm concerned, it's an exceedingly good idea to install it on your development server. But still, there are reasons why you might not. Your server might be managed by someone else, and they might not be so keen, or you might be doing some GUI extension development yourself and want to keep a clear field of view without other people's extensions cluttering up the system. Whatever - sometimes it won't be there, and you'd still like to be able to just suck that goodness out of Tridion.

Fortunately - it's not a problem. Remember when I said most modern browsers have good development tools? We use them all the time, right? F12 in pretty much any browser will get you there - then you need to be able to find the console. Some browsers will take you straight there with Ctrl+Shift+J. So you just open the relevant Tridion item, go to the console and grab the XML. Here's a screenshot from my dev image.

So now you can get the XML of an item on pretty much any modern Tridion system without installing a thing. Cool, eh? Now some of you at the back are throwing things and muttering something about shouldn't it be a bookmarklet? Yes it should. That's somewhere on my list, unless you beat me to it.

Dumping publication properties to a spreadsheet - a Powershell one-liner

A colleague mentioned to me today that he'd solved a problem with Tridion publishing that had been caused by the publication path being incorrectly set on some publications. We talked about how useful it would be to be able to get a summary of the paths without having to open every publication. Time for a powershell one-liner! How about this?

$core.GetSystemWideList((new-object PublicationsFilterData)) | select-object -property Title,MultimediaPath,MultimediaURL,PublicationPath| Export-Csv -path c:\pubs.csv

Those of you who have been following along will notice that I have imported the core service namespace using the reflection module, but even on a bare Tridion system, you could type this easily enough. The CSV file can be opened up directly in Excel, and Bob's your uncle.