Dominic Cronin's weblog

Removing the replacement tokens from Tridion configuration files, and choosing not to

In SDL Web 8.5 we saw the introduction of replacement tokens in the content delivery configuration files. Whereas previously we'd simply had XML files with attributes and elements that we filled in with the relevant values, the replacement tokens allow the values to be provided externally when the configuration file is used. The commonest way to do this is probably by using environment variables, but you can also pass them as arguments to the Java runtime. (A while ago, I wasted a bunch of time writing a script to pass environment variables in via Java arguments. You don't need to do this.)

So anyway - taking the deployer config as my example, we started to see this kind of thing:

    <Queues>
        <Queue Id="ContentQueue" Adapter="FileSystem" Verbs="Content" Default="true">
            <Property Value="${queuePath}" Name="Destination"/>
        </Queue>
        <Queue Id="CommitQueue" Adapter="FileSystem" Verbs="Commit,Rollback">
            <Property Value="${queuePath}/FinalTX" Name="Destination"/>
        </Queue>
        <Queue Id="PrepareQueue" Adapter="FileSystem" Verbs="Prepare">
            <Property Value="${queuePath}/Prepare" Name="Destination"/>
        </Queue>

Or from the storage conf of the disco service:

<Role Name="TokenServiceCapability" Url="${tokenurl:-http://localhost:8082/token.svc}">

So if you have an environment variable called queuePath, it will be used instead of ${queuePath}. In the second example, you can see that there's also a syntax for providing a default value, so if there's a tokenurl environment variable, that will be used, and if not, you'll get http://localhost:8082/token.svc.
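To make the lookup rule concrete, here is a minimal sketch in Python of how such tokens could be resolved. It is only an illustration of the behaviour described above, not how the content delivery code actually does it. The function name `resolve_tokens` is made up for the example, and leaving a token untouched when it has neither a value nor a default is an assumption of the sketch.

```python
import re

# ${name} or ${name:-default}; the default part is optional.
TOKEN = re.compile(r'\$\{([^{}:]+)(?::-([^{}]*))?\}')

def resolve_tokens(text, env):
    """Replace each token with env[name], falling back to its default."""
    def substitute(match):
        name, default = match.group(1), match.group(2)
        if name in env:
            return env[name]        # a provided value always wins
        if default is not None:
            return default          # otherwise use the :- default
        return match.group(0)       # assumption: leave unresolved tokens as-is
    return TOKEN.sub(substitute, text)
```

Passing `dict(os.environ)` as `env` would mimic the environment variable case. A plain dictionary is used here so that the behaviour is easy to try out.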

This kind of replacement token is very common in the *nix world, where it's taken to even further extremes. Most of this is based on the venerable Shell Parameter Expansion syntax.

All this is great for automated deployments and I'm sure the team running SDL's cloud services makes full use of this technique. For now, I'm still using my own scripts to replace values in the config files, so a recent addition turned out to be a bit inconvenient. In Tridion Sites 9, the queue Ids in the deployer config have also been tokenised. So now we have this kind of thing:

    <Queue Default="true" Verbs="Content" Adapter="FileSystem" Id="${contentqueuename:-ContentQueue}">
        <Property Name="Destination" Value="${queuePath}"/>
    </Queue>

Seeing as I had an XPath that locates the Queue elements by ID, this wasn't too helpful. (Yes, yes, in the general case it's great, but I'm thinking purely selfishly!) Shooting from the hip I quickly updated my script with an awesome regex :-) , so instead of

$config = [xml](gc $deployerConfig)

I had

$config = [xml]((gc $deployerConfig) -replace '\$\{(?:.*?):-(.*?)\}','$1')

About ten seconds after finishing this, I realised that what I should be doing instead is fixing my XPath to glom onto the Verbs attribute instead, but you can't just throw away a good regex. So - I present to you, this beautiful regex for converting shell parameter expansions (or whatever they are called) into their default values while using the PowerShell. In other words, ${contentqueuename:-ContentQueue} becomes ContentQueue.

How does it work? Here it comes, one piece at a time:

' single quote. Otherwise Powershell will interpret characters like $ and {, which you don't want

\ a backslash to escape the dollar from the regex

$ the opening dollar of the expansion expression

\{ match the {, also escaped from the regex

(?:.*?) match zero or more of anything, non-greedily, and without capturing

:- match the :-

(.*?) match zero or more of anything non-greedily. No ?: this time so it's captured for use later as $1

\} match the }

' single quote

The second parameter of -replace is '$1', which translates to "the first capture". Note the single quotes, for the same reason as before
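One thing to watch with the lazy `.*?` is a line that holds a token without a default followed by one with a default: the match then starts at the first `${` and swallows everything up to the second token's `:-`. The sketch below transliterates the pattern to Python's `re` module to show this, next to a stricter variant. The stricter pattern and the name `accept_defaults` are my own additions for illustration, not part of the original script.

```python
import re

# The pattern from the script above, in Python's regex syntax.
LAZY = re.compile(r'\$\{(?:.*?):-(.*?)\}')

# Stricter variant (an assumption of this sketch): the token name may not
# contain braces or a colon, and the default may not contain braces.
STRICT = re.compile(r'\$\{[^{}:]*:-([^{}]*)\}')

def accept_defaults(text, pattern=STRICT):
    """Replace every ${name:-default} with its default value."""
    return pattern.sub(r'\1', text)

line = 'Value="${queuePath}/x" Id="${contentqueuename:-ContentQueue}"'
print(accept_defaults(line, LAZY))    # the ${queuePath} token is swallowed
print(accept_defaults(line, STRICT))  # only the token with a default changes
```

For the deployer config as shown, each line holds a single token, so the original pattern is fine there.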

So there you have it. Now if ever you need to rip through a bunch of config files and blindly accept the defaults for everything, you know how. But meh... you could also just not provide any values in the environment. I refuse to accept that this hack is useless. A reason will emerge. The universe abhors a scripting hack with no purpose.

Preparing HTML data for use in a Tridion Rich Text Format area

I recently had to create some Tridion components from code via the core service. The incoming data was in the form of HTML, and not XML in the XHTML namespace, which is what is required for a Tridion RTF area. I'd also had to do some preparatory clean-up of the data, and by the time I wanted to fix up the namespaces, I already had the input data in an XLinq XElement.

These days, if I'm processing XML in .NET, I'm quite likely to use XLinq. It's taken me a while to get comfortable with some of its idioms. The technique I ended up using is similar to the classic approach we typically adopt in XSLT, starting with an identity transform and making a couple of minor tweaks to the data as it goes through.

So, mostly by way of a "note to self", here's how it looks in XLinq. All you need to do is pass in your XElement containing your XHTML, and it will rip through all the elements and put them in the XHTML namespace, leaving all the attributes and other nodes untouched.

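    using System.Linq;      // for Select
    using System.Xml.Linq;  // XNode, XElement, XNamespace, XName

    public XNode PutHtmlElementsInXhtmlNamespace(XNode input)
    {
        XNamespace xhtmlNs = "http://www.w3.org/1999/xhtml";
        var element = input as XElement;
        if (element != null)
        {
            // Rebuild the element under the same local name in the XHTML namespace,
            // keeping its attributes and recursing into its child nodes.
            XName name = xhtmlNs + element.Name.LocalName;
            return new XElement(name, element.Attributes(),
                element.Nodes().Select(n => PutHtmlElementsInXhtmlNamespace(n)));
        }
        // Text, comments and other non-element nodes pass through unchanged.
        return input;
    }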

In this way you can easily create data that's suitable for use in an RTF. Piecing the rest of a Content element together with XElement is pretty easy too, or of course, you can use the venerable Fields class for the rest.
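For comparison, the same identity-transform-with-a-tweak idea can be sketched outside .NET. The version below uses Python's standard `xml.etree.ElementTree` rather than XLinq, so it is an analogue and not a port. The function name is hypothetical, and it assumes the input was parsed with the default parser (which drops comments and processing instructions) and that text lives in `.text` and `.tail` rather than in separate nodes.

```python
import xml.etree.ElementTree as ET

XHTML_NS = "http://www.w3.org/1999/xhtml"

def put_elements_in_xhtml_namespace(element):
    """Return a copy of element with every element name in the XHTML namespace."""
    # ElementTree stores qualified names as "{namespace}local"; keep the local part.
    local_name = element.tag.rsplit('}', 1)[-1]
    result = ET.Element(f"{{{XHTML_NS}}}{local_name}", dict(element.attrib))
    # Text before the first child and text after the closing tag are copied as-is.
    result.text = element.text
    result.tail = element.tail
    for child in element:
        result.append(put_elements_in_xhtml_namespace(child))
    return result
```

Calling it on the result of `ET.fromstring('<p class="x">Hello <b>world</b>!</p>')` gives back a `p` element in the XHTML namespace with its attribute and text intact.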

Powershell 5 for tired old eyes

With the release of Powershell 5, they introduced syntax highlighting. This is, in general, a nice improvement, but I wasn't totally happy with it, so I had to find out how to customise it. My problems were probably self-inflicted to some extent, as I think at some point I had tweaked the console colour settings. The Powershell is hosted in a standard Windows console, and the colours it uses are in fact the 16 colours available from the console.

The console colours start out by default as fairly basic RGB combinations. You can see these if you open up the console properties (right-click on the title bar of a console window will get you there). In the powershell, these are given names - the powershell has its own enum for these, which maps pretty directly on to the ConsoleColor enumeration of the .NET framework.

| ConsoleColor | Description | Red | Green | Blue |
| --- | --- | --- | --- | --- |
| Black | The color black. | 0 | 0 | 0 |
| Blue | The color blue. | 0 | 0 | 255 |
| Cyan | The color cyan (blue-green). | 0 | 255 | 255 |
| DarkBlue | The color dark blue. | 0 | 0 | 128 |
| DarkCyan | The color dark cyan (dark blue-green). | 0 | 128 | 128 |
| DarkGray | The color dark gray. | 128 | 128 | 128 |
| DarkGreen | The color dark green. | 0 | 128 | 0 |
| DarkMagenta | The color dark magenta (dark purplish-red). | 128 | 0 | 128 |
| DarkRed | The color dark red. | 128 | 0 | 0 |
| DarkYellow | The color dark yellow (ochre). | 128 | 128 | 0 |
| Gray | The color gray. | 192 | 192 | 192 |
| Green | The color green. | 0 | 255 | 0 |
| Magenta | The color magenta (purplish-red). | 255 | 0 | 255 |
| Red | The color red. | 255 | 0 | 0 |
| White | The color white. | 255 | 255 | 255 |
| Yellow | The color yellow. | 255 | 255 | 0 |

In the properties dialog of the console these are displayed as a row of squares like this:

and you can click on each colour and adjust the red-green-blue values. In addition to the "Properties" dialog, there is also an identical "Defaults" dialog, also available via a right-click on the title bar. Saving your tweaks in the Defaults dialog affects all future consoles, not only powershell consoles.

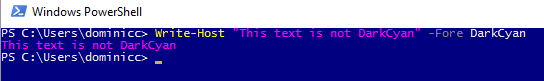

In the Powershell, you can specify these colours by name. For example, the fourth one from the left is called DarkCyan. This is where it gets really weird. Even if you have changed the console colour to something else, it's still called DarkCyan. In the following screenshot, I have changed the fourth console colour to have the values for Magenta.

Also of interest here is that the default syntax highlighting colour for a String, is DarkCyan, and of course, we also get Magenta in the syntax-highlighted Write-Host command.

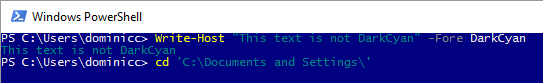

Actually - this is where I first had trouble. The next screenshot shows the situation after setting the colours back to the original defaults. You can also see that I am trying to change directory, and that the name of the directory is a String.

My initial problem was that I had adjusted the Blue console color to have some green in it. This meant that a simple command such as CD left me with unreadable text with DarkCyan over a slightly green Blue background. This gave a particularly strange behaviour, because the tab-completion wraps the directory in quotes (making it a String token) when needed, and not otherwise. This means that as you tab through the directories, the directory name flips from DarkCyan to White and back again, depending on whether there's a space in it. Too weird...

But all is not lost - you also have control over the syntax highlighting colours. You can start with listing the current values using:

Get-PSReadlineOption

And then set the colours for the various token types using Set-PSReadlineOption. I now have the following line in my profile

Set-PSReadlineOption -TokenKind String -ForegroundColor White

(If you use the default profile for this, you will be fine, but if you use one of the AllHosts profiles, then you need to check that your current host is a ConsoleHost.)

Anyway - lessons learned... Be careful when tweaking the console colours - this was far less risky before syntax highlighting... and you can also fix the syntax highlighting colours if you need to, but you can only choose from the current console colours.

New Tridion cookbook article: Recursive walk of Tridion tree

I'm still trying to get the important parts of my Tridion developer summit talk online. With a code-based demo like that, sharing the slides is pretty pointless, so I'm putting the code online wherever it makes sense. So far this has been in the Tridion cookbook. Here's the latest:

https://github.com/TridionPractice/tridion-practice/wiki/Recursive-walk-of-Tridion-tree

The thing that really triggered me to get this online was that someone had recently asked me if it was possible to query Tridion to find items that were local to a publication rather than shared from higher in the BluePrint. With the tree walk in place, this becomes almost trivial. (I'm not saying that there aren't better ways to get the list of items to process, but the tree walk certainly works.)

So having got the items into a variable following the technique in the recipe, finding the shared items becomes as simple as:

$items | ? {$_.BluePrintInfo.IsShared}

But it might be more productive to throw all the items into a spreadsheet along with the relevant parts of their BluePrint Info:

    $items | select Title, Id, @{n="IsShared";e={$_.BluePrintInfo.IsShared}}, `
        @{n="IsLocalized";e={$_.BluePrintInfo.IsLocalized}} `
        | Export-csv blueprintInfo.csv

Am I the only one that finds this fun? It's fun, right! :-)

Spoofing a MAC address in gentoo linux

I spent a few hours this weekend fiddling with networking things at home. One of the things I ran into was that the DHCP server provided by my ISP was behaving erratically. Specifically, it was being very fussy about giving out a new lease. It would give out a lease to a Windows 7 system I was using for testing, but not to my Gentoo server. At some point, having spent the day with this kind of frustration, I was ready to put up with almost any hack to get things running. Someone on the #gentoo IRC channel suggested that spoofing the MAC address that already had a lease might be a solution. Their solution was to do this:

    ifconfig eth0 down
    ifconfig eth0 hw ether 08:07:99:66:12:01
    ifconfig eth0 up

Here, you have to imagine that eth0 is the name of the interface, although on my system it isn't any more. (Another thing I learned this weekend was about predictable interface names.) You should also imagine that 08:07:99:66:12:01 is the mac address of the network interface on my Win7 system.

The trouble with this is that it doesn't integrate very well in the standard init scripts that get things going on a Gentoo system. Network interfaces are started by running /etc/init.d/net.eth0 (although that's just a link to another script). The configuration is to be found in /etc/conf.d/net where you can add directives that control the way your network interfaces are configured. The most important of these are the ones that begin with "config_". For example, to set up a static IP for eth0, you might say something like:

config_eth0="192.168.0.99 netmask 255.255.255.0 brd 192.168.0.255"

or for DHCP it's much simpler:

config_eth0="dhcp"

So my obvious first try for setting up a spoofed MAC address was something like this:

config_eth0="dhcp hw ether 08:07:99:66:12:01"

but this didn't work at all. Anyway - after a bit of fiddling and more Googling (sorry - I can't remember where I found this) it turned out that there's a specific directive just for this purpose. I tried this

    mac_eth0="08:07:99:66:12:01"
    config_eth0="dhcp"

It works a treat. Note that the order is important, which is obvious once you know it I suppose, but wasn't obvious to me until I'd got it wrong once.

The good news after that was that for an established lease, everything worked rather better.

Editing Tridion templates with a little Wasavi sauce

This post is dedicated to all the brave Tridion hackers that struggled through the vbScript years, editing endless templates, most likely in IE6 or worse. How many of us, back in the day, wished for better editing support in the browser? These days, of course, if you have any sense, most of your complex code will be safely tucked away in Visual Studio, but still... who doesn't still occasionally have to carve up a Razor building block, or even DWT? Sure the browsers have all become awesome, but when all is said and done, you're still editing that template in a textarea. Cheer up - that's all about to change!

I first came across Wasavi a couple of months ago - I can't even remember what the context was, but I mentally classified it as an interesting curiosity and moved on. It was still installed in my browser, but I forgot about it until today, when it suddenly came in useful. Oh hang on a sec... what's Wasavi? It's a browser extension that turns each textarea into a vi editor. Cute eh?

OK - I can see what you're thinking... oh yeah - vi. Bloody geeks. And fair enough - I cut my teeth on vi in 1989 running on a nasty old mainframe running Multics. I still like it as an editor, but that probably only indicates a certain level of perversity. So why am I pestering you, my fellow Tridion people, with it? Well - of course, some of you will be as comfortable as I am in grungy old-skool editors. For the rest of you, it may still be useful enough to have it installed in your browser (Chrome, Firefox or Opera... so the first two, eh?)

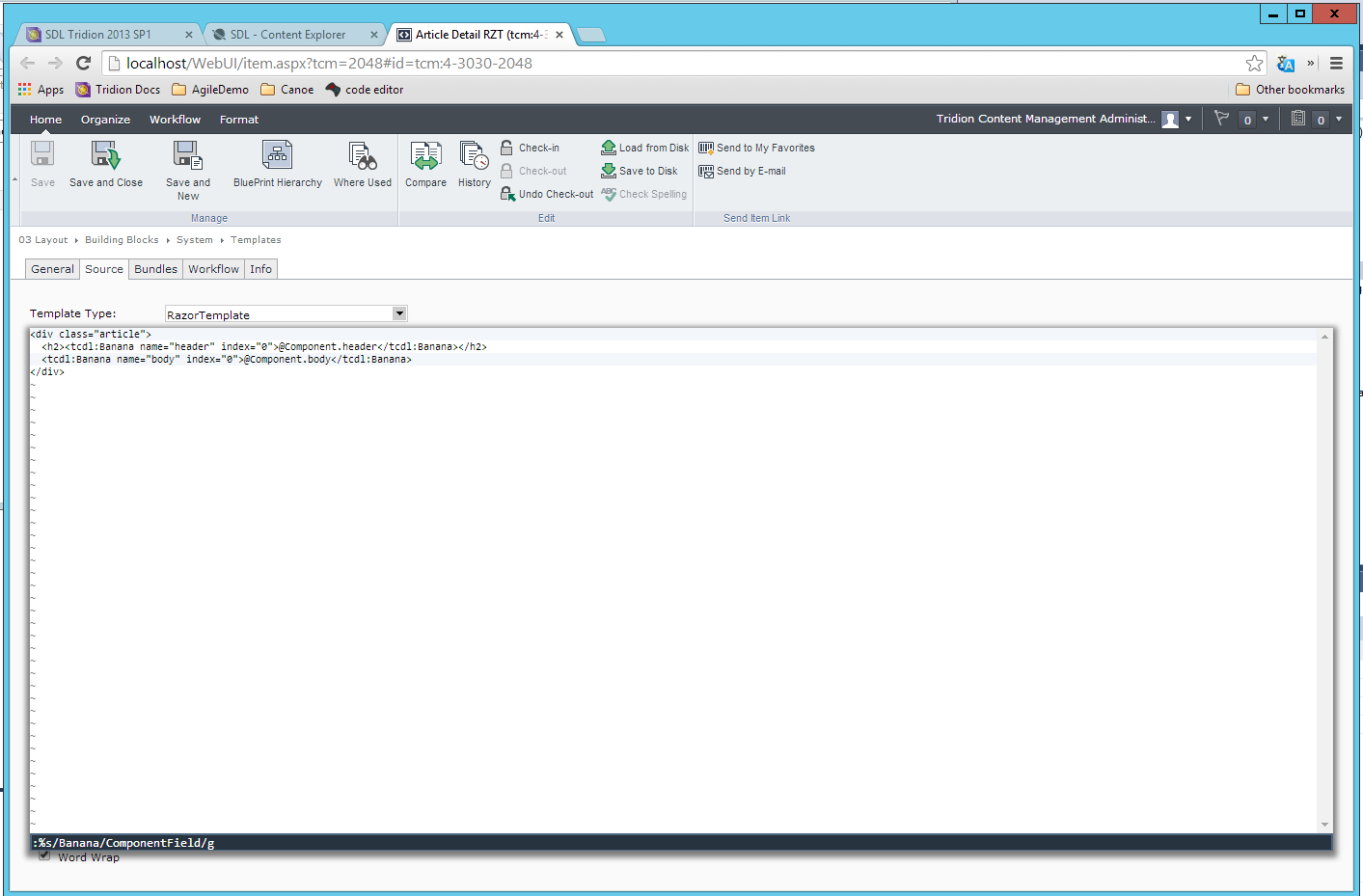

I offer you.... tada.... Global Search and replace!! As you can see in the screenshot, something awful has happened to my template, and all the instances of "ComponentField" have unfortunately been transformed to "Banana". How to fix this?

    <Ctrl>-<Enter>
    :%s/Banana/ComponentField/g<Enter>
    :wq

Ctrl-Enter opens up the wasavi view. The line beginning with :%s means "on every line, substitute all instances of Banana with ComponentField", and :wq is vi-speak for write-quit, which is the same as save and close, which returns you to the normal textarea view.

This is one example, albeit a fairly handy one, but vi is capable of much more. The next time you're looking at some awkward editing task - think of wasavi, and just Google for the relevant vi commands.

Edit: Just thought of another cool way to use this.... vi has parenthesis-matching, so if you have to unscramble a badly formatted piece of code, just park on a paren, or any other kind of bracket, and hit % to go to its counterpart. (Full-on vim has good auto-formatting features too, but I'm not sure if the browser implementation does much more than the basics... still - useful.)

Getting my VMware server to resolve DNS in a reasonable time.

I have a Windows 2012 server that I run under VMWare. I've probably mentioned this image before, as it's the one I use for my Tridion research. I'm fairly unusual in that I like to have my database server running "on the bare metal" of my laptop rather than in the Windows Server image. It's probably just perversity or masochism or whatever, but that's how I roll. What this means is that I have two network interfaces on the image: one configured as "Host only", which I use for my database connections and other "on the box" stuff, and another running NAT. Sure, you could run a development image completely isolated from the Internet, but it'd be a pain, so I run the NAT interface as well.

All good in theory, but as it turned out, it was a pain anyway, because it was taking 10 seconds to resolve a DNS name. Don't ask me why 10 seconds - presumably it was hitting some timeout and then trying an approach that worked better. Anyway - it was getting annoying. Sure I could flip out of the image to run a browser outside, but nah! Apart from anything else, I hate to be irritated by things I don't understand. I don't mind having things I don't understand, - gee, you'd go crazy! - but if it's an in-your-face irritation, that's another story.

So I poked around a bit. I could run:

[net.dns]::GetHostByName("www.yahoo.com")

in my powershell on the image and it would take 10 seconds. Natively on the laptop - instant response. So I had a quick look in the VMWare network settings. There are some obscure settings on the NAT interface for policies for automatically detecting DNS servers. But hang on - was it attempting to get DNS from the Host only interface, or the NAT one? So what would nslookup tell me:

    PS C:\Users\Administrator> nslookup
    Default Server:  UnKnown
    Address:  192.168.126.1

    > www.yahoo.com
    Server:  [192.168.126.1]
    Address:  192.168.126.1

    DNS request timed out.
        timeout was 2 seconds.
    DNS request timed out.
        timeout was 2 seconds.
    DNS request timed out.
        timeout was 2 seconds.
    DNS request timed out.
        timeout was 2 seconds.
    *** Request to [192.168.126.1] timed-out
    > server 192.168.146.2
    Default Server:  [192.168.146.2]
    Address:  192.168.146.2

    > www.yahoo.com
    Server:  UnKnown
    Address:  192.168.146.2

    Non-authoritative answer:
    Name:    ds-eu-fp3.wa1.b.yahoo.com
    Addresses:  2a00:1288:f00e:1fe::3000
              2a00:1288:f006:1fe::3000
              2a00:1288:f006:1fe::3001
              2a00:1288:f00e:1fe::3001
              87.248.112.181
              87.248.122.122
    Aliases:  www.yahoo.com
              fd-fp3.wg1.b.yahoo.com
              ds-fp3.wg1.b.yahoo.com
              ds-eu-fp3-lfb.wa1.b.yahoo.com

OK - so the first thing that this told me was that the default lookup was on my Host only interface, and that this was failing. When I manually set the server to the one on the NAT interface, boom... the response came back in a split second.
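If you want to put a number on "10 seconds" versus "a split second", a lookup can be wrapped in a timer. This is a rough Python sketch of the same check as the `[net.dns]` one-liner earlier in this post. The function name `timed_lookup` and the `resolver` parameter are inventions of the example. It uses whatever DNS server the operating system picks, which is exactly the thing being tested here.

```python
import socket
import time

def timed_lookup(hostname, resolver=socket.gethostbyname):
    """Resolve hostname and return (address, seconds the lookup took)."""
    start = time.perf_counter()
    address = resolver(hostname)   # uses the OS default resolver unless overridden
    elapsed = time.perf_counter() - start
    return address, elapsed
```

Running `timed_lookup("www.yahoo.com")` inside the image before and after changing the interface order should make the difference obvious.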

Next problem - how do I get it to default to the one that works? (Yes - I could also attempt to get the Host only one working properly, but not if it's easy to switch to the other - life's too short, and with networking, getting it to stop being irritating is much more achievable than understanding!)

After a bit of Googling I discovered that Windows brings up the network interfaces in a specified order, and the first one becomes the "primary" interface, which in turn is used as the default for DNS and goodness knows what else. All I needed to do was change the order. I picked up a hint from The Regime and was almost surprised to find that also in Windows Server 2012, you can get to the advanced settings of the network interfaces by hitting and releasing the Alt key. (Who knows about this stuff? Isn't that just disturbing?) A couple of minutes later I was testing it and finding that it worked.

So it's all good. I still don't understand networking, but that's never been a serious itch for me.

Debugging 64 bit Tridion content delivery on IIS 7.5

I'm currently developing a web application which will run on Windows 2008 R2 and which is intended to run in a 64bit Application pool. This means that I'm running IIS 7.5, and that the web application is installed with the 64 bit versions of the Tridion content delivery assemblies. As you'll know if you've tried to run this kind of web application in a 32 bit process, you pretty soon get exceptions telling you that you have an invalid format. This gets a little inconvenient if you just start to debug your web application in Visual Studio. By default, if you have a page selected, and hit the big green Run triangle, the page will launch in IIS Express. If you have IIS 7.5, then IIS Express runs a 32 bit process, so the default setup just isn't going to work for you.

So - what to do? I had two options:

- Configure the properties of the web application to debug using IIS rather than IIS Express

- Launch the web page directly from the browser, and attach the debugger to the correct w3wp.exe process.

To be honest, the second of these was the choice that most matched my usual debugging approaches. Having said that, I did try the first approach, but so far without success. Visual Studio 2012 has frozen on me a few times while trying this. I'm interested if anyone has any tips on getting this working, but right now, I'm happy enough that I was able to succeed in attaching a debugger to w3wp.exe.

My biggest challenge was to figure out which process I wanted to attach to. On my development server, I have quite a few web sites running, and it's not altogether obvious which w3wp.exe to attach to. Attaching to them all might work in a trivial case, but realistically, it takes quite a while to load all the dlls, and adding any more processes than necessary is just going to hurt too much. So - how do you find out which process it is?

The first step is to ensure you have the IIS powershell provider installed on your server. These days, this is shipped as a module, so if it's available on your system, you should be able to open a powershell and type:

Get-Module -ListAvailable

If the response includes "WebAdministration" you are good to go. Just import the module as follows:

Import-Module WebAdministration

If this succeeds, you should be able to "change directory" into the IIS provider. (Although a PowerShell purist might prefer set-location... whatever floats your boat!)

cd IIS:

If you can't find the module, then go into the Server manager, and check that you have the relevant role services for IIS installed. On other platforms, you might find that you can install it from the WebInstaller from the MSDN web site.

Now you're ready to find the process that you want to attach to. Assuming that your application pool is called "MyApplicationPool", you can list its worker processes like this (or use "dir" or "ls", both of which, like "gci", are aliases for Get-ChildItem):

    > gci IIS:\AppPools\MyApplicationPool\WorkerProcesses

    Process  State      Handles  Start Time
    Id
    -------- -----      -------  ----------
    2608     Running    776      1/2/2013 6:55:33 PM

This assumes, of course, that your app pool is actually running, but you'd have made sure it was before trying to debug it, right. Anyway - as you can see, the process id is there just to read off, and you can get straight on with your debugging session.

Enabling XML syntax-highlighting for .config files in gVim

I've used the vi text editor for many years; (at least long enough to know that it's pronounced vie and not vee-eye!). Over those years my level of expertise has varied somewhat - I'm fairly sure I've learned some commands and forgotten them several times over. Anyway - recently (i.e. in the last year or so), I've put some more effort in to reacquainting myself with some of its many joys. In practice, of course, I really mean vim: I'd be hard-pressed to remember the last time I saw vi in its "good-old-fashioned" form (does one say Plain-old-vi?) As most of my work is on Windows systems, this means using gVim.

Of the many improvements that vim has over vi, syntax highlighting is one of my favourites. The trouble is, one of my commonest use-cases for editing text files on Windows systems is .NET configuration files. Because these have a file extension of .config, they aren't recognised by default as XML files, and I end up going through the rigmarole of selecting one menu option to get a choice of file types added to the menus, and then locating XML among those newly added options to get highlighting to come on. Well there had to be a better way, and of course there was. What you have to do is this:

- Locate your vi directory (on the system I was working on this evening, it's "C:\Program Files (x86)\Vim\")

- Having found this directory, locate or create C:\Program Files (x86)\Vim\vimfiles\ftdetect

- In ftdetect, create a file called config.vim with the following contents:

au BufRead,BufNewFile *.config set filetype=xml

I have Windows configured to use vi as the default editor for .config files, so now with this in place, all I have to do is double-click on the file and it opens with XML syntax-highlighting enabled. Great stuff!

Why is it really slow to access Tridion via webdav?

Today I wanted to upload 20 or so image files to my Tridion server. This is a bit of a faff to do through the normal user interface. (You'd have to create multimedia components one by one and then upload the binaries individually.) But no problem, because you can always use WebDAV, right? I wanted to upload the images from the server, which runs Windows 2008 Server R2. OK - so where are we now? Erm... Computer.... right-click ... Map network drive.... Pick a letter.... http://localhost/webdav/ ....OK! Boom... there we are - a nicely mapped webdav drive.

But.... it was awful. Like wading knee-deep through treacle with all the acrobats of the Chinese state circus balanced on your head. Slow? I could have made a cup of tea while it opened a folder.

So what was going on? My first instinct was that it probably wasn't Tridion to blame. Something like this, that more or less renders the feature unusable would have been flushed out during product testing, and fixed. So let's start by blaming Windows! (Millions of Apple fan-persons and Linux-inhaling Bill-haters can't all be wrong eh?) Oh enough of that. Suffice it to say that a quick google took me to Mark Lognoul's blog, where he describes the solution to this problem on Vista or Seven. Does it work on Server 2008? Yup - works like a charm. Thanks Mark. Job's a good'un.