Dominic Cronin's weblog

Logback could be groovy! But XML FTW

Anyone who works with Tridion content delivery will be familiar with the fact that Logback is used as the logging framework. Recently I found myself looking into this more than I had previously, so here are a couple of observations that might be interesting. The first is that you can use the groovy scripting language instead of XML to write your configuration files. (I'll get to exactly how useful, or otherwise, this might be in a bit...) Anyway - the following is a machine translation of the logback.xml file that ships with Tridion. Now - proponents of the groovy approach will tell us that groovy can be much terser than the XML equivalent. At first sight it doesn't look much different, but I imagine you could factor out the creation of all those appenders to some sort of factory, and then it would look a lot shorter. Can I leave that as "an exercise for the student"? :-)

    import ch.qos.logback.classic.encoder.PatternLayoutEncoder
    import ch.qos.logback.core.rolling.RollingFileAppender
    import ch.qos.logback.core.rolling.TimeBasedRollingPolicy
    import java.nio.charset.Charset

    import static ch.qos.logback.classic.Level.${LOG.LEVEL}
    import static ch.qos.logback.classic.Level.OFF

    scan()

    def log.pattern = "%date %-5level %logger{0} - %message%n"
    def log.history = "7"
    def log.folder = "c:/tridion/log"
    def log.level = "ERROR"
    def log.encoding = "UTF-8"

    appender("rollingTransportLog", RollingFileAppender) {
        rollingPolicy(TimeBasedRollingPolicy) {
            fileNamePattern = "${log.folder}/cd_transport.%d{yyyy-MM-dd}.log"
            maxHistory = "${log.history}"
        }
        encoder(PatternLayoutEncoder) {
            charset = Charset.forName("${log.encoding}")
            pattern = "${log.pattern}"
        }
        prudent = true
    }

    appender("rollingDeployerLog", RollingFileAppender) {
        rollingPolicy(TimeBasedRollingPolicy) {
            fileNamePattern = "${log.folder}/cd_deployer.%d{yyyy-MM-dd}.log"
            maxHistory = "${log.history}"
        }
        encoder(PatternLayoutEncoder) {
            charset = Charset.forName("${log.encoding}")
            pattern = "${log.pattern}"
        }
        prudent = true
    }

    appender("rollingMonitorLog", RollingFileAppender) {
        rollingPolicy(TimeBasedRollingPolicy) {
            fileNamePattern = "${log.folder}/cd_monitor.%d{yyyy-MM-dd}.log"
            maxHistory = "${log.history}"
        }
        encoder(PatternLayoutEncoder) {
            charset = Charset.forName("${log.encoding}")
            pattern = "${log.pattern}"
        }
        prudent = true
    }

    appender("rollingCoreLog", RollingFileAppender) {
        rollingPolicy(TimeBasedRollingPolicy) {
            fileNamePattern = "${log.folder}/cd_core.%d{yyyy-MM-dd}.log"
            maxHistory = "${log.history}"
        }
        encoder(PatternLayoutEncoder) {
            charset = Charset.forName("${log.encoding}")
            pattern = "${log.pattern}"
        }
        prudent = true
    }

    appender("rollingSessionPreviewLog", RollingFileAppender) {
        rollingPolicy(TimeBasedRollingPolicy) {
            fileNamePattern = "${log.folder}/cd_preview.%d{yyyy-MM-dd}.log"
            maxHistory = "${log.history}"
        }
        encoder(PatternLayoutEncoder) {
            charset = Charset.forName("${log.encoding}")
            pattern = "${log.pattern}"
        }
        prudent = true
    }

    logger("com.tridion", ${LOG.LEVEL})
    logger("com.tridion.transport", ["rollingTransportLog"])
    logger("com.tridion.transport.HTTPSReceiverServlet", ["rollingDeployerLog"])
    logger("com.tridion.transport.transportpackage", ["rollingDeployerLog"])
    logger("com.tridion.transformer", ["rollingDeployerLog"])
    logger("com.tridion.deployer", ["rollingDeployerLog"])
    logger("com.tridion.tcdl", ["rollingDeployerLog"])
    logger("com.tridion.event", ["rollingDeployerLog"])
    logger("com.tridion.monitor", ["rollingMonitorLog"])
    logger("Tridion.ContentDelivery", ${LOG.LEVEL}, ["rollingCoreLog"])
    logger("com.tridion.preview", ["rollingSessionPreviewLog"])
    logger("com.tridion.storage.persistence.session", ["rollingSessionPreviewLog"])

    root(OFF, ["rollingCoreLog"])

So why might this be interesting to Tridion infrastructure specialists? Well it isn't. Not at all. At least not right now - because doing it this way requires the groovy runtime to be available, and that isn't in a standard Tridion content delivery setup. I attempted a trivial hack by dropping a couple of the groovy jars in place, but no joy. Realistically, this would only be a practical approach if Tridion decided to build it into the product and support it. I imagine the dev team puts quite some effort into keeping their dependency tree as clean as possible, so this might come under the heading of stuff that would only get added if people really, really wanted it!

Anyway - I love the smell of XML in the morning, so it's all the same to me. So on with the useful part of this post. If you check out exactly how logback gets its configuration settings, you'll see that before it picks up logback.xml, it first looks for a file called logback-test.xml. I'm happy to say that this does work out-of-the-box. This means that when you come across a server where you need to debug a problem, and its standard logging settings need to be boosted up to DEBUG, you don't have to edit the existing config file. Just drop your insanely debuggy logback-test.xml file in next to logback.xml (and restart things) and Bob's your uncle. When you're done, just delete it (and restart). Even the restarting might be optional: another feature of logback is that you can configure it to scan for configuration changes, although I have no clue whether it would then pick up the existence of logback-test.xml.
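If you do keep such a file in your toolkit, dropping it in and taking it out again is easy to script. What follows is only a sketch under some assumptions: the function names `Enable-DebugLogging` and `Disable-DebugLogging`, the `cd_debug.log` file name and the choice of a plain FileAppender are all made up for illustration, and the configuration folder is simply whichever one already holds logback.xml. The log folder and pattern are borrowed from the listing earlier in this post.

```powershell
# A deliberately noisy configuration: everything at DEBUG, into one file.
# Appender name and file name are illustrative assumptions.
$debugConfig = @'
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <appender name="debugFile" class="ch.qos.logback.core.FileAppender">
    <file>c:/tridion/log/cd_debug.log</file>
    <encoder>
      <pattern>%date %-5level %logger{0} - %message%n</pattern>
    </encoder>
  </appender>
  <root level="DEBUG">
    <appender-ref ref="debugFile"/>
  </root>
</configuration>
'@

function Enable-DebugLogging {
    param([Parameter(Mandatory=$true)][string]$ConfigDir)
    # logback-test.xml goes next to the existing logback.xml, which stays untouched
    $target = Join-Path $ConfigDir 'logback-test.xml'
    Set-Content -Path $target -Value $debugConfig -Encoding UTF8
    $target
}

function Disable-DebugLogging {
    param([Parameter(Mandatory=$true)][string]$ConfigDir)
    $target = Join-Path $ConfigDir 'logback-test.xml'
    if (Test-Path $target) { Remove-Item $target }
}
```

Call `Enable-DebugLogging -ConfigDir` with the folder that contains logback.xml, restart whatever hosts the content delivery code, and call `Disable-DebugLogging` with the same folder when you're done.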

Ok - this is such a minor benefit over copying and renaming that it hardly justifies the deaths of all those IP packets that were bravely lost in transmission during the serving of this web page. Whatever.... that's the thing with research, eh? Negative results are also important to report. In short - logback.groovy looked cool, but won't work - and maybe carrying a customised logback-test.xml around in your toolkit might be handy, but then again, maybe not.

I'll sign off with one more public service announcement. I recently saw someone using a logback configuration that specified a logging level of ON. Apparently they had been advised to do so by someone who ought to have checked first. The possible values are OFF, ERROR, WARN, INFO, DEBUG, TRACE and ALL. Anything else will not be recognised, and you'll get DEBUG logging, which is what logback falls back to in that case.
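A quick way to catch this kind of mistake is to scan a configuration file for level attributes that logback would not recognise. The sketch below is an illustration rather than anything that ships with Tridion or logback: `Find-InvalidLogbackLevel` is a hypothetical name, the list of level names is typed in by hand (it includes ALL, plus INHERITED and NULL, which logback accepts on loggers), and values containing a `${...}` property reference are skipped because they can't be judged without resolving them.

```powershell
# Level names that logback recognises (the comparison is case-insensitive).
# INHERITED and its synonym NULL are accepted on <logger> elements.
$validLevels = 'ALL','TRACE','DEBUG','INFO','WARN','ERROR','OFF','INHERITED','NULL'

function Find-InvalidLogbackLevel {
    param([Parameter(Mandatory=$true)][xml]$Config)

    # Every element that carries a level attribute, wherever it sits
    foreach ($node in $Config.SelectNodes('//*[@level]')) {
        $level = $node.GetAttribute('level')
        # Skip property references such as ${log.level}
        if ($level.Contains('${')) { continue }
        if ($validLevels -notcontains $level.ToUpperInvariant()) {
            # Emit one object per offending element
            New-Object psobject -Property @{
                Element = $node.LocalName
                Name    = $node.GetAttribute('name')
                Level   = $level
            }
        }
    }
}
```

Something like `Find-InvalidLogbackLevel -Config ([xml](Get-Content logback.xml))` would then list any element whose level is going to end up as DEBUG by accident.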

The Razor Mediator for Tridion - in practice

Most of my blog output is to be found right here, but this week I published an article on the Indivirtual web site.

http://inside.indivirtual.nl/2013/10/the-razor-mediator-for-tridion-in-practice/

The article goes into the background of the Razor Mediator for Tridion, and our thinking behind using it on a customer project. I hope you find it interesting.

Why the "new" Tridion events system is a game-changer

When SDL released Tridion 2011, a lot had changed. So much so, that the introduction of a new Events system was almost unremarkable. After all, they had to replace the old one, so there was a new one. Nothing to see here, move along now please. Most of the effort in those days went into a flurry of upgrades and ports of old-style events systems to the new architecture. So you might be forgiven if you hadn't ever stopped to think just how much of a difference the new architecture makes. Specifically - we now subscribe to events using a mechanism based on .NET multicast delegates. This has a couple of consequences.

Firstly, we are freed from the need to write dispatchers. To implement an events system with the old "COM+"-based system, you would implement an interface containing all the event handler methods, and register your implementation with a specific COM ProgID. Tridion would ask COM+ to instantiate an object of that ProgID, and merrily call into whichever of the interface methods were configured to be called. This meant there could only be one implementation. All your functionality had to be in that implementation, even if different parts of your system had different requirements. So if, for example, you were using Tridion for your Internet site and for your intranet, or for whatever other reason you were running diverse sites, then you'd need a dispatcher. This would be a simple events system implementation that did nothing more than pass on the calls to one of several different implementations, usually depending on configuration. So calls coming from your Internet publications would go to one DLL, and the ones from your intranet would go to another, but Tridion itself would only see one interface: that of your dispatcher. This was quite a pain. You could separate out different concerns this way, but you wouldn't want to do more than carving it up into very big chunks. Like I said - Internet and intranet, or maybe different customers or departments. Nothing more fine-grained than that anyway. The new events system meant we didn't need to have a dispatcher any more, and the "configuration" could mostly be baked into the code itself.

For myself (and I suspect for others), this was such a relief that it was enough. It wasn't until some time later that I realised that it was just a beginning. We'd got so used to limiting ourselves to big chunks that it didn't really sink in that we could really start slicing things up. The game-changer I referred to in the title of this piece is exactly that. We can slice it up as small as we want. OK - big deal, you might say - but if we can slice it up arbitrarily, then we can write an events system implementation for a single concern. And that means [ta-da!!] that we can start making re-usable modules that can just be "dropped in" on whatever project needs them. I recently wrote a Component Save event handler that enforces height and width constraints on multimedia components. It does one thing - that's all, so I can use it whenever I have that need. When I went to configure it, I noticed that on my research system I already had three other event handlers registered. These are all from Tridion, and belong to Audience Manager, UGC, and External Content Library respectively. Without looking, I don't know or care whether any of them subscribes to the Initiated phase of a Component Save. They can all co-exist.

So now I'm looking forward to seeing a lot more (small and useful) events systems made available in the community - the days are gone when an events system only made sense for a single implementation.

Dumping publication properties to a spreadsheet - a Powershell one-liner

A colleague mentioned to me today that he'd solved a problem with Tridion publishing that had been caused by the publication path being incorrectly set on some publications. We talked about how useful it would be to be able to get a summary of the paths without having to open every publication. Time for a Powershell one-liner! How about this?

$core.GetSystemWideList((new-object PublicationsFilterData)) | select-object -property Title,MultimediaPath,MultimediaURL,PublicationPath| Export-Csv -path c:\pubs.csv

Those of you who have been following along will notice that I have imported the core service namespace using the reflection module, but even on a bare Tridion system, you could type this easily enough. The CSV file can be opened up directly in Excel, and Bob's your uncle.
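One detail worth knowing if the file is headed for Excel: in Windows PowerShell, Export-Csv writes a `#TYPE ...` line before the header row unless you add `-NoTypeInformation`. The sketch below shows the same select-and-export pattern on stand-in objects, so it runs without a Tridion system. The titles and paths are invented sample data, not output from a real installation, and the temp-folder file name is arbitrary.

```powershell
# Stand-in objects with the same property names as the publication data (values are made up)
$pubs = @(
    New-Object psobject -Property @{ Title = 'Example Web'; MultimediaPath = '\media'; MultimediaURL = '/media'; PublicationPath = '\' }
    New-Object psobject -Property @{ Title = 'Example Intranet'; MultimediaPath = '\media'; MultimediaURL = '/media'; PublicationPath = '\intranet' }
)

$csvPath = Join-Path ([System.IO.Path]::GetTempPath()) 'pubs-example.csv'

# Same shape as the one-liner: pick the columns, then export without the #TYPE line
$pubs |
    Select-Object -Property Title, MultimediaPath, MultimediaURL, PublicationPath |
    Export-Csv -Path $csvPath -NoTypeInformation

# Read it back to check what a spreadsheet would see
Import-Csv -Path $csvPath
```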

A Tridion tree-walk in Powershell

Now that I've got some reasonably terse syntax working for Tridion scripting, it's time to start building out some tooling to make the whole thing useful. It's quite often useful to be able to enumerate everything in your Tridion system, so walking the tree is a basic operation. You don't want to write the tree walk every time you have a different operation to perform, so it's handy to abstract the mechanics of the recursion out into a function. Somewhere in the nether regions of this blog, you'll find a JavaScript implementation of such a function. The basic technique I used in JavaScript was to have my tree-walking function accept a "process" function as an argument. For each item in your system, this is invoked, and is able to perform whatever processing is necessary on your item. (In the JavaScript version, I actually had two functions: process and filter. The filter function was responsible for deciding whether the item was interesting to process. In practice, this is probably too much abstraction. You can just as easily code an if-block in your process function, so on this occasion I'm restricting myself to just the one.)

To anyone who has written any JavaScript, it's pretty much impossible to miss the fact that functions are first-class objects. It may not be immediately apparent that this is true in Powershell, but it is. A Script Block in Powershell is simply an anonymous function, and you can pass script blocks around in variables or as parameters to other functions. (These days, the concept isn't even weird to C# hackers, what with lambda expressions and all.)
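To make that concrete without involving Tridion at all, here is a minimal sketch. The function name `Invoke-ForEachItem` and the sample strings are made up for the illustration; the point is only that a script block can be stored in a variable, passed as a parameter and invoked with the call operator `&`.

```powershell
function Invoke-ForEachItem {
    param(
        [object[]]$Items,
        [ScriptBlock]$Process
    )
    foreach ($item in $Items) {
        # & invokes the script block, passing $item as its first argument
        & $Process $item
    }
}

# A script block held in a variable...
$shout = { param($text) $text.ToUpper() }

# ...and handed to a function like any other value
$result = Invoke-ForEachItem -Items 'folder', 'schema' -Process $shout
$result -join ', '   # FOLDER, SCHEMA
```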

So - here goes: if you start with the function "recurseTridionItems" shown below....

    import-module Reflection
    import-namespace Tridion.ContentManager.CoreService.Client

    function recurseTridionItems{
        Param(
            [parameter(Mandatory=$true)]
            [ValidateNotNullOrEmpty()]
            [SessionAwareCoreServiceClient]$core,
            [IdentifiableObjectData]$parent,
            [ScriptBlock]$scriptblock,
            [int]$level = 0
        )
        $ro = new-object ReadOptions
        if ($parent -eq $null){
            # No parent given: start at the top, with the list of publications
            [PublicationData[]]$items = @($core.GetSystemWideList((new-object PublicationsFilterData)))
            foreach ($item in $items) {
                $fullItem = $core.Read($item.Id, $ro)
                &$Scriptblock $fullItem $level
                recurseTridionItems $core $fullItem $scriptblock ($level + 1)
            }
        }
        else {
            # List the children, using the filter that matches the kind of parent
            if ($parent -is [OrganizationalItemData]){
                $items = $core.GetList($parent.Id, (new-object OrganizationalItemItemsFilterData))
            } else {
                $items = $core.GetList($parent.Id, (new-object RepositoryItemsFilterData))
            }
            foreach($item in $items) {
                # GetList returns partially loaded objects, so re-read each item
                $fullItem = $core.Read($item.Id, $ro)
                &$Scriptblock $fullItem $level
                # Only containers have children to descend into
                if ($fullItem -is [PublicationData]) {
                    recurseTridionItems $core $fullItem $scriptblock ($level + 1)
                } elseif ($item -is [OrganizationalItemData]) {
                    recurseTridionItems $core $fullItem $scriptblock ($level + 1)
                }
            }
        }
    }

... this will take care of all the tree walking. For an example to show how you might use this, I've written a script block that outputs the Title of the item, indented based on the recursion level.

EDIT: my first version of this function didn't re-read the items that come from GetList. It worked fine for the trivial case of listing the titles but as soon as I tried anything more interesting, I discovered that GetList returns objects that are only partially loaded. This is apparently by design, as the documentation mentions it.

recurseTridionItems $core $null {param($item,$level)"`t" * $level + $item.Title}

On my system, this produces output like this:

_Empty Master

Building Blocks

Default Templates

Outbound E-mail

Generate Plain Text E-mail

Outbound E-mail Post-processing

Outbound E-mail Pre-processing

Generate Plain Text E-mail

Outbound E-mail Post-processing

Outbound E-mail Pre-processing

Set Output Item By Email Mode

Tridion.OutboundEmail.Templating.Templates

SDL External Content Library

Adjust SiteEdit 2009 markup for External Content Library i

Adjust SiteEdit 2012 markup for External Content Library i

Resolve External Content Library items

Search External Content Library items

Tridion.ExternalContentLibrary.Templating

Component Query

Convert Html to Xml

Convert Xml to Html

Default Finish Actions

Dreamweaver Region Selection

Enable inline editing for content

Enable inline editing for Page

Extract Binaries from Html

Image Resizer

Link Resolver

Publish Binaries in Package

Default Component Template

Default Component Template for UGC

Default Page Template

Default Page Template for UGC

Activate Tracking

Cleanup Template

Component Query

Convert Html to Xml

Convert Xml to Html

Default Dreamweaver Component Design

Default Dreamweaver Page Design

Default Finish Actions

Default UGC Dreamweaver Template design

Enable inline editing for content

Enable inline editing for Page

Enable User Generated Content Processing

Extract Binaries from Html

Extract Components from Page

Image Resizer

Link Resolver

Publish Binaries in Package

Sample XSLT Component Design

Target Group Personalization

Tridion.SiteEdit.Templating

Tridion.Ugc.Templating.DefaultTemplates

Default Multimedia Schema

root

01 Definitions

Building Blocks

Default Templates

Outbound E-mail

Generate Plain Text E-mail

I think I'll truncate it there: you get the picture. Obviously, this is a trivial use-case that probably isn't terribly useful on an industrial scale installation. Fortunately, your script-block doesn't have to be a one-liner, and you can easily expand on this technique to meet your own needs. I should think I'll find quite a few uses for it myself. Just one word of caution: this was just a quick hack, and I haven't tested it exhaustively.
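As an example of a script block that is more than a one-liner, here is a sketch that tallies items by their .NET type name instead of printing titles. `$counts` and `$countByType` are names invented for this example, and it relies on the script block being able to see `$counts` from the calling scope, which holds when everything is defined in the same session.

```powershell
# Tally of items seen so far, keyed by type name (for example FolderData)
$counts = @{}

$countByType = {
    param($item, $level)
    $typeName = $item.GetType().Name
    # A missing key yields $null, and 1 + $null is 1, so the first hit starts the count
    $counts[$typeName] = 1 + $counts[$typeName]
}
```

With the function from this post loaded, `recurseTridionItems $core $null $countByType` walks the tree, after which `$counts` holds the totals per type.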

Straightforward Powershell scripting with the Tridion core service

Almost exactly a year ago, I blogged about Getting to grips with the Tridion core service in Powershell. The core service had been around for a while even then, and the point was to actually start using it for some of the scripting tasks I had habitually done via the TOM. In many ways the TOM was much more script-friendly. Of course, that might have had something to do with the fact that it was created expressly for use from scripting languages. The Tridion core service API wasn't. I don't know exactly what they had in mind, but I'd imagine the thinking was that most mainstream users would use C#. Yeah, sure - any compliant .NET language would do, but F#? Nah!

But a year further on, and where are all those scripts I was going to write? I have to say, the comfort zone for scripting is quite different than for writing "proper" programmes. There's huge usefulness in being able to hack out something quickly, and very much a sense that stuff will be intermingled in a code-is-data stylee. So when I started actually trying to use the core service for scripting tasks, it sucked pretty hard. There were two main areas of difficulty:

- Getting the core service wired up in the first place

- Powershell doesn't natively have the equivalent of C#'s using directive to allow you to avoid typing the full namespace of your type.

I covered the first point last year. Suffice it to say that currently, I'm still using Peter Kjaer's Tridion powershell module, although at the moment I'm running a local copy, modified to cope with the Tridion 2013 client, and also to allow me to specify which protocol I want to use. (Obviously I don't want to have a permanent fork, so with a bit of luck, Peter will be able to integrate some of this work into the next release of the module.) On a related subject, my experience has been that working with the core service client has some fundamental differences with using the TOM. You could keep a TDSE lying around for minutes at a time, and it would still be usable, even after a method call had failed. The core service, even when you're on the same server, is most definitely a web service. Failed calls tend to leave your connection in a "faulted" state (i.e. unusable), and the timeouts are generally shorter. Once you are aware of this, you can adjust your coding style accordingly, but it adds somewhat to the ritual.
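One way to fold that ritual into a script is to check the client's state before using it, and to replace it if it has faulted. This is a sketch, not part of the Tridion Powershell module: `Get-UsableClient` and its `-Factory` parameter are hypothetical, and it assumes the client exposes the usual WCF `State` property and `Abort()` method.

```powershell
function Get-UsableClient {
    param(
        $Client,
        # How to create a fresh client; by default, the module's own cmdlet
        [ScriptBlock]$Factory = { Get-TridionCoreServiceClient }
    )
    if ($Client -eq $null) {
        return (& $Factory)
    }
    # Compare on the state's name, so this works for the enum value or a plain string
    if ('Faulted', 'Closing', 'Closed' -contains "$($Client.State)") {
        # A faulted channel can't be reused: abandon it and start again
        $Client.Abort()
        return (& $Factory)
    }
    $Client
}
```

The idea would be to write `$core = Get-UsableClient $core` before each batch of calls, so a failure earlier in the session doesn't leave you holding a dead connection.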

The namespace issue is on the face of it more trivial. OK - so it's a PITA to have to type something like:

$folder = new-object Tridion.ContentManager.CoreService.Client.FolderData

when all you wanted was a folder. You could argue: "well it works, doesn't it? Get over it!". However, I found all this extra verbiage too much of a distraction, not only when reading and editing longer scripts, but also when "knocking off a quick one". After all, what's the point of having a great scripting environment if your one-liners aren't?

So what to do? Well I scoured the Internet, and discovered that Powershell has something called a Type Accelerator. You've seen these often enough, as there are several available by default. For example, you can (and should) type "[string]" when what you really mean is "[System.String]". Unfortunately, creating type accelerators isn't completely straightforward, but No Worries, the Powershell community is vibrant and there are implementations available that take care of it for you. (OK, at the time of writing I know of one that works, but that's enough, eh?) My first Googling had taken me to the Type Accelerators module (PSTX) at codeplex. At first this seemed to be useful, but as soon as I moved to Tridion 2013, support for Powershell 3 became a hard requirement. This project is not actively maintained, and it doesn't work in Powershell 3. As I said, it's not straightforward to wire up type accelerators, and the code uses an undocumented API, which changed. Not Microsoft's fault.
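For the curious, the wiring that such a module does for you boils down to something like the sketch below. It leans on the same kind of undocumented internals, reached here through reflection, so treat it as an assumption about how Powershell 3 and later behave rather than a supported API; it may break in other versions. The accelerator name `sbuilder` and the use of StringBuilder as a stand-in for a long Tridion type name are arbitrary choices for the example.

```powershell
# Undocumented, internal class: reached via reflection, and liable to change between versions
$accelerators = [psobject].Assembly.GetType('System.Management.Automation.TypeAccelerators')

# Register a short alias for a long type name (skip it if the alias is already there)
if (-not $accelerators::Get.ContainsKey('sbuilder')) {
    $accelerators::Add('sbuilder', [System.Text.StringBuilder])
}

# The short name can now be used wherever a type name is expected
[sbuilder].FullName
```

The Tridion equivalent would register a name like `FolderData` against `Tridion.ContentManager.CoreService.Client.FolderData`, once the client assembly is loaded.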

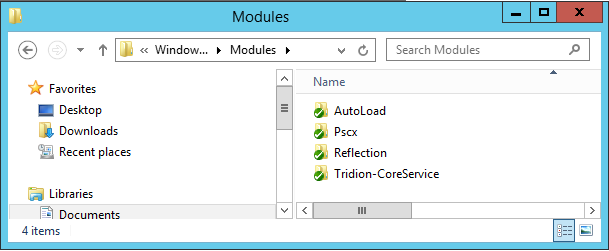

At this point, I went to the Powershell IRC channel (#powershell on freenode) and asked there if anyone knew about fixes or updates. I was steered in the direction of Jaykul's reflection module, available on Poshcode. (Make sure you get the latest version, and beware of the script getting truncated.) Installing modules is a fairly straightforward task: often as simple as dropping the files into a suitably named directory in your WindowsPowerShell modules directory (sometimes you need to "unblock" them). Here's a shot of what mine looks like: (What you can see is C:\Users\Administrator\Documents\WindowsPowerShell\Modules)

In there you can see the Reflection module and AutoLoad (which is another module it depends on). Apart from that you can see the Tridion core service module (and Pscx).

With all this in place, you are set to start writing your "straightforward" Tridion scripts. I've chosen to demonstrate this by hacking out a script that will create a default publication layout for you. It will be a handy tool to have on my research image, but mostly it's to show some real-world scripting.

    param ($publicationPrefix = "")

    $core = Get-TridionCoreServiceClient -protocol nettcp

    import-module reflection
    import-namespace Tridion.ContentManager.CoreService.Client

    function createPublication {
        Param(
            [parameter(Mandatory=$true)]
            [ValidateNotNullOrEmpty()]
            [SessionAwareCoreServiceClient]$core,
            [parameter(Mandatory=$true)]
            [ValidateNotNullOrEmpty()]
            [string]$title,
            [string]$key,
            [string[]]$parents,
            [switch]$Passthru
        )
        write-host "Creating publication $title"
        $newPublication = $core.GetDefaultData([ItemType]::Publication,"",$null)
        $newPublication.Title = $title
        if ($key -eq [string]::Empty){
            $newPublication.Key = $title
        }
        else {
            $newPublication.Key = $key
        }
        foreach ($parent in $parents){
            $link = new-object LinkToRepositoryData
            if ($parent -match "^tcm:"){
                $link.IdRef = $parent
            } elseif ($parent -match "^/webdav"){
                $link.WebDavUrl = $parent
            } else {
                continue
            }
            $newPublication.Parents += $link
        }
        if ($Passthru){
            $core.Create($newPublication, (new-object ReadOptions))
        }
        else {
            $core.Create($newPublication,$null)
        }
    }

    function createFolder([SessionAwareCoreServiceClient]$core, [string]$parentId, [string]$title, [switch]$Passthru){
        write-Host "Creating folder $title"
        $newFolder = $core.GetDefaultData([ItemType]::Folder, $parentId, $null)
        $newFolder.Title = $title
        if ($Passthru){
            $core.Create($newFolder, (new-object ReadOptions))
        }
        else {
            $core.Create($newFolder, $null)
        }
    }

    function createStructureGroup([SessionAwareCoreServiceClient]$core, [string]$parentId, [string]$title, [string]$directory, [switch]$Passthru){
        write-Host "Creating Structure Group $title"
        $newStructureGroup = $core.GetDefaultData([ItemType]::StructureGroup, $parentId, $null)
        $newStructureGroup.Title = $title
        $newStructureGroup.Directory = $directory
        if ($Passthru){
            $core.Create($newStructureGroup, (new-object ReadOptions))
        }
        else {
            $core.Create($newStructureGroup, $null)
        }
    }

    $chainMasterPub = createPublication $core "$($publicationPrefix)ChainMaster" -Passthru
    $rsg = createStructureGroup $core $chainMasterPub.Id "root" "root" -Passthru
    $definitionsPub = createPublication $core "$($publicationPrefix)Definitions" -parents @($chainMasterPub.Id) -Passthru
    $systemFolder = createFolder $core $definitionsPub.RootFolder.IdRef "System" -Passthru
    createFolder $core $systemFolder.Id "Schemas"
    $contentPub = createPublication $core "$($publicationPrefix)Content" -parents @($definitionsPub.Id) -Passthru
    $contentFolder = createFolder $core $contentPub.RootFolder.IdRef "Content" -Passthru
    $layoutPub = createPublication $core "$($publicationPrefix)Layout" -parents @($definitionsPub.Id) -Passthru
    createFolder $core $core.GetTcmUri($systemFolder.Id, $layoutPub.Id, $null) "Templates"
    createPublication $core "$($publicationPrefix)Web" -parents @($contentPub.Id,$layoutPub.Id)

The script accepts a parameter which lets me prefix the publications with some name relevant to whatever I'm doing, so if you invoke it like this:

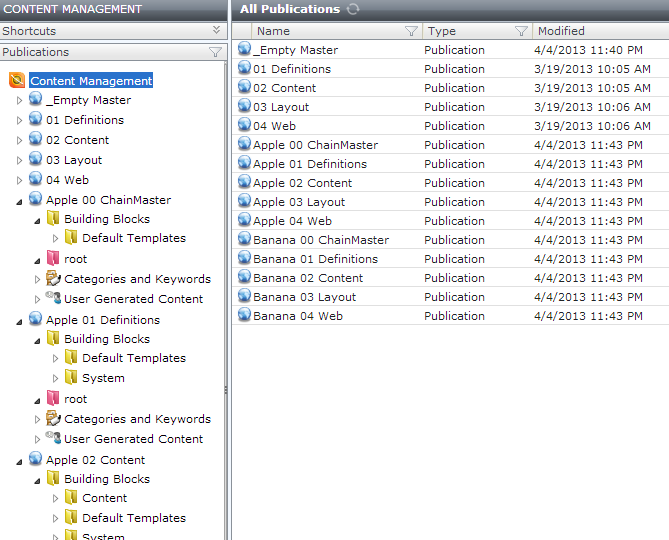

    PS C:\code\dominic\tridion> .\CreateDefaultStructure.ps1 "Apple"
    Connecting to the Core Service at localhost...
    Creating publication Apple 00 ChainMaster
    Creating Structure Group root
    Creating publication Apple 01 Definitions
    Creating folder System
    Creating folder Schemas
    Creating publication Apple 02 Content
    Creating folder Content
    Creating publication Apple 03 Layout
    Creating folder Templates
    Creating publication Apple 04 Web
    PS C:\code\dominic\tridion> .\CreateDefaultStructure.ps1 "Banana"
    Connecting to the Core Service at localhost...
    Creating publication Banana 00 ChainMaster
    Creating Structure Group root
    Creating publication Banana 01 Definitions
    Creating folder System
    Creating folder Schemas
    Creating publication Banana 02 Content
    Creating folder Content
    Creating publication Banana 03 Layout
    Creating folder Templates
    Creating publication Banana 04 Web
    PS C:\code\dominic\tridion>

... you end up with publications like this:

I import the Tridion-CoreService module in my Powershell profile, so it's not needed in the script. (As noted earlier, my copy is a bit hacked, as you can see from the fact that I'm passing a protocol parameter to Get-TridionCoreServiceClient). I don't import the reflection module by default, so this is done in the script, followed immediately by "import-namespace Tridion.ContentManager.CoreService.Client", which is the magic from the Reflection module that wires up all the type accelerators. Once this is done, you can see that I can simply type [ReadOptions] instead of [Tridion.ContentManager.CoreService.Client.ReadOptions], and so on. Much better, I think! :-)

If you're wondering about the -Passthru switch on my functions, this is a Powershell idiom that lets you indicate whether or not you are interested in the return value. In Tridion, this is controlled by whether or not you pass a ReadOptions argument. (Perhaps obviously, the Read() method wouldn't make any sense if it didn't return anything, so a $null works fine there - I'm still agonizing over whether it would be more stylish to pass a ReadOptions anyway. What do you think?)

Actually that's a good question. What do you think? I'm still trying to find my feet in terms of the correct idioms for this kind of work. Let's get the debate out in the open. Feel free to say mean things about my code (not obligatory). I've got a thick skin, and I'd genuinely value your feedback, especially if you think I'm doing it wrong.

Alt text, title text and web content management

I've been reading Alvin Reyes' recent blog post about "alt" text for images, and how you should manage it in Tridion. That triggered me to think about a few of these issues, and I'd like to respond to Alvin's post, and perhaps also broaden out the discussion a bit.

The typical scenario he describes is that you have a "content" component which links to a multimedia component representing an image. In the web site output, of course, the alt text needs to be associated with the image, but Alvin raises some questions over where we should manage this data in the CMS. He starts off by saying that the "Common practice" (of putting the alt text in the metadata of the multimedia component) is wrong, and that instead you should put the alt text in the content component. OK - so he then goes on to show that he understands the practical benefits of keeping the alt text with the image, and suggests a couple of other approaches, including putting the data in both places.

What Alvin describes may indeed be good practice for some organisations, but so much depends on the requirements. I think perhaps the most important takeaway from this discussion might be that unless you can elicit accurate requirements, you are going to get this wrong. Let's face it, the "common practice" technique is pretty solid. You have metadata that describes an image, so you manage it with the image. This isn't just an 80:20 rule, it's a 99% rule. The cases where you need multiple descriptions of the same image are rare in practice. Perhaps the obvious exception is where you're using the image as a link. Stop there a second... I mean the case where you're using a meaningful image to link to something and the description of your linking image is inappropriate for your link target.

OK - before I get carried away, let's deal with this. Firstly - there ought to be various distinct multimedia schemas in your site, intended for different purposes. Having a single "Image" schema is probably an anti-pattern. Tridion schemas have great support for constraining the choice of multimedia schema in helpful ways. (Although maybe it's weaker in Rich Text format areas). If you really, truly have a site where simple 80:20-rule alt text down on the bare metal doesn't work, then you can probably isolate the difficult cases to a particular image type or types. Obviously, you will want a schema for layout images (Lime green bullet, gothic top left corner, and so forth.) This schema should not allow for alt text, and the templating should always emit an empty alt attribute.

Then let's imagine we have a News schema, which allows for a relevant picture. When displaying the detail view of the news item, you obviously want the alt text that describes the image. "President Obama concedes defeat to the President of the NRA, at their meeting at the White House". When you render the thumbnail variant of the same image in a list of news items, the alt text will tell you that it's a link, and use the title of the news item. "Link to: Hope dies for gun control as Obama confirms his commitment to 2nd Amendment". You don't need to store any extra metadata to do this. Your multimedia schema (News event image) has exactly the metadata it needs, and your news item schema allows you to use multimedia components of this schema for adding a picture of the news event being described. (Should you also have a distinct schema for pictures whose only purpose is to look nice?)

Of course, there are sites where a high value is placed on accessibility, and where they'll do everything. Often these are government sites, or those of large enterprises that can afford the investment in keeping a squeaky clean image on how they treat the disabled. This still isn't the majority, though - by any means. The rest don't want to pay for it, and by that, I don't just mean they don't want to pay for the extra complexity in implementing all the bells and whistles. Perhaps even more to the point, they don't want to invest the extra effort that it will cost their "content" department to actually work with all this extra detail. To achieve an accessible web site, you need more than just clued-in technologists. The web content people need a pretty sophisticated understanding of the issues too. For alt text, they need to know when it should be empty, and they need to know how to write it with the right amount of context when it is needed. Generally, if you have optional fields in your schema, you'll need fairly good editorial control to ensure that they get filled in at all. When your implementation has some sort of fallback mechanism that puts in a default when you leave it empty, how are you going to deal with that? An extra checkbox next to the alt text field that says "Yes, I really meant to leave it empty"? (I'm joking, but if you're doing the site for a blind people's charity, you might even go to these extremes.) So even with all the complexity built in to your implementation, you're still going to have to send all the content workers on courses where they get to play with screen readers and live for a day like the visually disadvantaged part of their audience, and then you're going to have to repeat this often enough to keep it real next year, and the year after.

So - most organisations aim way lower. If I had a Euro for every professional web content worker I've met who didn't know the difference between title text and alt text, I could probably go on a nice holiday. This is by no means a tirade against the content workers. Most of them are working very, very hard just keeping their sites updated, and it's no wonder they just want the CMS implementation to magically take care of all that accessibility stuff. If you put a separate field for alt text and title text, or a separate field for alt text in a different context, they will probably write something straightforward, paste it in all those places, and then curse you (again) for an idiot for making them do that last step (again). And then they'll go home and tweet that they hate Tridion.

So again, it's about the requirements. Requirements, requirements, requirements! If your organisation is very, very keen on getting this stuff right, then your shiny new implementation isn't going to help them unless they plan for those training sessions for the web team, and then allow the content team to adjust their estimates on every new piece of content to allow for all that extra effort... It's half past four in the afternoon, and someone in marketing or communications has emailed them a Word document with the text of the press release that absolutely must hit the site at midnight tonight. Knocking-off time is half past five, but what the heck, we'll stay late to show commitment, but really, if I'm staying late it had better be for something more profound than faffing around with the third variation of the alt text. The chief editor might just bother to look for a tooltip on the image if they are on the ball, and the rest of the team are already in the pub. Whatever!

So really - if you are in that elite group that wants to do full-on accessibility, and understands and is willing to deal with the implications, good for you. Alvin's "ultimate practice" might be for you, but that'll be OK, because the team will know enough to be able to work with it. If, on the other hand, you want to do the right thing (so presumably you'll go at least as far as some moderately good alt text) the best bet might be to just stick with putting the alt text in the image component, and re-using this text for the title attribute. At least, make sure you have the requirements discussion, because the costs are very clearly not just one-off implementation costs. Even that copy-paste-paste-paste cycle will cost them time and patience every single day. Anyway - my point is that the web content management implementation needs to support the actual practices that people want or expect to follow in their day to day work.

While we're on the subject... when exactly was the last time you sat down with the person responsible for the web site and discussed the need for quote tags, or longdesc attributes? Isn't it about time?

Debugging 64 bit Tridion content delivery on IIS 7.5

I'm currently developing a web application which will run on Windows 2008 R2 and which is intended to run in a 64 bit application pool. This means that I'm running IIS 7.5, and that the web application is installed with the 64 bit versions of the Tridion content delivery assemblies. As you'll know if you've tried to run this kind of web application in a 32 bit process, you pretty soon get exceptions telling you that you have an invalid format. This gets a little inconvenient if you just start to debug your web application in Visual Studio. By default, if you have a page selected, and hit the big green Run triangle, the page will launch in IIS Express. If you have IIS 7.5, then IIS Express runs a 32 bit process, so the default setup just isn't going to work for you.

So - what to do? I had two options:

- Configure the properties of the web application to debug using IIS rather than IIS Express

- Launch the web page directly from the browser, and attach the debugger to the correct w3wp.exe process.

To be honest, the second of these was the choice that most matched my usual debugging approaches. Having said that, I did try the first approach, but so far without success. Visual Studio 2012 has frozen on me a few times while trying this. I'm interested if anyone has any tips on getting this working, but right now, I'm happy enough that I was able to succeed in attaching a debugger to w3wp.exe.

My biggest challenge was to figure out which process I wanted to attach to. On my development server, I have quite a few web sites running, and it's not altogether obvious which w3wp.exe to attach to. Attaching to them all might work in a trivial case, but realistically, it takes quite a while to load all the dlls, and adding any more processes than necessary is just going to hurt too much. So - how do you find out which process it is?

The first step is to ensure you have the IIS powershell provider installed on your server. These days, this is shipped as a module, so if it's available on your system, you should be able to open a powershell and type:

Get-Module -ListAvailable

If the response includes "WebAdministration" you are good to go. Just import the module as follows:

Import-Module WebAdministration

If this succeeds, you should be able to "change directory" into the IIS provider. (Although a PowerShell purist might prefer set-location... whatever floats your boat!)

cd IIS:

If you can't find the module, then go into the Server manager, and check that you have the relevant role services for IIS installed. On other platforms, you might find that you can install it from the WebInstaller from the MSDN web site.

Now you're ready to find the process that you want to attach to. Assuming that your application pool is called "MyApplicationPool", you can list its worker processes like this (or use "dir" or "ls", either of which is an alias for "gci"):

> gci IIS:\AppPools\MyApplicationPool\WorkerProcesses

Process  State      Handles  Start Time
Id
-------- -----      -------  ----------
2608     Running    776      1/2/2013 6:55:33 PM

This assumes, of course, that your app pool is actually running, but you'd have made sure it was before trying to debug it, right? Anyway - as you can see, the process id is there just to read off, and you can get straight on with your debugging session.
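If you'd rather script the lookup than read it off the screen, here is a minimal sketch of an alternative route. It assumes you capture the text output of IIS's own appcmd tool (appcmd list wp), which to my knowledge prints one line per worker process in the form WP "2608" (applicationPool:MyApplicationPool). The function name, the second pool and its process id are made up for illustration.

```python
import re

# Expected line shape (an assumption about appcmd's text output):
#   WP "2608" (applicationPool:MyApplicationPool)
WP_LINE = re.compile(r'^WP "(?P<pid>\d+)" \(applicationPool:(?P<pool>.+)\)$')


def worker_pids(appcmd_output):
    """Map each application pool name to the list of its w3wp.exe process ids."""
    pids = {}
    for line in appcmd_output.splitlines():
        match = WP_LINE.match(line.strip())
        if match:
            pids.setdefault(match.group("pool"), []).append(int(match.group("pid")))
    return pids


if __name__ == "__main__":
    # Hypothetical captured output; only the first line mirrors the example above.
    sample = (
        'WP "2608" (applicationPool:MyApplicationPool)\n'
        'WP "4012" (applicationPool:SomeOtherPool)\n'
    )
    print(worker_pids(sample).get("MyApplicationPool"))
```

The parsing is kept separate from running the tool, so it can be tried out anywhere; on the server itself you would feed it the real output.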

Mysterious 404 errors showing up in the Tridion message centre

Today I spent some time setting up a Tridion 2011 Content Manager server. In fact, the content manager had already been installed and had been working fine. Then we'd installed Microsoft Search Server. OK - so it's quite unusual to be doing quite so much all on one server, but this is a customer with minimal needs. Not everyone has 200 servers in the rack! Although Search Server is packaged as a product in its own right, it's built on SharePoint, and when you install it, it seems to bring two thirds of SharePoint with it, including 2 MSSQL instances and three web sites. So to get the benefit of Microsoft's "free" search services, we'll probably have to configure another couple of gigs of RAM. (SFX: Sound of a cash register going "ca-ching" at VMware headquarters)

Anyway to be fair, the search solution looks pretty good and it definitely does what it says on the box, although it's got about a hundred configuration screens (I haven't actually counted them, though). Well anyway - we'd installed this beast on our previously working Tridion server, and most things were going OK. Until I did an IISRESET, and then suddenly the Tridion CME started to complain about a 404 problem. So when you started the CME, you'd get error messages like:

The remote server returned an error (404) not found

On examining the message centre, I found this message 6 times, along with "Loading list of languages failed" and "Loading list of locales failed". Sure enough, the relevant drop-downs in the User preferences are not populated.

When I F12'd the browser (is there a verb, to F12? There should be), I could see that the browser wasn't seeing any responses with HTTP status 404. So what was going on?

After digging a bit on the server, I found that there were entries in the web server log like this:

2012-12-19 12:59:41 ::1 POST /WebUI/Models/CME/Services/General.svc/GetListCustomPages - 80 BLAH\Administrator ::1 - 404 0 0 58
2012-12-19 12:59:41 ::1 POST /WebUI/Models/CME/Services/General.svc/GetListFavorites - 80 BLAH\Administrator ::1 - 404 0 0 62
2012-12-19 12:59:41 ::1 POST /WebUI/Models/CME/Services/General.svc/GetListSystemAdministration - 80 BLAH\Administrator ::1 - 404 0 0 15
2012-12-19 12:59:41 ::1 POST /WebUI/Models/TCM54/Services/Lists.svc/GetList - 80 BLAH\Administrator ::1 - 404 0 0 30
2012-12-19 12:59:41 ::1 POST /WebUI/Models/TCM54/Services/Lists.svc/GetListEnumerationValues - 80 BLAH\Administrator ::1 - 404 0 0 5
2012-12-19 12:59:41 ::1 POST /WebUI/Models/TCM54/Services/Lists.svc/GetListEnumerationValues - 80 BLAH\Administrator ::1 - 404 0 0 8
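On a busier server you might not want to eyeball the log. Here is a minimal sketch that counts 404 responses per URL. It assumes the field layout seen in the entries above (URL in the fifth column, HTTP status in the eleventh); a real IIS log declares its layout in a "#Fields:" header line, so check that before trusting the column positions. The function and constant names are my own.

```python
from collections import Counter

# Column positions assumed from the log entries shown above:
# date time s-ip method uri-stem uri-query port username c-ip user-agent status ...
URI_FIELD = 4
STATUS_FIELD = 10


def count_404s(log_text):
    """Count 404 responses per request URL in W3C-style log text."""
    counts = Counter()
    for line in log_text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and header directives
        fields = line.split()
        if len(fields) <= STATUS_FIELD:
            continue  # not a complete entry in the assumed layout
        if fields[STATUS_FIELD] == "404":
            counts[fields[URI_FIELD]] += 1
    return counts


SAMPLE_LOG = r"""
2012-12-19 12:59:41 ::1 POST /WebUI/Models/TCM54/Services/Lists.svc/GetList - 80 BLAH\Administrator ::1 - 404 0 0 30
2012-12-19 12:59:41 ::1 POST /WebUI/Models/TCM54/Services/Lists.svc/GetListEnumerationValues - 80 BLAH\Administrator ::1 - 404 0 0 5
2012-12-19 12:59:41 ::1 POST /WebUI/Models/TCM54/Services/Lists.svc/GetListEnumerationValues - 80 BLAH\Administrator ::1 - 404 0 0 8
"""

if __name__ == "__main__":
    for uri, hits in count_404s(SAMPLE_LOG).most_common():
        print(hits, uri)
```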

So I could see from here that the errors were taking place when the CME web application made a local call-back on the server to its own service layer. A bit more poking around showed that the problem was displayed whenever the CME made a callback to a service.

So what was going on? (Did I ask that already?)

It turned out that installing large portions of SharePoint had had the undesired effect that the Tridion CME web site no longer owned the default binding. We had a host header binding mapped in IIS, and you could reach this just fine, but since the install, traffic aimed at 'localhost' was going to the wrong web site. Actually, Tridion has got this covered, because in the WebRoot Web.Config there's an app setting called "Tridion.WCF.RedirectTo". This was pointing to localhost (which had worked fine when the server was first installed). So when the CME tried to make calls back to the Model services, it was aiming these calls at localhost, which of course, ended up in the SharePoint site and a 404.

We fixed the immediate problem by editing the IIS bindings, but we're considering whether it might be good practice to always configure Tridion.WCF.RedirectTo to go to the name of your site, and not to localhost.
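If you wanted to check this setting across several servers, a minimal sketch along the following lines might do. It assumes the setting lives in the standard appSettings section as an add element with key and value attributes; the exact format of the value isn't shown here, so the sample value below is purely illustrative, as are the function names.

```python
import xml.etree.ElementTree as ET

SETTING_KEY = "Tridion.WCF.RedirectTo"


def redirect_target(web_config_xml):
    """Return the value of the RedirectTo app setting, or None if it is absent."""
    root = ET.fromstring(web_config_xml)
    for setting in root.findall("./appSettings/add"):
        if setting.get("key") == SETTING_KEY:
            return setting.get("value")
    return None


def points_at_localhost(value):
    """True when the configured target mentions localhost."""
    return value is not None and "localhost" in value.lower()


# Illustrative fragment only: the real Web.Config contains much more,
# and the value format here is an assumption.
SAMPLE_CONFIG = """
<configuration>
  <appSettings>
    <add key="Tridion.WCF.RedirectTo" value="localhost" />
  </appSettings>
</configuration>
"""

if __name__ == "__main__":
    target = redirect_target(SAMPLE_CONFIG)
    if points_at_localhost(target):
        print("RedirectTo points at localhost; check who owns the default binding")
```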

The relevant Tridion documentation is here,

Tridion Explorer reports System.ServiceModel.ServiceActivationException

I'd been noticing strange messages popping up in the message centre of the SDL Tridion Explorer. The messages were about some service call failing with a 500 status and System.ServiceModel.ServiceActivationException, and seemed to be coming from various service points under C:\Program Files (x86)\Tridion\web\WebUI\Models\TCM54\Services. Here's an example:

/WebUI/Core/Services/Communicator.svc/Invoke failed to execute. STATUS(500): System.ServiceModel.ServiceActivationException

Not all the time, just occasionally when I did certain things. The thing that got me irritated enough to do something about it was when I wanted to delete a list of old versions of some items, and the multiple items functionality was breaking, and throwing up these messages. I could delete them one item at a time, but not all together. I suspect you can get problems with other things too, looking at the list of services that are served the same way from Models\TCM54\Services, and I think I remember also having problems with publishing and where-used.

A bit of Googling pointed me in the right direction, and I ended up after a couple of false starts editing: C:\Program Files (x86)\Tridion\web\WebUI\WebRoot\Web.Config

<serviceHostingEnvironment>

<baseAddressPrefixFilters>

<add prefix="http://localhost/"/>

</baseAddressPrefixFilters>

</serviceHostingEnvironment>