Dominic Cronin's weblog

Is it the end of the component presentation?

Among the most interesting talks at the recent Tridion Developer Summit was one by Raimond Kempees and Anton Minko, in which they looked into their crystal ball to give some hints about the direction content delivery is going in. In brief, the news is that Tridion R&D are now following through on the consequences of recent changes in the way web content management is done. The market is demanding headless Web CMS systems, and although Tridion's current offering is fully able to punch its weight as a headless system, the focus looks as though it's going even further in that direction. Buzzwords aside, the reality is that templating is moving further and further away from the content manager, and will probably end up being done largely in the browser.

This blog post is not about the death of the server-based web application: that might be a little premature, but it's really clear that we won't be doing our templating on the content manager. Apart from legacy work, this is already the case for most practical purposes. If you are using a framework like DXA, you will most likely not wish to modify the templating that comes with the framework. The framework templating doesn't render output, but presents the data from your components and pages in a generic format such as JSON. Assuming that all the relevant data is made available, you shouldn't ever need to intervene at this stage in the proceedings. Any modifications or customisations you may wish to make will probably be done in C#, and not in a templating language. (Tridion's architecture is very flexible, and I'm talking here about where mainstream use of the product is going. There are still organisations whose current work looks very different to this, and they have their own very good reasons for that.)

So the templating has already moved out to DXA, and there's an advanced content service which offers JSON data which you specify in GraphQL queries. Architectural decisions in your project are still likely to be about what should happen on the server and what in the browser, but you won't be doing much on the content manager beyond, erm... managing content.

Among the interesting new directions sketched out in the talk were the following:

- A "native" data format for publishing. Effectively - instead of templates generating JSON, Tridion itself will do this, so you won't need templates any more.

- A fast publishing pipeline to ensure that the content gets from the content manager to the content service in a highly efficient manner.

All well and good, but somewhere in there, almost as a throwaway line, they touched on the end of the component presentation. Well, it's kind of a logical conclusion in some ways, but when they mentioned this, I had a kind of "whoa" moment. So don't throw the baby out with the bath water, guys!

Seriously - for sure, if we don't have component templates any more, we can't really have component presentations, can we? Well yes, of course, we can, and both DD4T and DXA have followed the route of using good-old-fashioned page composition in Tridion, with the editors selecting component templates to indicate how they'd like to see the component rendered. In practice, all the component templates are identical, but the choice serves to trigger a specific "view" in the web application. The editorial experience remains the same as ever, and everyone knows what they are doing.

So in practice, instead of a page being a list of component presentations, it's become a list of components, with some sort of metadata indicating the choice of view for each component. That metadata doesn't need to be a component template, and you can see why they'd want to tidy this up. If you're writing a framework, you use the mechanisms available to you, but Tridion R&D can make fundamental changes when it's the right thing to do.

So they could get rid of component presentations. My initial reaction was to think they'd still have to do something pretty similar to "page composition as we know it", but that got me thinking. We now have page regions, which effectively turn a page from one list into a number of smaller lists. With this in place, it comes down to the fundamental reasons that we have traditionally used different component presentations. It's very common to have page template/view logic that does something like: get all the link-list components and put them in the right-hand side bar, or get the main component and use it to render the detail view in the main content area, or get all the content components and put them in the main content area. These very common use patterns are easily coped with by using regions, but maybe there are other cases where it would still be handy to specify your choice of view for a given component.

Actually, a lot of our work in page templates over the years has been about working around the inflexibility of a page being simply a list of component presentations. We've written logic that switched on which schema it was, or maybe a dozen other things, to achieve the results we needed to. Still, that simple familiar model has a lot to be said for it, and I suspect there are cases where regions on their own probably aren't enough.

I've really appreciated the way product managers at Tridion have reached out to the community in recent times to validate their ideas and share inspiration. I have every confidence that the future of pages in a world without component presentations will be the subject of similar consultations. The Tridion of two or three versions hence might look a lot different, but it will probably also have a lot of familiar things. It's all rather exciting.

Discovering content delivery environment capabilities... on Tridion practice

I recently wrote a script to query the capabilities of a Tridion content delivery environment. Rather than post it here, I've put it up on Tridion Practice. After all, it's about time "practice" got a bit of love. I think I'll try to get a bit more fresh material up there soon.

I hope you'll find the script useful. Dare I also hope that some of you might be inspired to contribute something to Tridion Practice? It's always been the intention that we'd try to get contributions from a range of practitioners, so please feel welcome. Cookbook recipes are probably the most prominent part of the site, but the patterns and practices sections are also interesting. Come on down!

Peering behind the veil of Tridion's discovery service

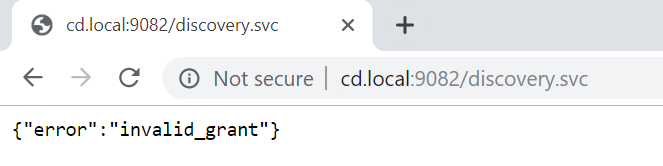

Many of us have configured applications that use Tridion's discovery service to find the various other content delivery services. All these services can be secured using OAuth, and the discovery service itself is no exception. It's almost a rite of passage to point a browser at the discovery endpoint only to see something like this:

In order to be allowed to use the discovery service, you need to provide an Authorization header when you call it, and otherwise, the "invalid_grant" error is what you get. Some of you may remember a blog post of mine from a few years ago where I showed how to call the content service with such a header. The essence of this was that first you call the token service with some credentials, and it hands you back a token that you can use in your authorization header.

That was when Web 8 was new, and I remember wondering, given that you only need to provide a discovery endpoint, how you could arrive at the location of the token service in order to get a token in the first place. I've seen enough code in the intervening period that takes a very pragmatic line and just assumes that the token service is to be found by taking the URL of the discovery service and replacing "discovery.svc" with "token.svc". That works, but it's somehow unsatisfactory. Wasn't the whole point of these service architectures that things should be discoverable and self-describing? Back then I'm fairly sure, after a cursory glance at https://www.odata.org/, I tried looking for the $metadata properties, but with the discovery service that just gets you an invalid grant.

I recently started looking at this stuff again, and somehow stumbled on how to make it work. (For the life of me, I can't remember what I was doing - maybe it's even documented somewhere.) It turns out that if you call the discovery service like this:

http://cd.local:9082/discovery.svc/TokenServiceCapabilities

it doesn't give you an invalid grant. Instead it gives you an OData response like this:

<?xml version="1.0" encoding="UTF-8"?>
<feed
    xmlns:metadata="http://docs.oasis-open.org/odata/ns/metadata"
    xmlns:data="http://docs.oasis-open.org/odata/ns/data"
    xmlns="http://www.w3.org/2005/Atom" metadata:context="http://cd.local:9082/discovery.svc/$metadata#TokenServiceCapabilities" xml:base="http://cd.local:9082/discovery.svc">
  <id>http://cd.local:9082/discovery.svc/TokenServiceCapabilities</id>
  <title></title>
  <updated>2019-09-04T19:28:36.916Z</updated>
  <link rel="self" title="TokenServiceCapabilities" href="TokenServiceCapabilities"></link>
  <entry>
    <id>http://cd.local:9082/discovery.svc/TokenServiceCapabilities('DefaultTokenService')</id>
    <title></title>
    <summary></summary>
    <updated>2019-09-04T19:28:36.916Z</updated>
    <author>
      <name>SDL OData v4 framework</name>
    </author>
    <link rel="edit" title="TokenServiceCapability" href="TokenServiceCapabilities('DefaultTokenService')"></link>
    <link rel="http://docs.oasis-open.org/odata/ns/related/Environment" type="application/atom+xml;type=entry" title="Environment" href="TokenServiceCapabilities('DefaultTokenService')/Environment"></link>
    <link rel="http://docs.oasis-open.org/odata/ns/relatedlinks/Environment" type="application/xml" title="Environment" href="TokenServiceCapabilities('DefaultTokenService')/Environment/$ref"></link>
    <category scheme="http://docs.oasis-open.org/odata/ns/scheme" term="#Tridion.WebDelivery.Platform.TokenServiceCapability"></category>
    <content type="application/xml">
      <metadata:properties>
        <data:id>DefaultTokenService</data:id>
        <data:LastUpdateTime metadata:type="Int64">1566417662739</data:LastUpdateTime>
        <data:URI>http://cd.local:9082/token.svc</data:URI>
      </metadata:properties>
    </content>
  </entry>
</feed>

Looking at this, you can just reach into the "content" payload and read out the URL of the token service. So that explains the mystery, even if the possibility of doing a replace on the discovery service URL also counts as sufficient to render it unmysterious.
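For anyone who would rather do this in code than by eye, here is a minimal Python sketch of reading the token service URL out of a response shaped like the one above. The function name and the trimmed sample feed are my own illustration; the namespaces and element names are copied from the response. It parses a string and makes no HTTP call, so fetching the feed is left to you.

```python
import xml.etree.ElementTree as ET

# Namespace URIs copied from the discovery response shown above.
NS = {
    "atom": "http://www.w3.org/2005/Atom",
    "m": "http://docs.oasis-open.org/odata/ns/metadata",
    "d": "http://docs.oasis-open.org/odata/ns/data",
}


def token_service_uri(feed_xml):
    """Return the data:URI value of the first entry in an OData Atom feed."""
    root = ET.fromstring(feed_xml)
    node = root.find("atom:entry/atom:content/m:properties/d:URI", NS)
    if node is None or not node.text:
        raise ValueError("no URI property found in feed")
    return node.text.strip()


# Trimmed copy of the response above, kept inline so the sketch runs offline.
SAMPLE_FEED = """
<feed xmlns:metadata="http://docs.oasis-open.org/odata/ns/metadata"
      xmlns:data="http://docs.oasis-open.org/odata/ns/data"
      xmlns="http://www.w3.org/2005/Atom">
  <entry>
    <content type="application/xml">
      <metadata:properties>
        <data:id>DefaultTokenService</data:id>
        <data:URI>http://cd.local:9082/token.svc</data:URI>
      </metadata:properties>
    </content>
  </entry>
</feed>
"""

if __name__ == "__main__":
    print(token_service_uri(SAMPLE_FEED))
```

Raising an error when the property is missing is a deliberate choice here: silently falling back to the "replace discovery.svc with token.svc" trick would hide a misconfigured environment.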

Anyway - who knew? I certainly didn't. Is this a better or more correct way of getting to the token service? I really don't know. Answers on a postcard?

Encrypting passwords for Tridion content delivery... revisited

A while ago I posted a "note to self" explaining that in order to use the Encrypt utility from the Tridion content delivery library, you needed to put an extra jar on your classpath. That was in SDL Web 8.5. This post is to explain that in SDL Tridion Sites 9, this advice still stands, but the names have changed.

But first, why would you want to do this? Basically it's a measure to prevent your passwords being shoulder-surfed. Imagine you have a configuration file with a password in it like this:

<Account Id="cduser" Password="${cduserpassword:-CDUserP@ssw0rd}">
  <Metadata>
    <Param Name="FirstName" Value="CD"/>
    <Param Name="LastName" Value="User"/>
    <Param Name="Role" Value="cd"/>
    <Param Name="AllowedCookieForwarding" Value="true"/>
  </Metadata>
</Account>

You might not want everyone who passes by to see that your password is "CDUserP@ssw0rd". Much better to have something like encrypted:o/cgCBwmULeOyUZghFaKJA==

<Account Id="cduser" Password="${cduserpassword:-encrypted:o/cgCBwmULeOyUZghFaKJA==}">
  <Metadata>
    <Param Name="FirstName" Value="CD"/>
    <Param Name="LastName" Value="User"/>
    <Param Name="Role" Value="cd"/>
    <Param Name="AllowedCookieForwarding" Value="true"/>
  </Metadata>
</Account>

Actually - with the possibility to do token replacement, I do wonder why you need a password in your config files at all, but that's not what this post's about.

The thing is that the jar file that used to be called cd_core.jar is now called udp-core.jar and cd_common_util.jar has become udp-common-util.jar. Actually this is a total lie, because in recent versions of Tridion all the jars have versioned names, as you'll see in the example I'm about to show you. One of these jars is to be found in the lib folder of your services, and the other in the services folder, so you might find it's easier just to copy them both to the same directory, but this is what it looks like doing it directly from the standalone folder of discovery:

PS D:\Tridion Sites 9.0.0.609 GA\Tridion\Content Delivery\roles\discovery\standalone> java -cp services\discovery-service\udp-core-11.0.0-1020.jar`;lib\udp-common-util-11.0.0-1022.jar com.tridion.crypto.Encrypt foo
Configuration value = encrypted:6oR074TGuXmBdXM289+iDQ==

Note that here I've escaped the semicolon from PowerShell with a backtick, but you can just as easily wrap the whole cp argument in quotes. Please note that I do not recommend the use of foo as a password. Equally, please don't use this encryption as your only means of safeguarding your secrets. It raises the bar a bit for the required memory skills of shoulder surfers, and that's about it. It's a good thing, but don't let it make you complacent. You also need to follow standard industry practices to control access to your servers and the data they hold. Of course, this is equally true of any external provisioning systems you have.

Discovery service in Tridion Sites nine has two storage configs

I just got bitten by a little "gotcha" in SDL Tridion Sites 9. When you unpack the installation zip, you'll find that in the Content Delivery/roles/Discovery folder, there's a separate folder for registration, with the registration tool and its own copy of cd_storage_conf.xml. The idea seems to be that running the service and registering capabilities are two separate activities. I kind of get that. When I first saw the ConfigRepository element at the bottom of Discovery's configuration, I felt like it had been shoehorned into a somewhat awkward place. Yet now, it seems even more awkward. So sure, both the service and the registration tool need access to the storage settings for the discovery database, while only the registration tool needs the configuration repository.

The main difference seems to be one of security. The version of the config that goes with the registration tool has the ClientId and ClientSecret attributes while the other doesn't. This, in fact, is the gotcha that caught me out; I'd copied the storage config from the service, and ended up being unable to perform an update. The error output did mention being unable to get an OAuth token, but I didn't immediately realise that the missing ClientId and ClientSecret were the reason. Kudos to Damien Jewett for his answer on Stack Exchange, which saved me some hair-pulling.
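A quick pre-flight check would have saved me the trouble. The following Python sketch reports which of the two attributes are absent from a storage config before you hand it to the registration tool. The function name is hypothetical and the two XML fragments are made up for the test, not copied from the shipped files; rather than assume which element carries the attributes, the sketch looks at every element.

```python
import xml.etree.ElementTree as ET

REQUIRED = ("ClientId", "ClientSecret")


def missing_oauth_attributes(storage_conf_xml):
    """Return the names from REQUIRED that no element in the config carries."""
    root = ET.fromstring(storage_conf_xml)
    present = set()
    for element in root.iter():
        present.update(name for name in REQUIRED if name in element.attrib)
    return [name for name in REQUIRED if name not in present]


# Made-up fragments for illustration only; real configs are far larger.
WITHOUT_CREDENTIALS = """
<Configuration>
  <ConfigRepository ServiceUri="http://localhost:8082/discovery.svc"/>
</Configuration>
"""

WITH_CREDENTIALS = """
<Configuration>
  <ConfigRepository ServiceUri="http://localhost:8082/discovery.svc"
                    ClientId="example-client" ClientSecret="example-secret"/>
</Configuration>
"""

if __name__ == "__main__":
    print(missing_oauth_attributes(WITHOUT_CREDENTIALS))
```

An empty list means both attributes were found somewhere in the file; it says nothing about whether the values are correct.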

I'm left wondering if this is the end game, or whether a future version will see some further tidying up or separation of concerns.

EDIT: On looking at this again, I realised that even in 8.5 we had two storage configs. The difference is that in 8.5 both had the ClientId and ClientSecret attributes.

Enumerating the Tridion config replacement tokens

OK - I get it. It's starting to look like I've got some kind of monomania regarding the replacement tokens in Tridion config files, but bear with me. In my last blog post, I'd hacked out a regex that could be used for replacing them with their default values, but had thought better of actually doing so. But still, the idea of being able to grab all the tokens has some appeal. I can't bear to waste that regex, so now I'm looking for a reasonable use for it.

It occurred to me that at some point in an installation, it might be handy to have a comprehensive list of all the things you can pass in as environment variables. Based on what I'd done yesterday this was quite straightforward:

gci -r -include *.xml -exclude logback.xml| sls '\$\{.*?\}' `
| select {$_.RelativePath((pwd))},LineNumber,{$_.Matches.value} `
| Export-Csv SitesNineTokens.csv

By going to my unzipped Tridion zip and running this in the "Content Delivery/roles" folder, I had myself a spreadsheet with a list of all the tokens in Sites 9. Similarly, I created a spreadsheet for Web 8.5. (As you can see, I've excluded the logback files just to keep the volume down a bit, but in real life, you might also want to see those listed.)
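If PowerShell isn't to hand, roughly the same listing can be produced elsewhere. This is a Python sketch of the approach just described, with hypothetical function names. It uses the same non-greedy pattern, but emits one row per token rather than one row per matching line, so row counts will not line up exactly with the PowerShell output.

```python
import csv
import re
from pathlib import Path

# Same non-greedy pattern as the PowerShell one-liner.
TOKEN = re.compile(r"\$\{.*?\}")


def find_tokens(root, exclude=("logback.xml",)):
    """Yield (relative path, line number, token) for each token under root."""
    root = Path(root)
    for path in sorted(root.rglob("*.xml")):
        if path.name in exclude:
            continue
        text = path.read_text(encoding="utf-8", errors="replace")
        for number, line in enumerate(text.splitlines(), start=1):
            for match in TOKEN.finditer(line):
                yield str(path.relative_to(root)), number, match.group(0)


def write_csv(rows, target):
    """Write the rows to a CSV file with a header line."""
    with open(target, "w", newline="", encoding="utf-8") as handle:
        writer = csv.writer(handle)
        writer.writerow(["RelativePath", "LineNumber", "Token"])
        writer.writerows(rows)


if __name__ == "__main__":
    # Run from the "Content Delivery/roles" folder, as with the original.
    write_csv(find_tokens("."), "SitesNineTokens.csv")
```

Like the original, this does not strip XML comments, so tokens inside commented-out examples are counted too.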

The first thing you see when comparing Sites 9 with Web 8.5 is that there are a lot more of the things. More than twice as many. (At this point I should probably confess to some possible inaccuracy, as I haven't gone to the trouble of stripping out XML comments, so there could be some duplicates.)

65 of these come from the addition of XO and another 42 from IQ, but in general, there are just more of them. The bottom line is that to get a Tridion system up and running these days, you are dealing with hundreds of settings. To be fair, that's simply what's necessary in order to implement the various capabilities of such an enterprise system.

One curious thing I noticed is that the ambient configs all have a token to allow you to disable OAuth security, yet no tokens for the security settings for the various roles. I wonder if this reflects the way people actually use Tridion.

Of course, you aren't necessarily limited by the tokens in the example configs of the shipped product. Are customers defining their own as they need them?

That's probably enough about this subject, though, isn't it?

Removing the replacement tokens from Tridion configuration files, and choosing not to

In SDL Web 8.5 we saw the introduction of replacement tokens in the content delivery configuration files. Whereas previously we'd simply had XML files with attributes and elements that we filled in with the relevant values, the replacement tokens allowed for the values to be provided externally when the configuration file is used. The commonest way to do this is probably by using environment variables, but you can also pass them as arguments to the java runtime. (A while ago, I wasted a bunch of time writing a script to pass environment variables in via java arguments. You don't need to do this.)

So anyway - taking the deployer config as my example, we started to see this kind of thing:

<Queues>
  <Queue Id="ContentQueue" Adapter="FileSystem" Verbs="Content" Default="true">
    <Property Value="${queuePath}" Name="Destination"/>
  </Queue>
  <Queue Id="CommitQueue" Adapter="FileSystem" Verbs="Commit,Rollback">
    <Property Value="${queuePath}/FinalTX" Name="Destination"/>
  </Queue>
  <Queue Id="PrepareQueue" Adapter="FileSystem" Verbs="Prepare">
    <Property Value="${queuePath}/Prepare" Name="Destination"/>
  </Queue>

Or from the storage conf of the disco service:

<Role Name="TokenServiceCapability" Url="${tokenurl:-http://localhost:8082/token.svc}">

So if you have an environment variable called queuePath, it will be used instead of ${queuePath}. In the second example, you can see that there's also a syntax for providing a default value, so if there's a tokenurl environment variable, that will be used, and if not, you'll get http://localhost:8082/token.svc.
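To make the rule concrete, here is a small Python sketch of the substitution as just described. It is only an illustration of the behaviour, not Tridion's implementation, and the function name is made up. Two details are my own assumptions: a token with no value and no default is left untouched, and a variable that is set but empty counts as set.

```python
import os
import re

# ${name} or ${name:-default}; the name may not contain ':' or '}'.
TOKEN = re.compile(r"\$\{([^}:]+)(?::-([^}]*))?\}")


def resolve_tokens(text, env=None):
    """Replace tokens with environment values, falling back to defaults."""
    env = os.environ if env is None else env

    def substitute(match):
        name, default = match.group(1), match.group(2)
        if name in env:
            return env[name]
        if default is not None:
            return default
        return match.group(0)  # assumption: leave unresolved tokens visible

    return TOKEN.sub(substitute, text)


if __name__ == "__main__":
    line = '<Role Name="TokenServiceCapability" Url="${tokenurl:-http://localhost:8082/token.svc}">'
    print(resolve_tokens(line, {}))
    print(resolve_tokens(line, {"tokenurl": "http://cd.local:9082/token.svc"}))
```

Passing the environment in as a dictionary keeps the sketch easy to try out without touching real environment variables.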

This kind of replacement token is very common in the *nix world, where it's taken to even further extremes. Most of this is based on the venerable Shell Parameter Expansion syntax.

All this is great for automated deployments and I'm sure the team running SDL's cloud services makes full use of this technique. For now, I'm still using my own scripts to replace values in the config files, so a recent addition turned out to be a bit inconvenient. In Tridion Sites 9, the queue Ids in the deployer config have also been tokenised. So now we have this kind of thing:

<Queue Default="true" Verbs="Content" Adapter="FileSystem" Id="${contentqueuename:-ContentQueue}">
  <Property Name="Destination" Value="${queuePath}"/>
</Queue>

Seeing as I had an XPath that locates the Queue elements by ID, this wasn't too helpful. (Yes, yes, in the general case it's great, but I'm thinking purely selfishly!) Shooting from the hip I quickly updated my script with an awesome regex :-) , so instead of

$config = [xml](gc $deployerConfig)

I had

$config = [xml]((gc $deployerConfig) -replace '\$\{(?:.*?):-(.*?)\}','$1')

About ten seconds after finishing this, I realised that what I should be doing instead is fixing my XPath to glom onto the Verbs attribute instead, but you can't just throw away a good regex. So - I present to you, this beautiful regex for converting shell parameter expansions (or whatever they are called) into their default values in PowerShell. In other words, ${contentqueuename:-ContentQueue} becomes ContentQueue.

How does it work? Here it comes, one piece at a time:

' single quote. Otherwise PowerShell will interpret characters like $ and {, which you don't want

\ a backslash to escape the dollar from the regex

$ the opening dollar of the expansion expression

\{ match the {, also escaped from the regex

(?:.*?) match zero or more of anything, non-greedily, and without capturing

:- match the :-

(.*?) match zero or more of anything non-greedily. No ?: this time so it's captured for use later as $1

\} match the }

' single quote

The second parameter of -replace is '$1', which translates to "the first capture". Note the single quotes, for the same reason as before

So there you have it. Now if ever you need to rip through a bunch of config files and blindly accept the defaults for everything, you know how. But meh... you could also just not provide any values in the environment. I refuse to accept that this hack is useless. A reason will emerge. The universe abhors a scripting hack with no purpose.
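One caveat on the regex above: because .*? will happily run across a closing brace, a line that holds a token without a default followed by one with a default is matched as a single span. The Python sketch below contrasts the pattern from this post with a stricter variant of my own that cannot cross a brace. The sample line is hypothetical; in the configs shown above, the two kinds of token sit on separate lines.

```python
import re

# The pattern from the post, unchanged.
LOOSE = re.compile(r"\$\{(?:.*?):-(.*?)\}")

# Stricter variant (an assumption, not from the post): the name may not
# contain '}' or ':', and the default may not contain '}'.
STRICT = re.compile(r"\$\{[^}:]*:-([^}]*)\}")


def accept_defaults(text, pattern=STRICT):
    """Replace each ${name:-default} with its default value."""
    return pattern.sub(lambda match: match.group(1), text)


if __name__ == "__main__":
    # Hypothetical line mixing both kinds of token.
    line = '<Property Value="${queuePath}/x" Name="${name:-Destination}"/>'
    print(accept_defaults(line, LOOSE))
    print(accept_defaults(line, STRICT))
```

With the loose pattern the ${queuePath} token and everything up to the default is swallowed; the strict one leaves ${queuePath} alone and only replaces the token that actually has a default.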

I'm an Idea Machine!

Apparently, I'm an "Idea Machine". Who'da thought it?

So.... the SDL community site just gave me the Idea Machine badge. Apparently you get this for submitting five ideas. Alright, alright - I'll get over myself, really. Just let me enjoy the moment!! :-)

But seriously... for all the nonsense of online achievements, badges and whatever... submitting ideas is a really good... um.. idea. Many of us are working with Tridion software day in and day out, and sometimes you come across something that could be better, or even just something a bit irritating. Chances are you aren't the only one who would appreciate an improvement. So do us all a favour, hold that thought and pop along to https://community.sdl.com/ideas/sdl-tridion-dx-ideas/sdl-tridion-sites-ideas/, or the relevant site for you, and post your idea.

Once your idea is in, people can vote it up or down and comment on it. You can also be sure that it will be seen by people from R&D and product management. The only down side of the whole thing is that they have an "ideation wiki". Ideation? Ideation? Really. Maybe they'll have to find some cweatives for that.

Oh what the heck?! Come on folks! Get ideating!!!

Out with the old, in with the new. How will 2019 look for Tridion specialists?

It's New Year's eve: a traditional time to look backwards and forwards. I've spent a little time contemplating these grand themes in the context of my life as a Tridion specialist. Where are we now and what will the new year bring?

Let's start with 2018. The major event this year, of course, was the release of SDL Tridion DX, incorporating SDL Tridion Sites 9. So the first thing you see is that we got the Tridion name back. Hurrah! OK - enough of that: getting the name back is good, but there are other things to get excited about.

Firstly - Tridion DX brought SDL's two content management systems together: the web content management system "formerly known as Tridion", and the SDL Knowledge Center. The new branding names the first "SDL Tridion Sites" and the second "SDL Tridion Docs". So now we have both the web content management and structured content management features in the same product. To be honest, I suspect at first the number of customers who want to combine the two will be small, but for companies that do need to straddle these two worlds, the integration provided by DX will be a killer feature. As time goes on, we'll probably find that having both approaches available helps to prevent the need to knock a round peg into a square hole in some implementations. It's also clear that this represents a significant engineering effort at SDL. They haven't just put everything in the same shrink-wrap, but for example, the content delivery architecture has had a major revamp to get the two systems to play nicely together. Even this got a new branding: the Unified Delivery Platform!

I suspect, though, that in 2019, I'll be mostly busy with pure WCM work. Sites 9 brings a raft load of enhancements that help to keep it current in the fast-moving world of modern web development. The most interesting is perhaps the new model service. We've seen a model service as part of the DXA framework, but Sites 9 has a "Public Content API", which boils down to a GraphQL endpoint. Tridion's architecture has always had great separation of concerns, so in many ways, it can take the current trend for headless sites in its stride, but a GraphQL service will make it easier to consume content directly, without having to build server-side support as part of your implementation. GraphQL allows you to specify exactly which data you want to get back, and will enable developers to ensure the data traffic between server and client is clean and lean.

There are also other interesting new features. A good example is regions within pages. In practice, the build-up of a web page is done this way - we have different areas of the page showing different kinds of content, and it's great to see that this kind of structure can now be modeled directly in the content manager. I'll stop there; there are far too many new features for a short blog post.

The Sites 9 release has meant a matching update (2.1) to the DXA framework, which is now using the new public content API and of course has support for the new page regions.

So going in to 2019, things are looking really great for anyone beginning a greenfield project on SDL Tridion. That's not the whole story, though. At the other end of the spectrum, there are always customers who are waiting for the right moment to upgrade from an older version. This might be the year when we finally say goodbye to our old friend vbScript. As I understand it, from the Sites 9 release onwards, the legacy support won't even install any more, so organisations that still have vbScript will be planning how to migrate before the next "major" release puts them out of support. To be fair to SDL, by my calculation it's 16 years since compound templating was introduced. That ought to be ample time, you'd have thought. Putting that a bit more positively, we now have very much better ways of doing things, and a Tridion 9/DXA 2.1 approach is a very much better place to be.

I suspect the other main themes for 2019 will be cloud computing and devops. As organisations move forward to the new product versions, they are also looking at their architectures and working practices. Fortunately, Tridion as a product is already highly cloud-capable, and the move away from templating on the content manager has definitely had an impact on how easy it is to implement continuous integration and delivery/deployment.

It will be a year of transition for many of our customers: not only the technical transitions that I've mentioned, to new architectures and techniques, but also for the business people who are looking to take the next step towards a unified on-line experience for their customers and visitors.

I'm looking forward to it. Bring it on!

A happy new year to you all.

Unboxing SDL Tridion Sites 9

It's Boxing Day, so I thought I'd treat myself by unboxing Tridion Sites 9, or more to the point, installing the Content Manager. Just to give a bit of context, this is not a production installation, but rather a "fifth environment". (Chris Summers once suggested this term for a developer's own setup, as distinct from the Development, Test, Acceptance and Production environments of a traditional delivery street. The name seems to have stuck.)

I'm installing on the Google Cloud Platform (GCP) so I selected a "SQL Server 2017 Standard on Windows Server 2016 Datacenter" with a standard 50GB boot disk. By default I got a system with 3.75GB of memory, but during installation I got a notification from GCP that this might not be enough, so I accepted the suggested upgrade to 4.75GB. I'm not sure if the installation process is sufficiently typical use to determine memory sizing, but well... 4 gigs is pretty small these days, eh? I'm trying to run this on a tight budget, but an extra gig of memory won't be what breaks the bank. (If anything turns out to be expensive, it will be the Windows license, but you pay by the second and I plan to be very disciplined about shutting things down when I don't need them.) The shoestring budget is why I chose a version that already has MSSQL installed. The last time I did this, I ended up running up two separate Windows Servers, but this time I'm just starting with the version with MSSQL, which might help to keep the costs down. Of course, in production, a separate database server or two would still make sense, but this is a research rig. As for where to run the database, I'm also looking at the dockerised version of MSSQL, which has some attractions, but to get going quickly, a Windows image with it installed will be fine.

For SDL Web 8.5 I already had some scripts that took care of most of the content manager installation. I'm pleased to say that these ran with only very minor modifications for Tridion 9. So, for example, the layout of the installer directory is relatively predictable, but the installer executable is now called SDLTridionSites9.exe. But let's start at the beginning. After the usual fuss trying to get the files I needed up to the cloud and available from my image, I was able to do the following:

- Run my script that kicks off all the Tridion database install scripts. Nothing very exciting here, just a rinse-and-repeat operation for most of the time. Having a script for this saves you typing the same input parameters a dozen times, and gives you an audit trail. Before I could do this, I had to install Microsoft SQL Server Management Studio and use it to set up the sa account as I wanted it. For content delivery I may well choose to put the databases somewhere else, but I'll definitely remember to read through this note to self I wrote a while ago.

- As it's an all-in-one install, it's necessary to disable the loopback check. If you're happy with a quick-and-dirty, this will get you there:

New-ItemProperty HKLM:\System\CurrentControlSet\Control\Lsa -Name DisableLoopbackCheck -Value 1 -PropertyType dword

- After that I ran my installation script. Mostly this is a question of passing some pre-determined parameters to the installer, mainly so it's repeatable. I do, however, do a couple of things first. One is to create the content manager system account (tridionsys, or mtsuser if you're old-fashioned). I also set up a couple of optional Windows features, like this:

$desiredFeatures = "IIS-ApplicationInit", `
                   "IIS-ASPNET", `
                   "IIS-HttpCompressionDynamic", `
                   "IIS-ManagementService", `
                   "Web-Mgmt-Service", `
                   "WAS-NetFxEnvironment", `
                   "Windows-Identity-Foundation"
Get-WindowsOptionalFeature -Online `
| ?{$desiredFeatures -contains $_.FeatureName -and -not ($_.State -eq 'Enabled')} `
| Enable-WindowsOptionalFeature -Online

The old installer had problems with installing the content manager and topology manager on the same port with distinct host headers. My installation approach involves letting the installer put them on separate ports and then running a separate script to fix things up how I like it. I haven't tested whether the new installer makes this unnecessary, as for now my priority is just getting a working system. Perhaps it's also interesting to test whether my pre-install fixup of Windows features is still necessary. UPDATE: I have now tested whether this problem still exists in the Sites 9 installer, and it does.

Anyway, I now have the content manager and topology manager running, and can move on to content delivery. My overall assessment so far is that it's pretty straightforward setup. I'm looking forward to my further adventures with Tridion Sites 9.