Dominic Cronin's weblog

Why can't I get my special characters to display properly?

Today there was a question (http://tridion.stackexchange.com/q/2891/129) on the Tridion Stack Exchange that referred to putting superscript characters in a non-RTF field in Tridion. I started to answer it there, but soon realised that my answer was for a rather broader question: "How can I figure out what's going on when funky characters don't display properly?"

- Install a good hex editor. I personally use HxD, a freeware tool: http://mh-nexus.de/en/

- Understand how UTF-8 works and be prepared to decode characters with a pencil and a sheet of paper. Make reference to http://www.ietf.org/rfc/rfc3629.txt and particularly the table on page 3. This lets you translate a sequence of UTF-8 bytes into the Unicode code point it represents.

- Use the code charts at www.unicode.org/charts to verify the character in Unicode.

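For example, suppose your editor shows you this sequence of bytes:

E2 84 A2

Write it out in binary:

11100010 10000100 10100010

The leading 1110 in the first byte tells you this is a three-byte sequence, and each of the following bytes starts with the continuation marker 10. Strip off those marker bits and join up what's left:

0010000100100010

That's 0x2122, and if you look up U+2122 in the code charts, you'll find it's the TRADE MARK SIGN (™).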
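The pencil-and-paper decoding above is mechanical enough to sketch in a few lines of JavaScript. This is a minimal illustration, assuming the input is a single, well-formed UTF-8 sequence: unlike a real decoder, it doesn't reject overlong forms or surrogate code points. `decodeUtf8Sequence` and `toUPlus` are made-up names.

```javascript
// Decode one UTF-8 byte sequence into a Unicode code point, following the
// table in RFC 3629. Minimal sketch: assumes a single well-formed sequence.
function decodeUtf8Sequence(bytes) {
  const first = bytes[0];
  let length, codePoint;
  if ((first & 0x80) === 0x00) {        // 0xxxxxxx: 1 byte (ASCII)
    length = 1; codePoint = first;
  } else if ((first & 0xE0) === 0xC0) { // 110xxxxx: 2 bytes
    length = 2; codePoint = first & 0x1F;
  } else if ((first & 0xF0) === 0xE0) { // 1110xxxx: 3 bytes
    length = 3; codePoint = first & 0x0F;
  } else if ((first & 0xF8) === 0xF0) { // 11110xxx: 4 bytes
    length = 4; codePoint = first & 0x07;
  } else {
    throw new Error("Not a valid UTF-8 lead byte: " + first.toString(16));
  }
  if (bytes.length !== length) {
    throw new Error("Expected " + length + " bytes, got " + bytes.length);
  }
  for (let i = 1; i < length; i++) {
    if ((bytes[i] & 0xC0) !== 0x80) {   // continuation bytes look like 10xxxxxx
      throw new Error("Bad continuation byte at position " + i);
    }
    codePoint = (codePoint << 6) | (bytes[i] & 0x3F); // append 6 payload bits
  }
  return codePoint;
}

// Format a code point the way the Unicode code charts do, e.g. "U+2122".
function toUPlus(codePoint) {
  return "U+" + codePoint.toString(16).toUpperCase().padStart(4, "0");
}

console.log(toUPlus(decodeUtf8Sequence([0xE2, 0x84, 0xA2]))); // U+2122
console.log(String.fromCodePoint(0x2122));                    // ™
```

Given the original question, a superscript two makes a good second test: the bytes C2 B2 decode to U+00B2.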

Why the "new" Tridion events system is a game-changer

When SDL released Tridion 2011, a lot had changed. So much so that the introduction of a new Events system was almost unremarkable. After all, they had to replace the old one, so there was a new one. Nothing to see here, move along now please. Most of the effort in those days went into a flurry of upgrades and ports of old-style events systems to the new architecture. So you might be forgiven if you hadn't ever stopped to think just how much of a difference the new architecture makes. Specifically - we now subscribe to events using a mechanism based on .NET multicast delegates. This has a couple of consequences.

Firstly, we are freed from the need to write dispatchers. To implement an events system with the old "COM+"-based system, you would implement an interface containing all the event handler methods, and register your implementation with a specific COM ProgID. Tridion would ask COM+ to instantiate an object of that ProgID, and merrily call into whichever of the interface methods were configured to be called. This meant there could only be one implementation. All your functionality had to be in that implementation, even if different parts of your system had different requirements. So if, for example, you were using Tridion for your Internet site and for your intranet, or for whatever other reason you were running diverse sites, then you'd need a dispatcher. This would be a simple events system implementation that did nothing more than pass on the calls to one of several different implementations, usually depending on configuration. So calls coming from your Internet publications would go to one DLL, and the ones from your intranet would go to another, but Tridion itself would only see one interface: that of your dispatcher. This was quite a pain. You could separate out different concerns this way, but you wouldn't want to do more than carve it up into very big chunks. Like I said - Internet and intranet, or maybe different customers or departments. Nothing more fine-grained than that anyway. The new events system meant we didn't need a dispatcher any more, and the "configuration" could mostly be baked into the code itself.

For myself (and I suspect for others), this was such a relief that it was enough. It wasn't until some time later that I realised that it was just a beginning. We'd got so used to limiting ourselves to big chunks that it didn't really sink in that we could really start slicing things up. The game-changer I referred to in the title of this piece is exactly that. We can slice it up as small as we want. OK - big deal, you might say - but if we can slice it up arbitrarily, then we can write an events system implementation for a single concern. And that means [ta-da!!] that we can start making re-usable modules that can just be "dropped in" on whatever project needs them. I recently wrote a Component Save event handler that enforces height and width constraints on multimedia components. It does one thing - that's all, so I can use it whenever I have that need. When I went to configure it, I noticed that on my research system I already have three other event handlers registered. These are all from Tridion, and belong to Audience Manager, UGC, and External Content Library respectively. Without looking, I don't know or care whether any of them subscribes to the Initiated phase of a Component Save. They can all co-exist.
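To make the contrast with the old single-dispatcher model concrete, here's a rough, language-neutral sketch in JavaScript. It is not Tridion's API (in Tridion 2011 you subscribe from .NET code), and `EventRegistry`, `subscribe` and `fire` are hypothetical names. The point is simply that each module registers its own handler for the subject, event and phase it cares about, and none of them needs to know the others exist.

```javascript
// Hypothetical multicast-style event registry, loosely modelled on the idea
// of subscribing delegates. Not the Tridion API; names are illustrative only.
class EventRegistry {
  constructor() {
    this.handlers = new Map(); // key "subject|event|phase" -> array of handlers
  }

  subscribe(subject, eventName, phase, handler) {
    const key = `${subject}|${eventName}|${phase}`;
    if (!this.handlers.has(key)) this.handlers.set(key, []);
    this.handlers.get(key).push(handler);
  }

  // Invoke every handler subscribed to this combination, in subscription order.
  fire(subject, eventName, phase, item) {
    const key = `${subject}|${eventName}|${phase}`;
    for (const handler of this.handlers.get(key) || []) {
      handler(item);
    }
  }
}

const events = new EventRegistry();

// Module 1: a single-concern handler enforcing image size limits.
// The limits are made up for the example.
events.subscribe("Component", "Save", "Initiated", (component) => {
  if (component.width > 1024 || component.height > 768) {
    throw new Error(`Image ${component.title} is too large`);
  }
});

// Module 2: an unrelated handler that happens to listen to the same event.
const auditLog = [];
events.subscribe("Component", "Save", "Initiated", (component) => {
  auditLog.push(`Saving ${component.title}`);
});

events.fire("Component", "Save", "Initiated", { title: "Logo", width: 200, height: 100 });
console.log(auditLog); // [ 'Saving Logo' ]
```

Neither module knows about the other, and adding a third is just another `subscribe` call: no dispatcher required.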

So now I'm looking forward to seeing a lot more (small and useful) events systems made available in the community - the days are gone when an events system only made sense for a single implementation.

Getting my VMware server to resolve DNS in a reasonable time

I have a Windows 2012 server that I run under VMware. I've probably mentioned this image before, as it's the one I use for my Tridion research. I'm fairly unusual in that I like to have my database server running "on the bare metal" of my laptop rather than in the Windows Server image. It's probably just perversity or masochism or whatever, but that's how I roll. What this means is that I have two network interfaces on the image: one configured as "Host only", which I use for my database connections and other "on the box" stuff, and another running NAT. Sure, you could run a development image completely isolated from the Internet, but it'd be a pain, so I run the NAT interface as well.

All good in theory, but as it turned out, it was a pain anyway, because it was taking 10 seconds to resolve a DNS name. Don't ask me why 10 seconds - presumably it was hitting some timeout and then trying an approach that worked better. Anyway - it was getting annoying. Sure, I could flip out of the image to run a browser outside, but nah! Apart from anything else, I hate to be irritated by things I don't understand. I don't mind having things I don't understand - gee, you'd go crazy! - but if it's an in-your-face irritation, that's another story.

So I poked around a bit. I could run:

[net.dns]::GetHostByName("www.yahoo.com")

in my PowerShell session on the image and it would take 10 seconds. Natively on the laptop - instant response. So I had a quick look in the VMware network settings. There are some obscure settings on the NAT interface for policies for automatically detecting DNS servers. But hang on - was it attempting to get DNS from the Host only interface, or the NAT one? So what would nslookup tell me:

```
PS C:\Users\Administrator> nslookup
Default Server: UnKnown
Address: 192.168.126.1

> www.yahoo.com
Server: [192.168.126.1]
Address: 192.168.126.1

DNS request timed out.
    timeout was 2 seconds.
DNS request timed out.
    timeout was 2 seconds.
DNS request timed out.
    timeout was 2 seconds.
DNS request timed out.
    timeout was 2 seconds.
*** Request to [192.168.126.1] timed-out
> server 192.168.146.2
Default Server: [192.168.146.2]
Address: 192.168.146.2

> www.yahoo.com
Server: UnKnown
Address: 192.168.146.2

Non-authoritative answer:
Name: ds-eu-fp3.wa1.b.yahoo.com
Addresses: 2a00:1288:f00e:1fe::3000
           2a00:1288:f006:1fe::3000
           2a00:1288:f006:1fe::3001
           2a00:1288:f00e:1fe::3001
           87.248.112.181
           87.248.122.122
Aliases: www.yahoo.com
         fd-fp3.wg1.b.yahoo.com
         ds-fp3.wg1.b.yahoo.com
         ds-eu-fp3-lfb.wa1.b.yahoo.com
```

OK - so the first thing that this told me was that the default lookup was on my Host only interface, and that this was failing. When I manually set the server to the one on the NAT interface, boom... the response came back in a split second.
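If you'd rather script the comparison than drive nslookup by hand, Node's `dns` module lets you point a resolver at one specific server, much like nslookup's `server` command. A rough sketch, assuming the two server addresses from the session above (yours will differ); it says nothing about how Windows itself chooses which server to ask. `makeResolver`, `timeLookup` and `compare` are made-up names.

```javascript
const dns = require("dns");

// Build a resolver that only asks the given DNS server, like nslookup's "server" command.
function makeResolver(server) {
  const resolver = new dns.promises.Resolver();
  resolver.setServers([server]);
  return resolver;
}

// Time an A-record lookup of `host` against one server; failures are reported, not thrown.
async function timeLookup(server, host) {
  const start = Date.now();
  try {
    const addresses = await makeResolver(server).resolve4(host);
    return { server, ok: true, ms: Date.now() - start, addresses };
  } catch (err) {
    return { server, ok: false, ms: Date.now() - start, error: err.code };
  }
}

// Compare the Host only and NAT addresses from the nslookup session.
async function compare(host = "www.yahoo.com") {
  for (const server of ["192.168.126.1", "192.168.146.2"]) {
    console.log(await timeLookup(server, host));
  }
}
```

Calling `compare()` from a Node prompt on the guest prints one result per server, so a slow or failing server stands out by its `ms` value and `ok` flag.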

Next problem - how do I get it to default to the one that works? (Yes - I could also attempt to get the Host only one working properly, but not if it's easy to switch to the other - life's too short, and with networking, getting it to stop being irritating is much more achievable than understanding it!)

After a bit of Googling I discovered that Windows brings up the network interfaces in a specified order, and the first one becomes the "primary" interface, which in turn is used as the default for DNS and goodness knows what else. All I needed to do was change the order. I picked up a hint from The Regime and was almost surprised to find that in Windows Server 2012, too, you can get to the advanced settings of the network interfaces by pressing and releasing the Alt key in the Network Connections window, which brings up the hidden menu bar (Advanced, then Advanced Settings). (Who knows about this stuff? Isn't that just disturbing?) A couple of minutes later I was testing it and finding that it worked.

So it's all good. I still don't understand networking, but that's never been a serious itch for me.

Getting IIS Express to run in a 64 bit process, and other fun Tridion content delivery configurations

In the last couple of days, I've spent far more time than I'd like figuring out how to get a Tridion-based web application to run correctly under Visual Studio. There are three basic choices:

- Run it directly using Visual Studio

- Run it using IIS Express

- Run it using IIS (non-Express version)

As the application is intended to run on a 64 bit architecture, there are some challenges. Visual Studio runs in 32 bit mode, so the first option is out. Using full-on IIS is an attractive thought; you can manually configure the application pool to run in 64 bit mode. Unfortunately, getting a debug session up and running takes more configuration than that. You have to set up the web site correctly, and it was just too fiddly. I ran out of time, or steam or whatever. (Somebody will probably tell me it's easy, and I dare say it is when you know how, and aren't spending time you really should be spending on something else. Any hints are always welcome.)

Of course, with a Tridion site, half the game is making sure you have the correct DLLs in place for the processor architecture you are using. Along the way, I discovered that the quick and dirty way to tell if you have a 32 or 64 bit version of xmogrt.dll (Juggernet's "native" layer) is the size. The 64 bit version comes in at 1600KB and the 32 bit version is about half that at 800KB or so. This varies from version to version, so on a 2013 system, it's 1200ish/900ish KB, but once you get the hang of it, you can tell them apart at sight, which is pretty useful. The other DLLs are also important, although as far as I can tell, only Tridion.ContentDelivery.AmbientData.dll is hard-compiled for 64 bit architecture, at least on the 2011 system I was working on. The rest of the .NET assemblies are compiled to MSIL, which of course, will run on either architecture.

But I digress. The thing I wanted to blog (and this will definitely be tagged note-to-self) was how to get IIS Express to run in 64 bit mode. By default it runs on 32 bits, but if you follow this link:

... you will find the following nugget of goodness:

You can configure Visual Studio 2012 to use IIS Express 64-bit by setting the following registry key:

reg add HKEY_CURRENT_USER\Software\Microsoft\VisualStudio\11.0\WebProjects /v Use64BitIISExpress /t REG_DWORD /d 1

However, this feature is not supported and has not been fully tested by Microsoft. Improved support for IIS Express 64-bit is under consideration for the next release of Visual Studio.

Very handy indeed. Running under IIS Express is just one click of the button. It just works.

And by way of a PS (postscript, that is, not PowerShell), here's how you find the processor architecture of a .NET assembly (this time on my 2013 image):

```
PS C:\inetpub\www.visitorsweb.local\bin> [reflection.assemblyname]::GetAssemblyName((resolve-path '.\Tridion.ContentDelivery.AmbientData.dll')).ProcessorArchitecture
MSIL
```

Well anyway - it's no fun scratching your head over stuff like this. Maybe this helps.

Understanding the eight Powershell profiles (or Nobody expects the Spanish Inquisition)

A while ago, I started using the Windows Powershell ISE, and immediately fell foul of the fact that the scripts I was debugging didn't work because their environment was set up in my $profile. It turns out that the ISE has a separate profile from the one used in the PowerShell proper. It's easy enough to deal with once you know about this, although my first attempt at working round the problem would have been better if I'd known how easy it is to get the names of the different profile locations. Things would have gone better if I'd first read this article by Ed Wilson on the Scripting Guy blog: Understanding the Six Powershell Profiles.

In this article, he explains that there are four profiles which are loaded by the PowerShell on startup. You can get the names of them by looking at the $profile variable. The commonest use of this is to do something like:

notepad $profile

to edit your profile. Well, as you already know by this point, there are more. You can see these with a little more typing, as follows:

```powershell
$profile.CurrentUserAllHosts
$profile.CurrentUserCurrentHost
$profile.AllUsersCurrentHost
$profile.AllUsersAllHosts
```

What you get when you type $profile is the same as $profile.CurrentUserCurrentHost.

So that's four. You can get to six quite easily by understanding that the ISE and the PowerShell are different hosts, so are entitled to specify their own locations for the CurrentHost versions. Of course, having got that far, we can immediately suppose that any application that hosts the PowerShell might also have its own CurrentHost profiles, so in theory it's limitless. Whatever... I don't have any beef with Ed for saying there are six - he was describing the general case, and doing a good job of helping people avoid a slightly non-obvious pitfall. (Like I said - I wish I'd read his article earlier than I did.)

So there you have it. We can say eight, ten, twelve... whatever... so what's the point of this blog post? Well, it turns out that I managed to uncover a different non-obvious pitfall. Drove me crazy for a while, I can tell you!

I quite often use Vim for text editing, and I thought it would be cool to get it wired up to work with PowerShell. I already had PowerShell syntax highlighting set up, but I wanted to be able to open up files and edit them from within the shell. There's plenty of material on the Internet about how to do this, and I set to with a will. Not too hard - set up a couple of aliases and so forth. But then I found that when I opened up files directly in the shell (instead of spawning a gVim window) the syntax highlighting combined with the background color of the shell was just awful. If I am in an elevated shell, I have this set to DarkRed, and the code to do this is in my profile. So I went to disable it. Looked in my profile. The colour changing code wasn't there. Then I remembered the other profiles - and that I'd put this code into one of the AllUsers profiles, or at least I thought I had - because it wasn't there either. Well, it was definitely executing. If I started the PowerShell with -noprofile, I got a dark blue background, and without -noprofile, I got red. Hmm... Then I edited all four profiles so that each would emit its name when executed. Nothing doing! The AllUsers profiles weren't even executing.

Eventually I poked at it some more, and realised that if I used Notepad to edit the AllUsers profiles, I could see my colour changing code, but from vi I saw my "This is the AllUsersCurrentHost profile" line. Bingo! You've probably guessed by now. It was a 64 bit server, and although the AllUsers profiles live in C:\Windows\System32, there is a separate set in C:\Windows\SysWOW64\, and a 32 bit program that asks for a file in System32 is silently redirected to SysWOW64. So which file you actually end up editing depends on whether you are using a 32 bit or a 64 bit editor, and that's why the changes I made from vi never showed up in my 64 bit shell.
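The rule that bit me can be written down as a tiny function. This is a simplified model of the WOW64 file system redirector, assuming Windows lives in C:\Windows: a 32 bit process on 64 bit Windows that asks for a path under System32 gets the same path under SysWOW64, unless it goes through the special Sysnative alias, which reaches the real System32. The real redirector also exempts a handful of System32 subdirectories, which this sketch ignores. `effectivePath` is a made-up name.

```javascript
// Simplified model of WOW64 file system redirection. Ignores the exempted
// subdirectories (such as drivers\etc). Assumes Windows lives in C:\Windows.
const WINDIR = "c:\\windows\\";

function effectivePath(path, processIs32Bit) {
  if (!processIs32Bit) {
    return path; // 64 bit processes see the file system as it really is
  }
  const lower = path.toLowerCase();
  if (lower.startsWith(WINDIR + "sysnative\\")) {
    // Sysnative is the escape hatch a 32 bit process uses to reach the real System32.
    return "C:\\Windows\\System32\\" + path.slice((WINDIR + "sysnative\\").length);
  }
  if (lower.startsWith(WINDIR + "system32\\")) {
    return "C:\\Windows\\SysWOW64\\" + path.slice((WINDIR + "system32\\").length);
  }
  return path; // everything outside System32 is left alone
}

// The AllUsersAllHosts profile lives in $PSHOME.
const allUsersProfile = "C:\\Windows\\System32\\WindowsPowerShell\\v1.0\\profile.ps1";
console.log(effectivePath(allUsersProfile, false)); // the file a 64 bit editor opens
console.log(effectivePath(allUsersProfile, true));  // the file a 32 bit editor opens
```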

So according to Ed's counting system, the total is now eight!

Fun with tag clouds

Alvin Reyes recently posted about Tridion bloggers, and included a bunch of tag clouds based on the blogs of various folks at SDL. I didn't want to miss out on the fun, so I've followed his link to Wordle and created my own. For some reason, it's strangely satisfying. I'm quite pleased with it. :-)

Pacman

This is mostly by way of a "note to self". I've recently started working at a customer where connecting my computer to their network is not just allowed, but necessary. Once connected, if I want to use the Internet, I have to go through their filtering proxy - presumably to keep the badness of the Internet from their systems (and yes, they do pay a lot of attention to ensuring the machine is virus-free). Previously, when I worked there for a day or two, setting up the proxy was a minor irritation, but as I'm going to be there rather longer, the idea of reconfiguring my networking twice a day started to look pretty unattractive. My first attempt at solving this had been to have a couple of scripts that set up the proxy by making the relevant registry settings, but unfortunately, Windows doesn't pick these up immediately. Yeah - sure - if I could remember to run the scripts before shutting down it might work, but I'm not that obsessive. Or I could get Windows to pick up the settings by opening the various screens... Internet Options... Connections... LAN Settings... oh wait... there had to be a better way.

It turns out that there's something called a Proxy Auto-Configuration (PAC) file. If you select "Automatically detect settings", Windows will try to locate one of these on the network using the Web Proxy Auto-Discovery Protocol (WPAD); however, the customer in question doesn't do this. My needs were simple enough, though, so I checked the next box down: the one that says "Use automatic configuration script". All that remained was to create the script.

It turns out that you write such things in JavaScript, and it's simply a matter of writing a function whose name, FindProxyForURL, is fixed by the PAC format, making use of helper functions that the browser makes available, such as dnsDomainIs(), isResolvable(), isInNet() and myIpAddress(). Here's what I ended up with (although I'll probably add refinements):

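```javascript
function FindProxyForURL(url, host) {
    var customerProxy = "PROXY 10.62.40.42:1234";
    if (atCustomer()) {
        // Internal and local hosts bypass the proxy, even at the customer
        if (dnsDomainIs(host, ".internal.customer.com") || dnsDomainIs(host, "localhost") || dnsDomainIs(host, ".local")) {
            return "DIRECT";
        }
        // Everything else goes through the customer's filtering proxy
        return customerProxy;
    }
    // Anywhere else, connect directly
    return "DIRECT";
}

// Am I on the customer's network? Only their internal DNS can resolve this name.
function atCustomer() {
    return isResolvable("server.not.on.external.dns");
    // or maybe
    // return isInNet(myIpAddress(), "10.62.0.0", "255.255.0.0");
}
```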

Nothing fancy, but it works. I suspect I'll find a few edge cases where I maybe have to enhance the script or even configure things by hand, but for now I have the satisfaction of knowing I can just turn up, plug in, and start work.
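If you're curious what the commented-out isInNet() alternative in atCustomer() actually tests, it's a simple mask comparison: an address is in the network if (address AND mask) equals (pattern AND mask). In a real PAC file the browser provides isInNet() for you; the sketch below reimplements just that comparison for dotted IPv4 strings so you can try it outside the browser. `ipv4ToInt` and `inNet` are made-up names, and unlike the real isInNet() this version does no DNS resolution of host names.

```javascript
// Convert a dotted-quad IPv4 string to an unsigned 32 bit integer.
function ipv4ToInt(ip) {
  const parts = ip.split(".").map(Number);
  if (parts.length !== 4 || parts.some((p) => !Number.isInteger(p) || p < 0 || p > 255)) {
    throw new Error("Not an IPv4 address: " + ip);
  }
  // ">>> 0" turns JavaScript's signed 32 bit result back into an unsigned one
  return ((parts[0] << 24) | (parts[1] << 16) | (parts[2] << 8) | parts[3]) >>> 0;
}

// The comparison behind isInNet(): (address & mask) === (pattern & mask).
function inNet(ip, pattern, mask) {
  const m = ipv4ToInt(mask);
  return ((ipv4ToInt(ip) & m) >>> 0) === ((ipv4ToInt(pattern) & m) >>> 0);
}

console.log(inNet("10.62.40.17", "10.62.0.0", "255.255.0.0"));  // true
console.log(inNet("192.168.1.20", "10.62.0.0", "255.255.0.0")); // false
```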

Dumping publication properties to a spreadsheet - a Powershell one-liner

A colleague mentioned to me today that he'd solved a problem with Tridion publishing that had been caused by the publication path being incorrectly set on some publications. We talked about how useful it would be to be able to get a summary of the paths without having to open every publication. Time for a powershell one-liner! How about this?

$core.GetSystemWideList((new-object PublicationsFilterData)) | select-object -property Title,MultimediaPath,MultimediaURL,PublicationPath | Export-Csv -path c:\pubs.csv -NoTypeInformation

Those of you who have been following along will notice that I have imported the core service namespace using the reflection module, but even on a bare Tridion system, you could type this easily enough. The CSV file can be opened up directly in Excel, and Bob's your uncle.

A Tridion tree-walk in Powershell

Now that I've got some reasonably terse syntax working for Tridion scripting, it's time to start building out some tooling to make the whole thing useful. It's often handy to be able to enumerate everything in your Tridion system, so walking the tree is a basic operation. You don't want to write the tree walk every time you have a different operation to perform, so it's handy to abstract the mechanics of the recursion out into a function. Somewhere in the nether regions of this blog, you'll find a JavaScript implementation of such a function. The basic technique I used in JavaScript was to have my tree-walking function accept a "process" function as an argument. For each item in your system, this is invoked, and is able to perform whatever processing is necessary on your item. (In the JavaScript version, I actually had two functions: process and filter. The filter function was responsible for deciding whether the item was interesting to process. In practice, this is probably too much abstraction. You can just as easily code an if-block in your process function, so on this occasion I'm restricting myself to just the one.)

To anyone who has written any JavaScript, it's pretty much impossible to miss the fact that functions are first-class objects. It may not be immediately apparent that this is true in Powershell, but it is. A Script Block in Powershell is simply an anonymous function, and you can pass them around in variables or as parameters to other functions. (These days, the concept isn't even weird to C# hackers, what with lambda expressions and all.)

So - here goes: if you start with the function "recurseTridionItems" shown below....

```powershell
import-module Reflection
import-namespace Tridion.ContentManager.CoreService.Client

function recurseTridionItems {
    Param(
        [parameter(Mandatory=$true)]
        [ValidateNotNullOrEmpty()]
        [SessionAwareCoreServiceClient]$core,
        [IdentifiableObjectData]$parent,
        [ScriptBlock]$scriptblock,
        [int]$level = 0
    )
    $ro = new-object ReadOptions
    if ($parent -eq $null) {
        # No parent: start at the top, with every publication in the system
        [PublicationData[]]$items = @($core.GetSystemWideList((new-object PublicationsFilterData)))
        foreach ($item in $items) {
            # Re-read each item, because list results are only partially loaded
            $fullItem = $core.Read($item.Id, $ro)
            &$scriptblock $fullItem $level
            recurseTridionItems $core $fullItem $scriptblock ($level + 1)
        }
    }
    else {
        # Folders and Structure Groups need a different list filter from publications
        if ($parent -is [OrganizationalItemData]) {
            $items = $core.GetList($parent.Id, (new-object OrganizationalItemItemsFilterData))
        } else {
            $items = $core.GetList($parent.Id, (new-object RepositoryItemsFilterData))
        }
        foreach ($item in $items) {
            $fullItem = $core.Read($item.Id, $ro)
            &$scriptblock $fullItem $level
            # Only containers have children to recurse into
            if ($fullItem -is [PublicationData] -or $fullItem -is [OrganizationalItemData]) {
                recurseTridionItems $core $fullItem $scriptblock ($level + 1)
            }
        }
    }
}
```

... this will take care of all the tree walking. To show how you might use it, I've written a script block that outputs the Title of each item, indented according to the recursion level.

EDIT: my first version of this function didn't re-read the items that come from GetList. It worked fine for the trivial case of listing the titles but as soon as I tried anything more interesting, I discovered that GetList returns objects that are only partially loaded. This is apparently by design, as the documentation mentions it.

recurseTridionItems $core $null {param($item,$level)"`t" * $level + $item.Title}

On my system, this produces output like this:

_Empty Master

Building Blocks

Default Templates

Outbound E-mail

Generate Plain Text E-mail

Outbound E-mail Post-processing

Outbound E-mail Pre-processing

Generate Plain Text E-mail

Outbound E-mail Post-processing

Outbound E-mail Pre-processing

Set Output Item By Email Mode

Tridion.OutboundEmail.Templating.Templates

SDL External Content Library

Adjust SiteEdit 2009 markup for External Content Library i

Adjust SiteEdit 2012 markup for External Content Library i

Resolve External Content Library items

Search External Content Library items

Tridion.ExternalContentLibrary.Templating

Component Query

Convert Html to Xml

Convert Xml to Html

Default Finish Actions

Dreamweaver Region Selection

Enable inline editing for content

Enable inline editing for Page

Extract Binaries from Html

Image Resizer

Link Resolver

Publish Binaries in Package

Default Component Template

Default Component Template for UGC

Default Page Template

Default Page Template for UGC

Activate Tracking

Cleanup Template

Component Query

Convert Html to Xml

Convert Xml to Html

Default Dreamweaver Component Design

Default Dreamweaver Page Design

Default Finish Actions

Default UGC Dreamweaver Template design

Enable inline editing for content

Enable inline editing for Page

Enable User Generated Content Processing

Extract Binaries from Html

Extract Components from Page

Image Resizer

Link Resolver

Publish Binaries in Package

Sample XSLT Component Design

Target Group Personalization

Tridion.SiteEdit.Templating

Tridion.Ugc.Templating.DefaultTemplates

Default Multimedia Schema

root

01 Definitions

Building Blocks

Default Templates

Outbound E-mail

Generate Plain Text E-mail

I think I'll truncate it there: you get the picture. Obviously, this is a trivial use-case that probably isn't terribly useful on an industrial scale installation. Fortunately, your script-block doesn't have to be a one-liner, and you can easily expand on this technique to meet your own needs. I should think I'll find quite a few uses for it myself. Just one word of caution: this was just a quick hack, and I haven't tested it exhaustively.
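For comparison with the JavaScript approach mentioned earlier, here's the same shape of recursion sketched in JavaScript, run against an in-memory tree rather than the core service. This isn't that older implementation, just a quick illustration: the tree and its `children` property are invented stand-ins for what GetSystemWideList(), GetList() and Read() give you in the real script, and `walkTree` is a made-up name. What matters is that the walker knows nothing about what you want to do with each item; that's all in the callback.

```javascript
// Walk a tree depth-first, calling `process(item, level)` on every node.
// The walker only knows how to find children; what happens to each item is
// entirely up to the callback, just like the script block in the PowerShell.
function walkTree(items, process, level = 0) {
  for (const item of items) {
    process(item, level);
    if (item.children && item.children.length > 0) {
      walkTree(item.children, process, level + 1);
    }
  }
}

// A made-up, in-memory stand-in for a couple of publications.
const publications = [
  {
    title: "_Empty Master",
    children: [
      { title: "Building Blocks", children: [{ title: "Default Templates", children: [] }] },
    ],
  },
  { title: "01 Definitions", children: [{ title: "Building Blocks", children: [] }] },
];

// The equivalent of the title-indenting script block.
const lines = [];
walkTree(publications, (item, level) => lines.push("\t".repeat(level) + item.title));
console.log(lines.join("\n"));
```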

Straightforward Powershell scripting with the Tridion core service

Almost exactly a year ago, I blogged about Getting to grips with the Tridion core service in Powershell. The core service had been around for a while even then, and the point was to actually start using it for some of the scripting tasks I had habitually done via the TOM. In many ways the TOM was much more script-friendly. Of course, that might have had something to do with the fact that it was created expressly for use from scripting languages. The Tridion core service API wasn't. I don't know exactly what they had in mind, but I'd imagine the thinking was that most mainstream users would use C#. Yeah, sure - any compliant .NET language would do, but F#? Nah!

But a year further on, and where are all those scripts I was going to write? I have to say, the comfort zone for scripting is quite different than for writing "proper" programmes. There's huge usefulness in being able to hack out something quickly, and very much a sense that stuff will be intermingled in a code-is-data stylee. So when I started actually trying to use the core service for scripting tasks, it sucked pretty hard. There were two main areas of difficulty:

- Getting the core service wired up in the first place

- Powershell doesn't natively have the equivalent of C#'s using directive to allow you to avoid typing the full namespace of your type.

I covered the first point last year. Suffice it to say that currently, I'm still using Peter Kjaer's Tridion powershell module, although at the moment I'm running a local copy, modified to cope with the Tridion 2013 client, and also to allow me to specify which protocol I want to use. (Obviously I don't want to have a permanent fork, so with a bit of luck, Peter will be able to integrate some of this work into the next release of the module.) On a related subject, my experience has been that working with the core service client has some fundamental differences from using the TOM. You could keep a TDSE lying around for minutes at a time, and it would still be usable, even after a method call had failed. The core service, even when you're on the same server, is most definitely a web service. Failed calls tend to leave your connection in a "faulted" state (i.e. unusable), and the timeouts are generally shorter. Once you are aware of this, you can adjust your coding style accordingly, but it adds somewhat to the ritual.
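One way to adjust your coding style is to stop treating the client as something long-lived, and instead wrap each call so that a failure throws the client away and the next attempt gets a fresh one. Here's that pattern sketched generically in JavaScript (synchronous, like the PowerShell calls). It is not the core service API: `createClient` stands in for whatever hands you a new connection (Get-TridionCoreServiceClient, in the scripts here), `makeClientHolder` and `fakeClientFactory` are made-up names, and a single retry is an arbitrary choice.

```javascript
// Generic sketch: hold on to one client, but throw it away after any failure
// (a failed call may have left it faulted) and retry with a fresh one.
function makeClientHolder(createClient, retries = 1) {
  let client = null;
  return function withClient(operation) {
    for (let attempt = 0; ; attempt++) {
      if (client === null) client = createClient();
      try {
        return operation(client);
      } catch (err) {
        client = null; // never reuse a client after a failed call
        if (attempt >= retries) throw err;
      }
    }
  };
}

// A fake factory for trying it out: the first client it hands out always fails.
let created = 0;
function fakeClientFactory() {
  const id = ++created;
  return {
    read(uri) {
      if (id === 1) throw new Error("simulated failure");
      return `${uri} read by client ${id}`;
    },
  };
}

const withClient = makeClientHolder(fakeClientFactory);
console.log(withClient((c) => c.read("tcm:0-1-1"))); // tcm:0-1-1 read by client 2
```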

The namespace issue is on the face of it more trivial. OK - so it's a PITA to have to type something like:

$folder = new-object Tridion.ContentManager.CoreService.Client.FolderData

when all you wanted was a folder. You could argue: "well it works, doesn't it? Get over it!". However, I found all this extra verbiage too much of a distraction, not only when reading and editing longer scripts, but also when "knocking off a quick one". After all, what's the point of having a great scripting environment if your one-liners aren't?

So what to do? Well I scoured the Internet, and discovered that Powershell has something called a Type Accelerator. You've seen these often enough, as there are several available by default. For example, you can (and should) type "[string]" when what you really mean is "[System.String]". Unfortunately, creating type accelerators isn't completely straightforward, but No Worries, the Powershell community is vibrant and there are implementations available that take care of it for you. (OK, at the time of writing I know of one that works, but that's enough, eh? My first Googling had taken me to the Type Accelerators module (PSTX) at codeplex. At first this seemed to be useful, but as soon as I moved to Tridion 2013, support for Powershell 3 became a hard requirement. This project is not actively maintained, and it doesn't work in Powershell 3. As I said, it's not straightforward to wire up type accelerators, and the code uses an undocumented API, which changed. Not Microsoft's fault.)

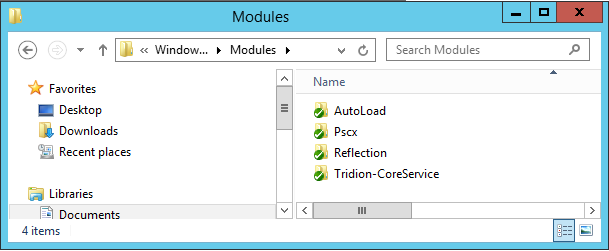

At this point, I went to the Powershell IRC channel (#powershell on freenode) and asked there if anyone knew about fixes or updates. I was steered in the direction of Jaykul's reflection module, available on Poshcode. (Make sure you get the latest version, and beware of the script getting truncated.) Installing modules is a fairly straightforward task: often as simple as dropping the files into a suitably named directory in your WindowsPowerShell modules directory (sometimes you need to "unblock" them) . Here's a shot of what mine looks like: (What you can see is C:\Users\Administrator\Documents\WindowsPowerShell\Modules)

In there you can see the Reflection module and AutoLoad (which is another module it depends on). Apart from that you can see the Tridion core service module (and Pscx).

With all this in place, you are set to start writing your "straightforward" Tridion scripts. I've chosen to demonstrate this by hacking out a script that will create a default publication layout for you. It will be a handy tool to have on my research image, but mostly it's to show some real-world scripting.

```powershell
param ($publicationPrefix = "")

$core = Get-TridionCoreServiceClient -protocol nettcp
import-module reflection
import-namespace Tridion.ContentManager.CoreService.Client

function createPublication {
    Param(
        [parameter(Mandatory=$true)]
        [ValidateNotNullOrEmpty()]
        [SessionAwareCoreServiceClient]$core,
        [parameter(Mandatory=$true)]
        [ValidateNotNullOrEmpty()]
        [string]$title,
        [string]$key,
        [string[]]$parents,
        [switch]$Passthru
    )
    write-host "Creating publication $title"
    $newPublication = $core.GetDefaultData([ItemType]::Publication, "", $null)
    $newPublication.Title = $title
    # The publication key defaults to the title
    if ($key -eq [string]::Empty) {
        $newPublication.Key = $title
    }
    else {
        $newPublication.Key = $key
    }
    # Parents may be given as TCM URIs or WebDAV URLs; anything else is skipped
    foreach ($parent in $parents) {
        $link = new-object LinkToRepositoryData
        if ($parent -match "^tcm:") {
            $link.IdRef = $parent
        } elseif ($parent -match "^/webdav") {
            $link.WebDavUrl = $parent
        } else {
            continue
        }
        $newPublication.Parents += $link
    }
    # Passing ReadOptions makes Create() return the new item; $null returns nothing
    if ($Passthru) {
        $core.Create($newPublication, (new-object ReadOptions))
    }
    else {
        $core.Create($newPublication, $null)
    }
}

function createFolder([SessionAwareCoreServiceClient]$core, [string]$parentId, [string]$title, [switch]$Passthru) {
    write-Host "Creating folder $title"
    $newFolder = $core.GetDefaultData([ItemType]::Folder, $parentId, $null)
    $newFolder.Title = $title
    if ($Passthru) {
        $core.Create($newFolder, (new-object ReadOptions))
    }
    else {
        $core.Create($newFolder, $null)
    }
}

function createStructureGroup([SessionAwareCoreServiceClient]$core, [string]$parentId, [string]$title, [string]$directory, [switch]$Passthru) {
    write-Host "Creating Structure Group $title"
    $newStructureGroup = $core.GetDefaultData([ItemType]::StructureGroup, $parentId, $null)
    $newStructureGroup.Title = $title
    $newStructureGroup.Directory = $directory
    if ($Passthru) {
        $core.Create($newStructureGroup, (new-object ReadOptions))
    }
    else {
        $core.Create($newStructureGroup, $null)
    }
}

# Build the BluePrint from the top down: parents must exist before their children
$chainMasterPub = createPublication $core "$($publicationPrefix)ChainMaster" -Passthru
$rsg = createStructureGroup $core $chainMasterPub.Id "root" "root" -Passthru
$definitionsPub = createPublication $core "$($publicationPrefix)Definitions" -parents @($chainMasterPub.Id) -Passthru
$systemFolder = createFolder $core $definitionsPub.RootFolder.IdRef "System" -Passthru
createFolder $core $systemFolder.Id "Schemas"
$contentPub = createPublication $core "$($publicationPrefix)Content" -parents @($definitionsPub.Id) -Passthru
$contentFolder = createFolder $core $contentPub.RootFolder.IdRef "Content" -Passthru
$layoutPub = createPublication $core "$($publicationPrefix)Layout" -parents @($definitionsPub.Id) -Passthru
# Address the inherited System folder as seen from the Layout publication
createFolder $core $core.GetTcmUri($systemFolder.Id, $layoutPub.Id, $null) "Templates"
createPublication $core "$($publicationPrefix)Web" -parents @($contentPub.Id,$layoutPub.Id)
```

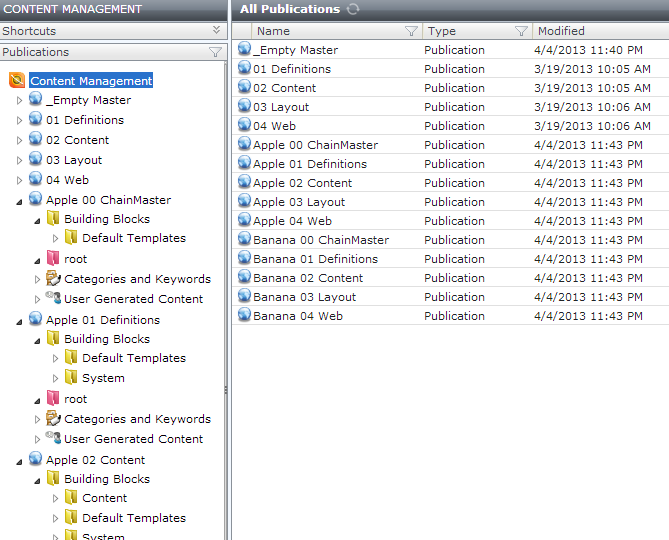

The script accepts a parameter which lets me prefix the publications with some name relevant to whatever I'm doing, so if you invoke it like this:

PS C:\code\dominic\tridion> .\CreateDefaultStructure.ps1 "Apple" Connecting to the Core Service at localhost... Creating publication Apple 00 ChainMaster Creating Structure Group root Creating publication Apple 01 Definitions Creating folder System Creating folder Schemas Creating publication Apple 02 Content Creating folder Content Creating publication Apple 03 Layout Creating folder Templates Creating publication Apple 04 Web PS C:\code\dominic\tridion> .\CreateDefaultStructure.ps1 "Banana" Connecting to the Core Service at localhost... Creating publication Banana 00 ChainMaster Creating Structure Group root Creating publication Banana 01 Definitions Creating folder System Creating folder Schemas Creating publication Banana 02 Content Creating folder Content Creating publication Banana 03 Layout Creating folder Templates Creating publication Banana 04 Web PS C:\code\dominic\tridion>

... you end up with publications like this:

I import the Tridion-CoreService module in my Powershell profile, so it's not needed in the script. (As noted earlier, my copy is a bit hacked, as you can see from the fact that I'm passing a protocol parameter to Get-TridionCoreServiceClient). I don't import the reflection module by default, so this is done in the script, followed immediately by "import-namespace Tridion.ContentManager.CoreService.Client", which is the magic from the Reflection module that wires up all the type accelerators. Once this is done, you can see that I can simply type [ReadOptions] instead of [Tridion.ContentManager.CoreService.Client.ReadOptions], and so on. Much better, I think! :-)
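The order of the calls at the bottom of the script matters, because a publication can only be given parents that already exist. If you ever wanted to describe the layout as data instead of hard-coding the sequence, you'd need to derive an order in which every parent comes first, which is a topological sort. A sketch in JavaScript, using the layout from the script; the `layout` object and `creationOrder` are my own illustration, not part of the script.

```javascript
// The BluePrint layout from the script, as data: publication -> its parents.
const layout = {
  ChainMaster: [],
  Definitions: ["ChainMaster"],
  Content: ["Definitions"],
  Layout: ["Definitions"],
  Web: ["Content", "Layout"],
};

// Depth-first topological sort: every publication appears after all its parents.
function creationOrder(graph) {
  const order = [];
  const state = {}; // undefined = unvisited, 1 = in progress, 2 = done
  function visit(name) {
    if (state[name] === 2) return;
    if (state[name] === 1) throw new Error("BluePrint cycle at " + name);
    if (!(name in graph)) throw new Error("Unknown parent " + name);
    state[name] = 1;
    for (const parent of graph[name]) visit(parent);
    state[name] = 2;
    order.push(name);
  }
  Object.keys(graph).forEach((name) => visit(name));
  return order;
}

console.log(creationOrder(layout)); // [ 'ChainMaster', 'Definitions', 'Content', 'Layout', 'Web' ]
```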

If you're wondering about the -Passthru switch on my functions, this is a PowerShell idiom that lets you indicate whether or not you are interested in the return value. In Tridion, this is controlled by whether or not you pass a ReadOptions argument. Perhaps obviously, the Read() method wouldn't make any sense if it didn't return anything, so a $null works fine. I'm still agonizing over whether it would be more stylish to pass a ReadOptions anyway. What do you think?

Actually that's a good question. What do you think? I'm still trying to find my feet in terms of the correct idioms for this kind of work. Let's get the debate out in the open. Feel free to say mean things about my code (not obligatory). I've got a thick skin, and I'd genuinely value your feedback, especially if you think I'm doing it wrong.