Dominic Cronin's weblog

Encrypting passwords for Tridion content delivery

This is just a quick note to self, because I just spent a few minutes figuring out something fairly trivial and I don't want to forget it.

Previously, to encrypt a password for Tridion content delivery, you would do something like:

java -cp cd_core.jar com.tridion.crypto.Encrypt foobar

It's been a while since I did this, and I hadn't realised that in Web 8.5 it doesn't work any more. They've factored the Crypto class out into a utility jar, so now the equivalent command has become something like:

java -cp "cd_core.jar;cd_common_util.jar" com.tridion.crypto.Encrypt foobar

Of course, these days the jars also have build numbers in the name, so it's a bit uglier. The point is that you have to have cd_core and cd_common_util on your classpath.
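Since the build numbers make the jar names awkward to type, here is a minimal Python sketch of putting the classpath together by pattern. The function name, the directory argument and the glob patterns (anything starting with cd_core or cd_common_util) are my own assumptions for illustration, not anything the product ships with.

```python
import glob
import os


def build_encrypt_command(lib_dir, password):
    """Build the java command line for com.tridion.crypto.Encrypt.

    Assumption: lib_dir contains jars whose names start with cd_core and
    cd_common_util, followed by whatever build number they carry.
    """
    jars = []
    for prefix in ("cd_core", "cd_common_util"):
        matches = sorted(glob.glob(os.path.join(lib_dir, prefix + "*.jar")))
        if not matches:
            raise FileNotFoundError(
                "no jar starting with %r in %s" % (prefix, lib_dir))
        # If several builds are present, take the last one in sorted order.
        jars.append(matches[-1])
    # os.pathsep is ';' on Windows and ':' elsewhere, which is what -cp expects.
    classpath = os.pathsep.join(jars)
    return ["java", "-cp", classpath, "com.tridion.crypto.Encrypt", password]
```

Passing the resulting list to subprocess.run(cmd, check=True) avoids any shell quoting trouble with the classpath separator.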

deployer-conf.xml barfs on the BOM

Today I was working on some scripts to provision, among other things, the SDL Web deployer service. It should have been straightforward enough, I thought. Just copy the relevant directory and fix up a couple of configuration files. Well I got that far, at least, but my deployer service wouldn't start. When I looked in the logs, I found this:

2017-09-16 19:20:21,907 ERROR NonLegacyConfigConditional - The operation could not be performed.

com.sdl.delivery.configuration.ConfigurationException: Could not load legacy configuration

at com.sdl.delivery.deployer.configuration.DeployerConfigurationLoader.configure(DeployerConfigurationLoader.java:136)

at com.sdl.delivery.deployer.configuration.folder.NonLegacyConfigConditional.matches(NonLegacyConfigConditional.java:25)

I thought it was going to be a right head-scratcher. Fortunately, a little further down there was something a little more clue-bestowing:

Caused by: org.xml.sax.SAXParseException: Content is not allowed in prolog.

at org.apache.xerces.parsers.DOMParser.parse(Unknown Source)

at org.apache.xerces.jaxp.DocumentBuilderImpl.parse(Unknown Source)

at com.tridion.configuration.XMLConfigurationReader.readConfiguration(XMLConfigurationReader.java:124)

So it was about the XML. It seems that Xerces thought I had content in my prolog. Great! At least, despite its protestations about a legacy configuration, there was a good clear message pointing to my "deployer-conf.xml". So I opened it up, thinking maybe my script had mangled something, but it all looked great. Then some subliminal, ancestral memory made me think of the Byte Order Mark. (OK, OK, it was Google, but honestly... the ancestors were there talking to me.)

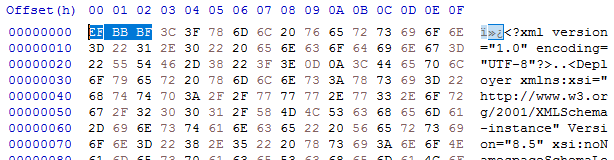

I opened up the deployer-conf.xml again, this time in a byte editor, and there it was, as large as life:

Three extra bytes that Xerces thought had no business being there: the Byte Order Mark, or BOM. (I had to check that. I'm more used to a two-byte BOM, but for UTF-8 it's three. And yes - do follow this link for a more in-depth read, especially if you don't know what a BOM is for. All will be revealed.)

What you'll also find if you follow that link is that Xerces is perfectly entitled to think that, as it's a "non-normative" part of the standard. Great eh?

Anyway - so how did the BOM get there, and what was the solution?

My provisioning scripts are written in Windows PowerShell, and I'd chosen to use PowerShell's "native" XML processing, which amounts to System.Xml.XmlDocument. In previous versions of these scripts, I'd used XLinq, but it's not really a good fit with PowerShell as you can't really use XPath without extension methods. So I gave up XLinq's ease of parsing fragments for a return to XmlDocument. To be honest, I wouldn't be surprised if the BOM problem also happens with XLinq: after all, it's Xerces that's being fussy - you could argue Microsoft is playing "by the book".

So what I was doing was this.

$config = [xml](gc $deployerConfig)

Obviously, $deployerConfig refers to the configuration file, and I'm using Powershell's Get-Content cmdlet to read the file from disk. The [xml] cast automatically loads it into an XmlDocument, represented by the $config variable. I then do various manipulations in the XmlDocument, and eventually I want to write it back to disk. The obvious thing to do is just use the Save() method to write it back to the same location, like this:

$config.Save($deployerConfig)

Unfortunately, this gives us the unwanted BOM, so instead we have to explicitly control the encoding, like this:

$encoding = new-object System.Text.UTF8Encoding $false

$writer = new-object System.IO.StreamWriter($deployerConfig,$false,$encoding)

$config.Save($writer)

$writer.Close()

As you can see, we're still using Save(), but this time with the overload that writes to a TextWriter. The StreamWriter we pass in carries the encoding, and constructing the UTF8Encoding with $false is what suppresses the BOM. This seems to work fine, and Xerces doesn't cough its lunch up when you try to start the deployer.
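If a BOM has already crept into a configuration file, it can also be checked for and stripped outside PowerShell. This is only a sketch in Python, with a function name of my own choosing; it reads the file as raw bytes and removes the three-byte UTF-8 BOM (EF BB BF) if the file starts with it.

```python
import codecs


def strip_utf8_bom(path):
    """Remove a leading UTF-8 BOM from the file at path.

    Returns True if a BOM was found and removed, False if the file
    did not start with one (in which case the file is left untouched).
    """
    with open(path, "rb") as handle:
        data = handle.read()
    if not data.startswith(codecs.BOM_UTF8):
        return False
    with open(path, "wb") as handle:
        # Write everything after the three BOM bytes back to the same file.
        handle.write(data[len(codecs.BOM_UTF8):])
    return True
```

Running it twice on the same file is harmless: the second call finds no BOM and returns False.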

I think it will be increasingly common for people to script their setups. SDL's own "quickinstall" doesn't use an XML parser at all, but simply does string replacements based on its own, presumably hand-made, copies of the configuration files. Still - one of the obvious benefits of having XML configuration files is that you can use XML processing tools to manipulate them, so I hope future versions of the content delivery microservices will be more robust in this respect. Until then, here's the workaround. As usual - any feedback or alternative approaches are welcome.

Finding the powershell profiles you actually have

Many of you Powershell aficionados out there will be familiar with the fact that there are four separate locations where you can place a profile script. These scripts will run when you start the shell, and that allows you to get some default stuff set up. (How hard can it be? Well actually, much harder!)

Today I got irritated with the fact that I can never find which profile I've put something in. It starts with a vague recollection of "didn't I have something in my profile for that?". Then I start by opening a shell and typing:

notepad $profile

... and thereby opening up my $profile.CurrentUserCurrentHost - which to be fair is where I put most stuff. Not there eh? Ok, let's go looking for the other profiles. So I type:

notepad $profile.<TAB><TAB>

and end up at

notepad $profile.AllUsersAllHosts

Then notepad tells me that this one doesn't exist, so I end up going through the same steps for the other two profiles. Especially on a system where they aren't there, it's just irritating. So I put this in my profile (yes, the CurrentUserCurrentHost one, but actually AllHosts would be better, eh?):

function get-profiles {

$profile.PSobject.Properties | ? {Test-Path $_.Value} | select Name, Value

}

Now all I have to do is remember that I put it there.

Connecting to Microsoft SQL Server Developer from Tridion Content Delivery

I've recently been setting up a development image for SDL Web 8.5, and as it's only for use on my development rig, it's fair game to use Microsoft SQL Server Developer edition. It's not supported by SDL, but it's close enough to make it a reasonable risk for my purposes. I got the databases set up and the content manager installed OK, so I moved on to the content delivery stack.

First I hacked together a database test script to make sure I had all the logins correct etc. I've done it this way for years, and you may have seen my blog about it quite a long time ago. Everything seemed fine.

I'd started with the Discovery service, and I'd configured the cd_storage_conf.xml with the relevant database settings I'd just tested. How hard could it be? Except that it didn't work. I got messages in the logs telling me to check my firewall. Doh! Off I went and opened up the firewall ports for my microservices (which I'd forgotten to do) and also 1433 for MSSQL. Still no joy.

Somewhere along the way I'd also disabled loopback checking and double-checked a bunch of other things that can cause trouble. No joy.

I went back to my database test script a few times. It uses a System.Data.SqlClient.SqlConnection to execute a simple command. The connection string specifies '(local)' as the server. I'd had trouble with using '(local)' in the cd_storage_conf.xml in a previous version of Tridion, so I had specified 'localhost' instead, and then when that didn't work, a different name that mapped to the same interface. Still nothing.

The troubling thing was that the test script worked fine. Why was that, when Tridion's java stack had trouble doing the same thing? I should have cottoned on to this way earlier, but eventually I started checking to see if there was actually anything listening on 1433. No there wasn't. Well that helped. And then I started poking around in the network configuration of SQL Server. Sure enough: TCP/IP wasn't enabled. I'm still not sure if this is a Developer edition thing. I seem to recall having come across it before. I'm not the only one. Now that I know the answer, finding a suitable Stack Overflow answer is easy! Maybe I'd had trouble with SQLEXPRESS.

Anyway, at least that explained why my test script worked OK. The SqlConnection client sees '(local)' and is then able to attempt a named pipes or shared memory connection as well as TCP/IP. The java client, on the other hand, doesn't have this repertoire of options and if TCP/IP fails, it's over.
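The "is anything actually listening on 1433?" check is the one I should have started with. A minimal sketch of it in Python follows; the function name and the default timeout are my own assumptions. It only tries a plain TCP connection, which is the one route the java client has.

```python
import socket


def is_listening(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within timeout seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers connection refused, timeouts and name resolution failures.
        return False
```

Calling is_listening("localhost", 1433) on a box where TCP/IP is disabled for SQL Server would be expected to return False, even while a shared memory or named pipes connection from a .NET client still works.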

Anyway - now it's fixed. Just time for a quick Note To Self, and on with the rest of my system.

Mashing your scanned JPGs back into one big PDF

It happens more often these days. You get some form sent to you as a PDF. You print it out, and fill it in, and then you want to scan it back in and send it back. For one reason or another, my scanner likes to scan documents to JPEG files: one file per scan. Grr...

In the past, I've used some PDF printer driver or other to solve this problem, but under the hood they pretty much all use ghostscript, so why not do it directly? I used to install cygwin on my Windows machines to get access to utilities like this, but these days, Windows embeds a pretty much functional Ubuntu.

So yeah - just directly using ghostscript. How hard can it be? Well it turns out that a bit of Googling leads you to typing some pretty gnarly command lines, especially since I had scanned a 15 page document into 15 separate JPG files. And then Adobe Acrobat didn't understand the resulting document. No good at all. So then I googled further and found this.

It turns out that by installing not only ghostscript but imagemagick, the imagemagick "convert" utility knows how to do exactly what you want, presumably by enlisting the help of ghostscript. So simply by cd'ing to the directory where I had my scans, this...

$ convert *.JPG outputfile.pdf

... did the trick. Pretty neat, huh? Note to self....

Decoding webdav URLs (or how to avoid going cross-eyed reading your error messages)

I was doing some Content Porting the other day. When moving code up the DTAP street the general practice is to switch off dependency management and, well, manage the dependencies yourself. This is great for a surgical software release, where you know exactly what's in the package and can be sure that you aren't unintentionally releasing something you hadn't planned to, but....

Yeah - there's always a but. In this case, you have to make sure that all the items your exported items depend on are present, either in the export or in the target system. Sometimes you miss one, and during the import you get a nice error message saying which item is missing. Unfortunately, the location of the item is given as a WebDAV URL. If the item in question has lots of spaces, quote marks, or other special characters in it, by the time you get to read the URL in all its escaped glory, it can be a complete alphabet soup.

So there I was, squinting at some horrible URL and mentally parsing out the escape sequences to figure out what I was looking at... when it dawned on me. Decoding encoded URLs is not work for humans - we have computers for that. So I fired up my trusty Powershell, thinking "hey, I have the awesome power of the .NET framework at my disposal". As it turns out, the HttpUtility class that most devs are familiar with is probably not there in your ordinary desktop OS, but System.Net.WebUtility is. So if you've copied a webdav url into your paste buffer, you can open the shell, type in

[net.webutility]::Ur

From here on tab completion will fill in the rest of UrlDecode, and with one or two more keystrokes and a right-mouse-click you have something like this:

[net.webutility]::UrlDecode("/webdav/Some%20Publication/This%20%26%20that/More%20%22stuff%22%20to%20read/a%20soup%C3%A7on%20of%20something")

and then hitting enter gets you this:

/webdav/Some Publication/This & that/More "stuff" to read/a soupçon of something

which is much more readable.

Of course, if even that is too much typing for you, you can stick something like this in your profile:

function decode ($subject) {

[net.webutility]::UrlDecode($subject)

}

Of course, none of this is strictly necessary - you can always stare at the WebDAV URLs and decipher them as an exercise in mental agility, but some days you just want the easy life.
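For anyone without a PowerShell prompt to hand, the same decoding can be sketched in Python with the standard library's urllib.parse.unquote, here applied to the example URL from above. Note that unquote leaves a literal "+" alone rather than turning it into a space, which is usually what you want for a path.

```python
from urllib.parse import unquote

# The escaped WebDAV URL from the example above.
encoded = (
    "/webdav/Some%20Publication/This%20%26%20that/"
    "More%20%22stuff%22%20to%20read/a%20soup%C3%A7on%20of%20something"
)

# unquote() decodes %XX escapes, treating the bytes as UTF-8 by default.
decoded = unquote(encoded)
print(decoded)
```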

Powershell 5 for tired old eyes

With the release of Powershell 5, they introduced syntax highlighting. This is, in general, a nice improvement, but I wasn't totally happy with it, so I had to find out how to customise it. My problems were probably self-inflicted to some extent, as I think at some point I had tweaked the console colour settings. The Powershell is hosted in a standard Windows console, and the colours it uses are in fact the 16 colours available from the console.

The console colours start out by default as fairly basic RGB combinations. You can see these if you open up the console properties (right-click on the title bar of a console window will get you there). In the powershell, these are given names - the powershell has its own enum for these, which maps pretty directly on to the ConsoleColor enumeration of the .NET framework.

| ConsoleColor | Description | Red | Green | Blue |
|---|---|---|---|---|
| Black | The color black. | 0 | 0 | 0 |
| Blue | The color blue. | 0 | 0 | 255 |
| Cyan | The color cyan (blue-green). | 0 | 255 | 255 |
| DarkBlue | The color dark blue. | 0 | 0 | 128 |
| DarkCyan | The color dark cyan (dark blue-green). | 0 | 128 | 128 |
| DarkGray | The color dark gray. | 128 | 128 | 128 |
| DarkGreen | The color dark green. | 0 | 128 | 0 |
| DarkMagenta | The color dark magenta (dark purplish-red). | 128 | 0 | 128 |
| DarkRed | The color dark red. | 128 | 0 | 0 |
| DarkYellow | The color dark yellow (ochre). | 128 | 128 | 0 |
| Gray | The color gray. | 192 | 192 | 192 |
| Green | The color green. | 0 | 255 | 0 |
| Magenta | The color magenta (purplish-red). | 255 | 0 | 255 |
| Red | The color red. | 255 | 0 | 0 |
| White | The color white. | 255 | 255 | 255 |
| Yellow | The color yellow. | 255 | 255 | 0 |

In the properties dialog of the console these are displayed as a row of squares like this:

and you can click on each colour and adjust the red-green-blue values. In addition to the "Properties" dialog, there is also an identical "Defaults" dialog, also available via a right-click on the title bar. Saving your tweaks in the Defaults dialog affects all future consoles, not only powershell consoles.

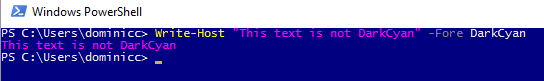

In the Powershell, you can specify these colours by name. For example, the fourth one from the left is called DarkCyan. This is where it gets really weird. Even if you have changed the console colour to something else, it's still called DarkCyan. In the following screenshot, I have changed the fourth console colour to have the values for Magenta.

Also of interest here is that the default syntax highlighting colour for a String is DarkCyan, and of course, we also get Magenta in the syntax-highlighted Write-Host command.

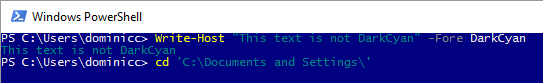

Actually - this is where I first had trouble. The next screenshot shows the situation after setting the colours back to the original defaults. You can also see that I am trying to change directory, and that the name of the directory is a String.

My initial problem was that I had adjusted the Blue console color to have some green in it. This meant that a simple command such as CD left me with unreadable text with DarkCyan over a slightly green Blue background. This gave a particularly strange behaviour, because the tab-completion wraps the directory in quotes (making it a String token) when needed, and not otherwise. This means that as you tab through the directories, the directory name flips from DarkCyan to White and back again, depending on whether there's a space in it. Too weird...

But all is not lost - you also have control over the syntax highlighting colours. You can start with listing the current values using:

Get-PSReadlineOption

And then set the colours for the various token types using Set-PSReadlineOption. I now have the following line in my profile

Set-PSReadlineOption -TokenKind String -ForegroundColor White

(If you use the default profile for this, you will be fine, but if you use one of the AllHosts profiles, then you need to check that your current host is a ConsoleHost.)

Anyway - lessons learned... Be careful when tweaking the console colours - this was far less risky before syntax highlighting... and you can also fix the syntax highlighting colours if you need to, but you can only choose from the current console colours.

Spoofing a MAC address in gentoo linux

I spent a few hours this weekend fiddling with networking things at home. One of the things I ran into was that the DHCP server provided by my ISP was behaving erratically. Specifically, it was being very fussy about giving out a new lease. It would give out a lease to a Windows 7 system I was using for testing, but not to my Gentoo server. At some point, having spent the day with this kind of frustration, I was ready to put up with almost any hack to get things running. Someone on the #gentoo IRC channel suggested that spoofing the MAC address that already had a lease might be a solution. Their solution was to do this:

ifconfig eth0 down

ifconfig eth0 hw ether 08:07:99:66:12:01

ifconfig eth0 up

Here, you have to imagine that eth0 is the name of the interface, although on my system it isn't any more. (Another thing I learned this weekend was about predictable interface names.) You should also imagine that 08:07:99:66:12:01 is the MAC address of the network interface on my Win7 system.

The trouble with this is that it doesn't integrate very well in the standard init scripts that get things going on a Gentoo system. Network interfaces are started by running /etc/init.d/net.eth0 (although that's just a link to another script). The configuration is to be found in /etc/conf.d/net where you can add directives that control the way your network interfaces are configured. The most important of these are the ones that begin with "config_". For example, to set up a static IP for eth0, you might say something like:

config_eth0="192.168.0.99 netmask 255.255.255.0 brd 192.168.0.255"

or for DHCP it's much simpler:

config_eth0="dhcp"

So my obvious first try for setting up a spoofed MAC address was something like this:

config_eth0="dhcp hw ether 08:07:99:66:12:01"

but this didn't work at all. Anyway - after a bit of fiddling and more Googling (sorry - I can't remember where I found this) it turned out that there's a specific directive just for this purpose. I tried this

mac_eth0="08:07:99:66:12:01"

config_eth0="dhcp"

It works a treat. Note that the order is important, which is obvious once you know it I suppose, but wasn't obvious to me until I'd got it wrong once.

The good news after that was that for an established lease, everything worked rather better.

Moving your Tridion databases

As part of setting up my new laptop, I installed MSSQL and obviously I wanted to have my existing Tridion databases available. My Tridion image had previously not had a database - I had that running natively on the old laptop, but I'd decided to go with a more conventional approach and run it in the image with Tridion. This transition had a couple of interesting moments, and hence this post.

Moving the databases and getting MSSQL security working again.

The moving part was fairly simple. I just detached all the databases, and copied the pairs of .MDF and .LDF files over to the new location and attached them.

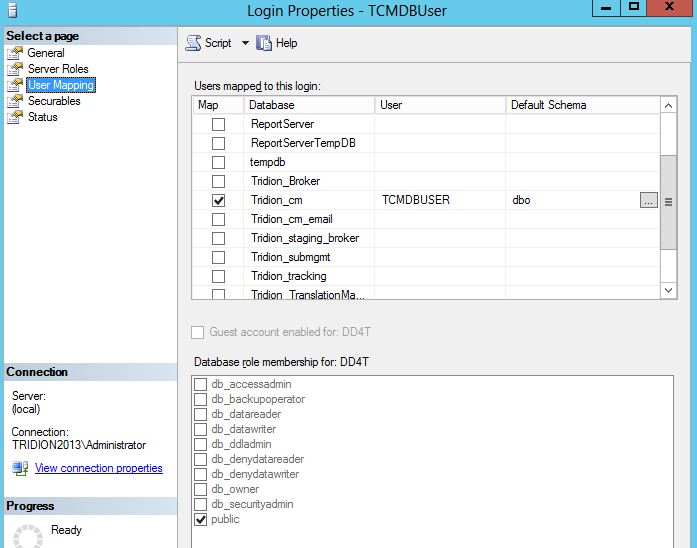

Once you've done this, you'll find that in each database, if you look under Security/Users, you'll find a User with a name that matches the login that you use in your Tridion configuration... for example: TcmDbUser. Unfortunately, this isn't enough. There are (at least) two kinds of User. The one you can see in your database (this is strictly a "database principal") can't be used for logging in. For that you need a "server principal", and these are to be found in your MSSQL instance under Security/Logins. For everything to work correctly, there needs to be a mapping between the database principal and the server principal. You can see this if you look in a correctly configured system. Right click on the login and open the properties, and open up the user mapping page. It should look something like this:

So what we're aiming for is to have a matching Login and database User, with the same name. Creating a Login is easy enough, but if you try to add the mapping by hand in the User Mapping page, it will fail, because it wants to create a database user, and a database user with the same name already exists. (You could delete it, but then you'd have a world of pain trying to figure out all the properties and settings that the Tridion database scripts take care of automatically. I'm not even sure if support would ever talk to you again if you did this.)

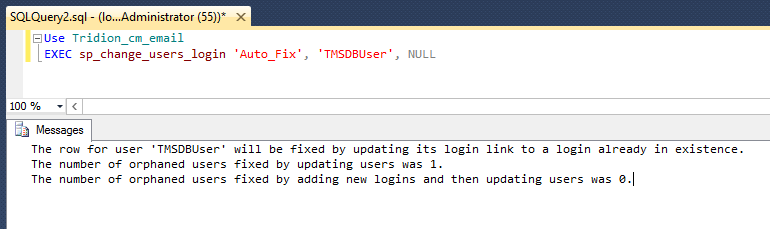

Fortunately, there's a better way. You can do it via SQL with various ALTER USER commands, but then you are going to be deeper into the security features of MSSQL than any normal person ought to wish for. (In this context, DBAs aren't normal, but then they won't be needing to read this blog post, will they?) However, you don't need to figure out all that SQL, because there's a system procedure (sp_change_users_login) that does exactly what you want. As long as your Login and User have the same name, you can just use the Auto_Fix action. For the TcmDbUser example above, run in the context of each database, that would be something like:

EXEC sp_change_users_login 'Auto_Fix', 'TcmDbUser'

Remembering the database settings you'd forgotten about.

So I had all the MSSQL stuff correctly set up, or so I thought, but when I started to try to use the Tridion GUI, I kept getting error notifications in the Message centre.

A network-related or instance-specific error occurred while establishing a connection to SQL Server. The server was not found or was not accessible.

Verify that the instance name is correct and that SQL Server is configured to allow remote connections. (provider: SQL Network Interfaces, error: 26 - Error Locating Server/Instance Specified)

This was pretty odd. I could see most of the GUI working fine, and publications were listed OK, but other lists weren't populated. I speculated that it might only be lists served via service calls that had problems, but when I checked the core service, it was able to list out my entire system. I spent quite some time fiddling with various settings and checking that named pipes etc. were configured correctly, before I eventually got smart enough to check T-REX again. In an old post from 2011, Rick Pannekoek suggested that a similar problem might be caused by the outbound email configuration.

Sure enough - I'd forgotten that outbound email has its own database configuration (if I'd ever known it - the installer sets it all up and mostly you never need to look there, unless you're actually doing outbound email). Anyway - I certainly hadn't realised that this would break the Content Manager's GUI.

A quick visit to:

C:\Program Files (x86)\Tridion\config\OutboundEmail.xml

and then a bit of fiddling with decrypting and re-encrypting (there are scripts for this that come with the installer), and I had my system in fully working order.

Parameter type quirks of the XSLT mediator

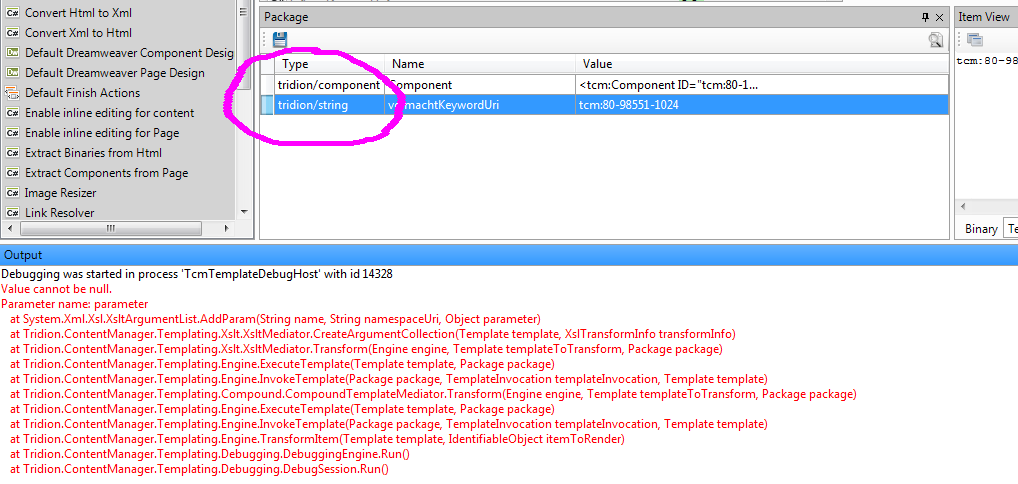

Today I was working on a template with an XSLT building block. I'd added a parameter to the package further up, and expected to use it simply by having an <xsl:param/> element with a matching name. Instead I got the error message you can see in the screencap below... Value cannot be null, Parameter name: parameter.

So what's going on here? Well I had a bit of a dig... (obviously by using my secret powers, and nothing as humdrum as technology) and came up with a couple of interesting things. Firstly, the way I'd imagined things was all wrong. I had assumed that the mediator would loop through the package variables, and add them as parameters to the XSLT. In fact, it's the other way round. The mediator parses the XSLT to get the param elements that are declared, and loops through these to see if it can find a satisfactory parameter to add.

If you look in the documentation, you will find that there are some "magic" parameter names that will cause the mediator to pass various relevant data items as parameters. These are tcm:Publication, tcm:ResolvedItem, tcm:ResolvedTemplate and tcm:XsltTemplate. In addition to these documented parameters, tcm:Page and tcm:ComponentTemplate would also appear to work under the correct circumstances, but of course, if you want your templates to be future-proof, it's better not to use such undocumented features, especially seeing as you could just add the relevant items as XML to your package, and have the same result. It all reminds me of the old XSLT component templates, that also had magic parameters that very few people knew about.

Anyway, back to my bug - for it is indeed a bug. In addition to providing magic parameters, of course the mediator also wires up parameters that are in the package. So - having found a parameter name in the XSLT, it looks for a package item with the matching name. If the item is of type "text" or "html", then it gets added as a string. For any other item type, it tries to get an appropriate XmlDocument and add that. If this process fails, any exceptions get swallowed, and instead of an XmlDocument the "parameter" parameter of AddParam becomes null. And then we see the aforementioned "Value cannot be null. Parameter name: parameter" message, which is the .NET framework quite correctly checking its input values and refusing to play.

The solution is easy - instead of using ContentType.String when I added my parameter to the package, I used ContentType.Text, and everything worked like a charm. But not obvious, and hence the blog post. I'm sure to forget this, and having it in my "external memory" might help.

It's easy to see how this could happen. In fact, it's our old friend LOLA. The GetAsXmlDocument() method of a Templating Item returns a null if it can't manage to return the relevant XmlDocument - for all I know, this is the correct semantics for such a method. Maybe there are very good reasons for it. Still - if you're writing client code, and you don't know this, you'll fail to do the null check, and things will break. FWIW the null check is also missing in older versions of the mediator.

So - there - I've got that off my chest. I should probably report this to customer support. But it's the weekend, and seeing as my stuff works, and the answer is now google-able, I might possibly not have that much energy :-)